Overall - Product System Design - Hello Interview

Here is some note for product system design.

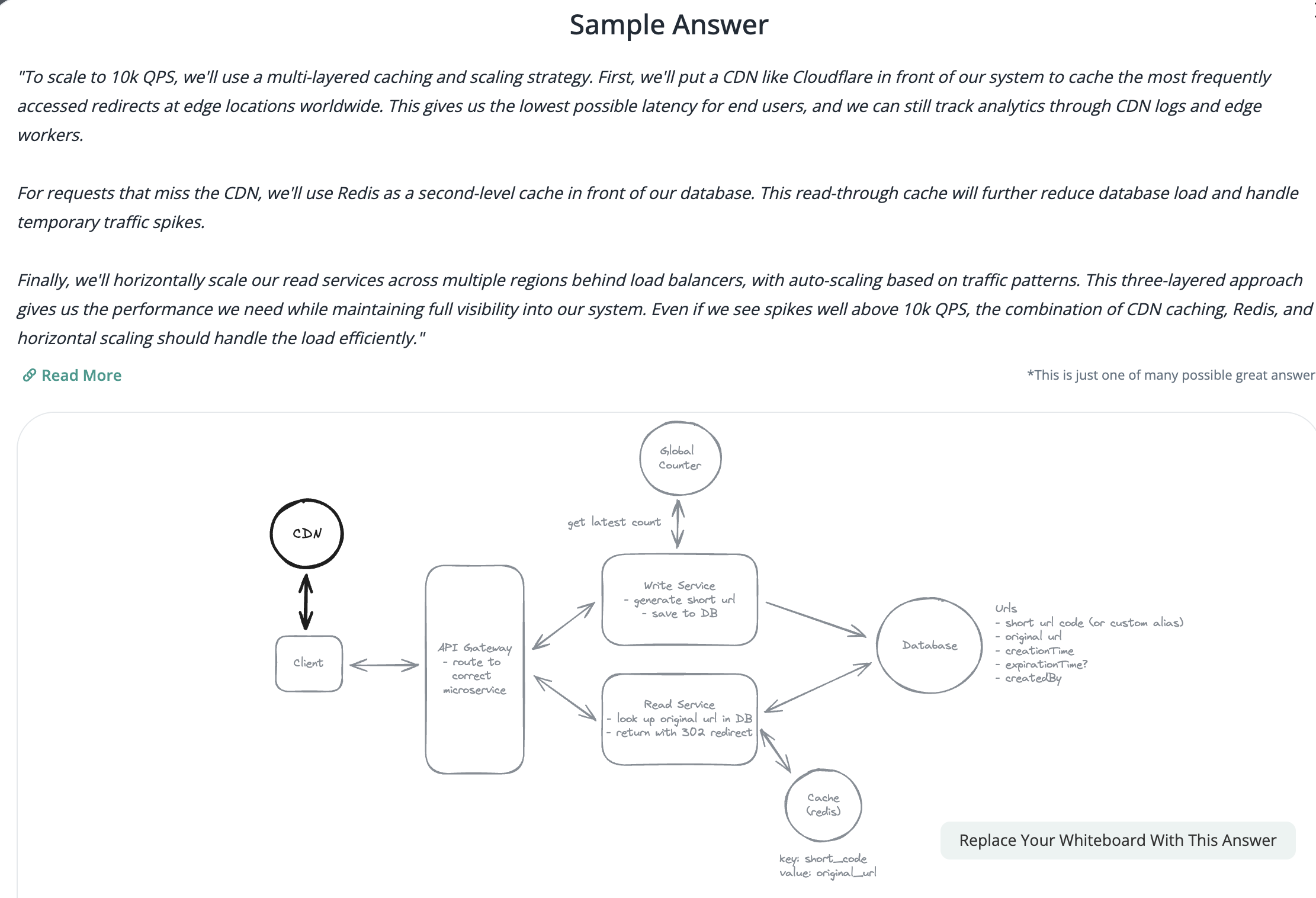

1. Bit-ly

-

Applying CQRS seperate read-write pattern.

-

Using auto increment Redis + base62 [A-Z] [a-z] [0-9] hasing pattern.

-

Centralize the distributed Redis Counter -> Make sure the url-id is unique across multiple instances.

-

To achieve 10M rps, using multiple layer cache: CDN > Redis > Horizontal Scaling (Stateless).

-

Algorithm of Hashing

- Hex: [0–9] [a–f]

- Base64: [A–Z] [a–z] [0–9] [+ /]

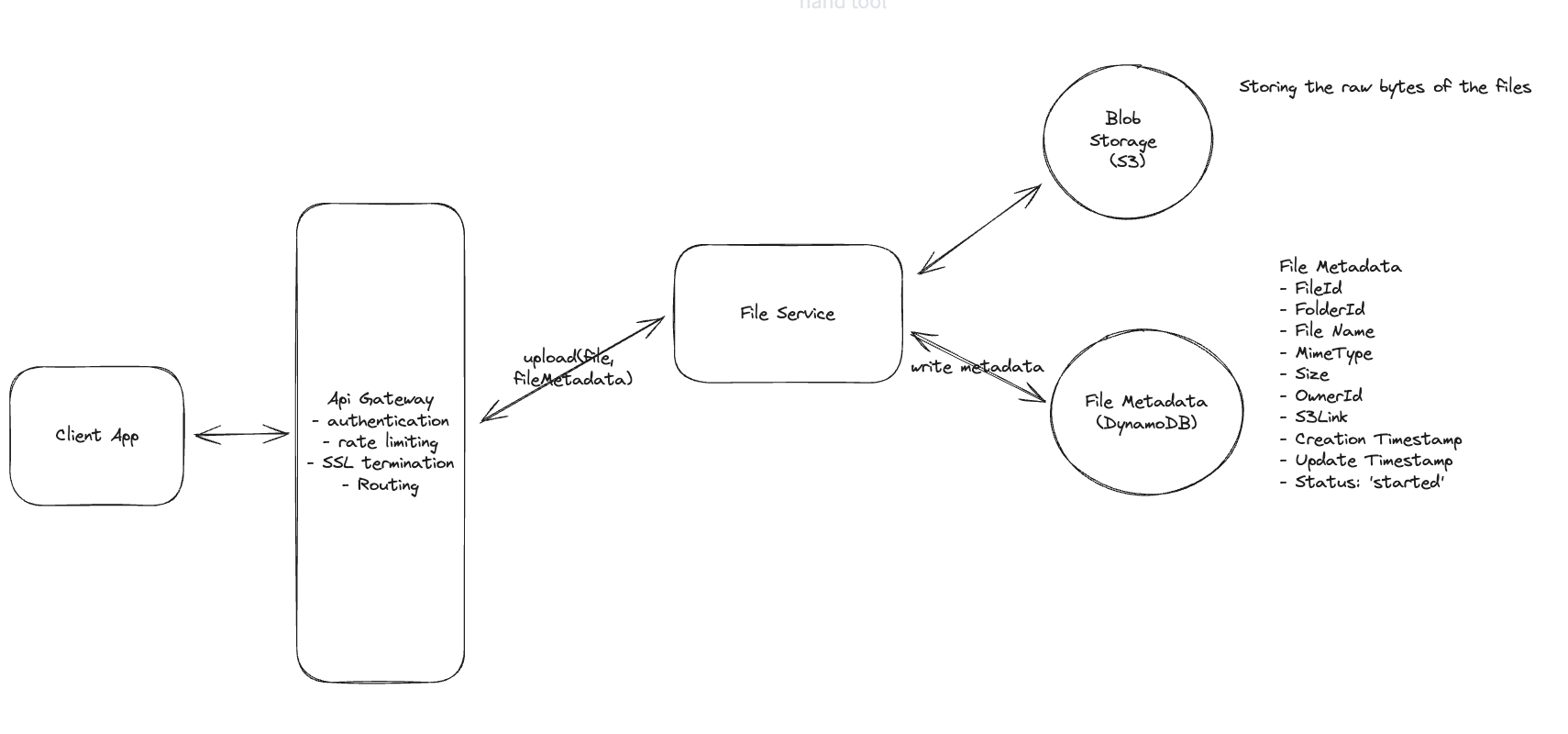

2. Dropbox File System

2.1. Upload File

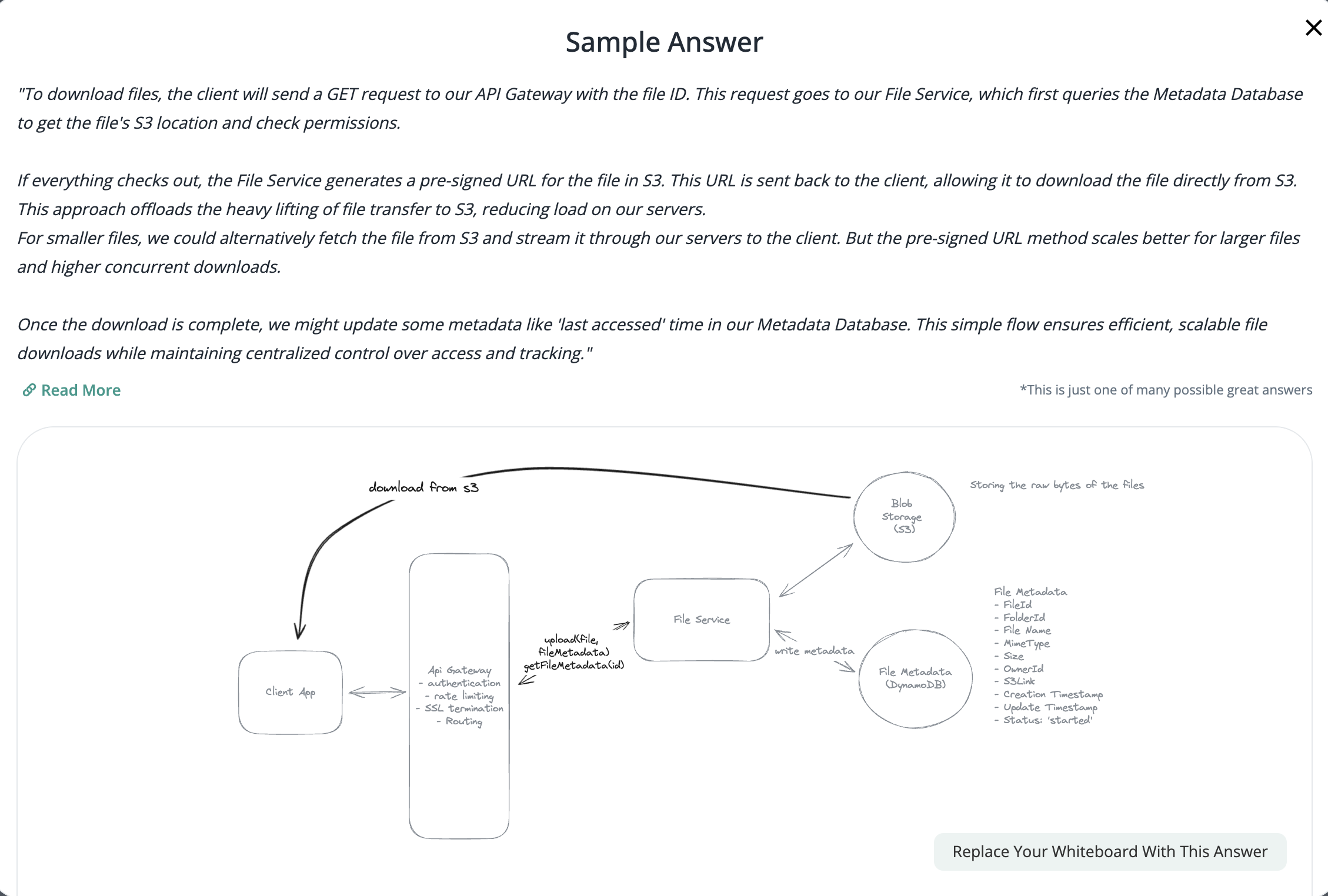

2.2. Download File

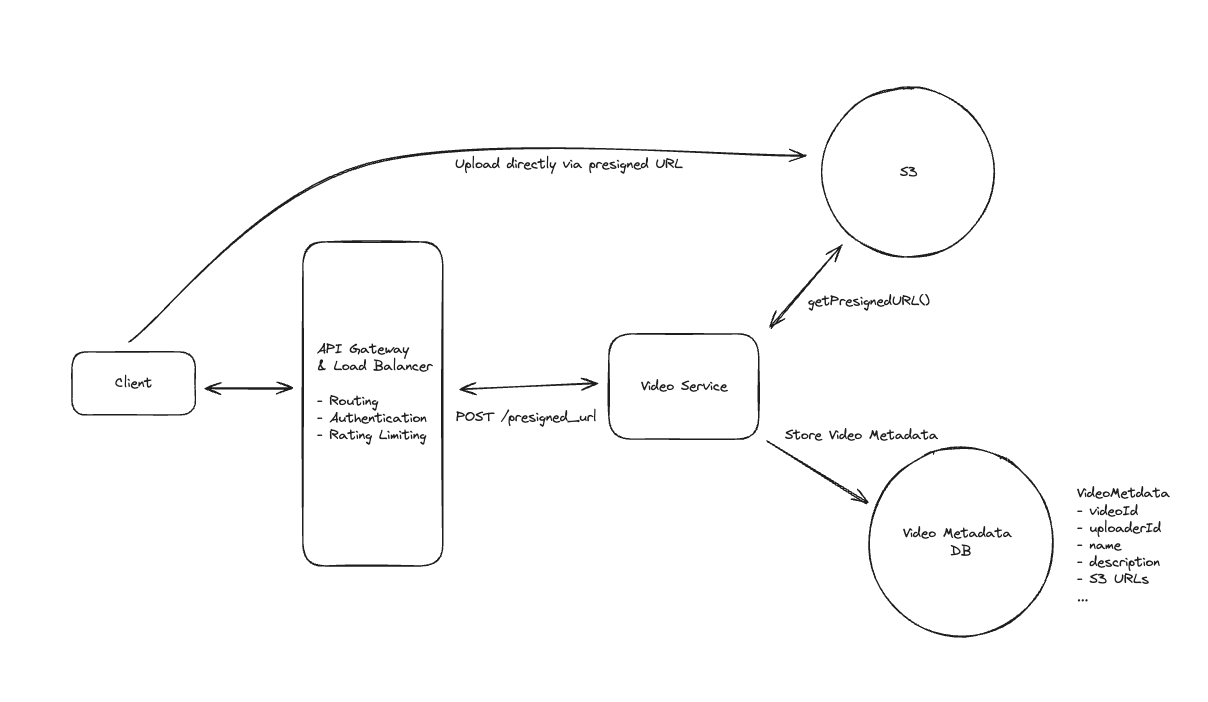

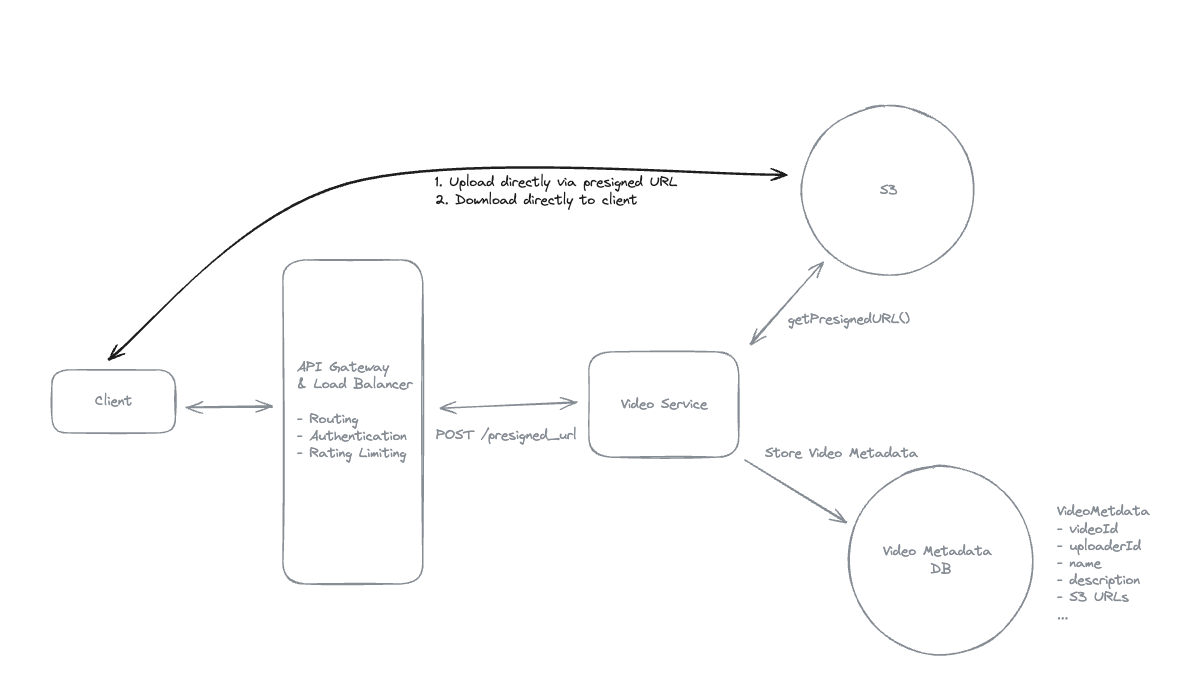

- Pre-signed URL: We need pre-signed URLs to provide secure, temporary access to private resources in a time-range without exposing credentials or making the resource publicly accessible.

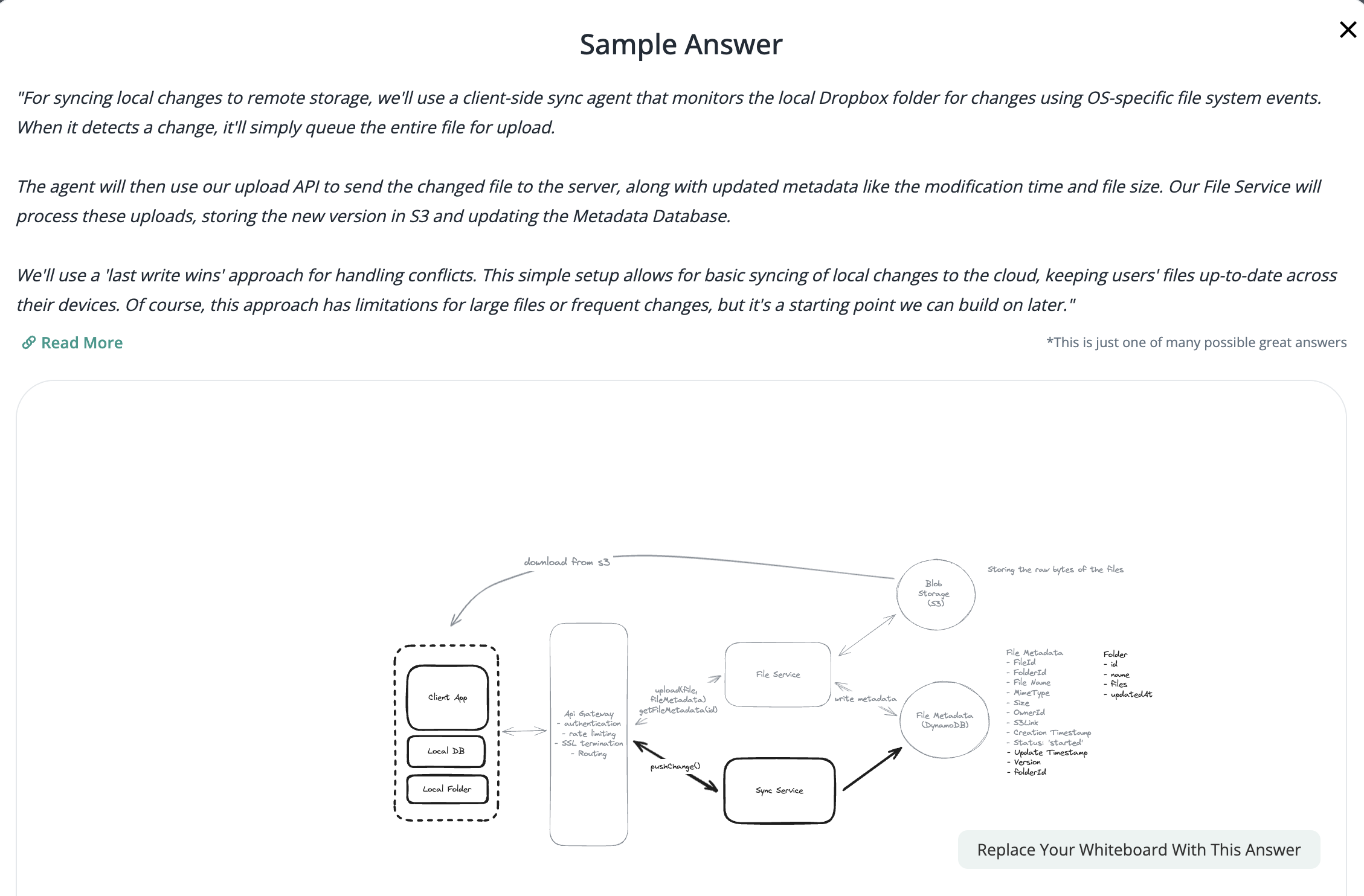

2.3. Sync local file and remote change in cloud

-

Using client-side sync agent + ‘Last write win’ to handle conflicts => sync update metadata to DynamoDB.

-

Using 2 services:

-

File Service: Upload file and create metadata.

-

Sync Service: Listen changes and update metadata.

-

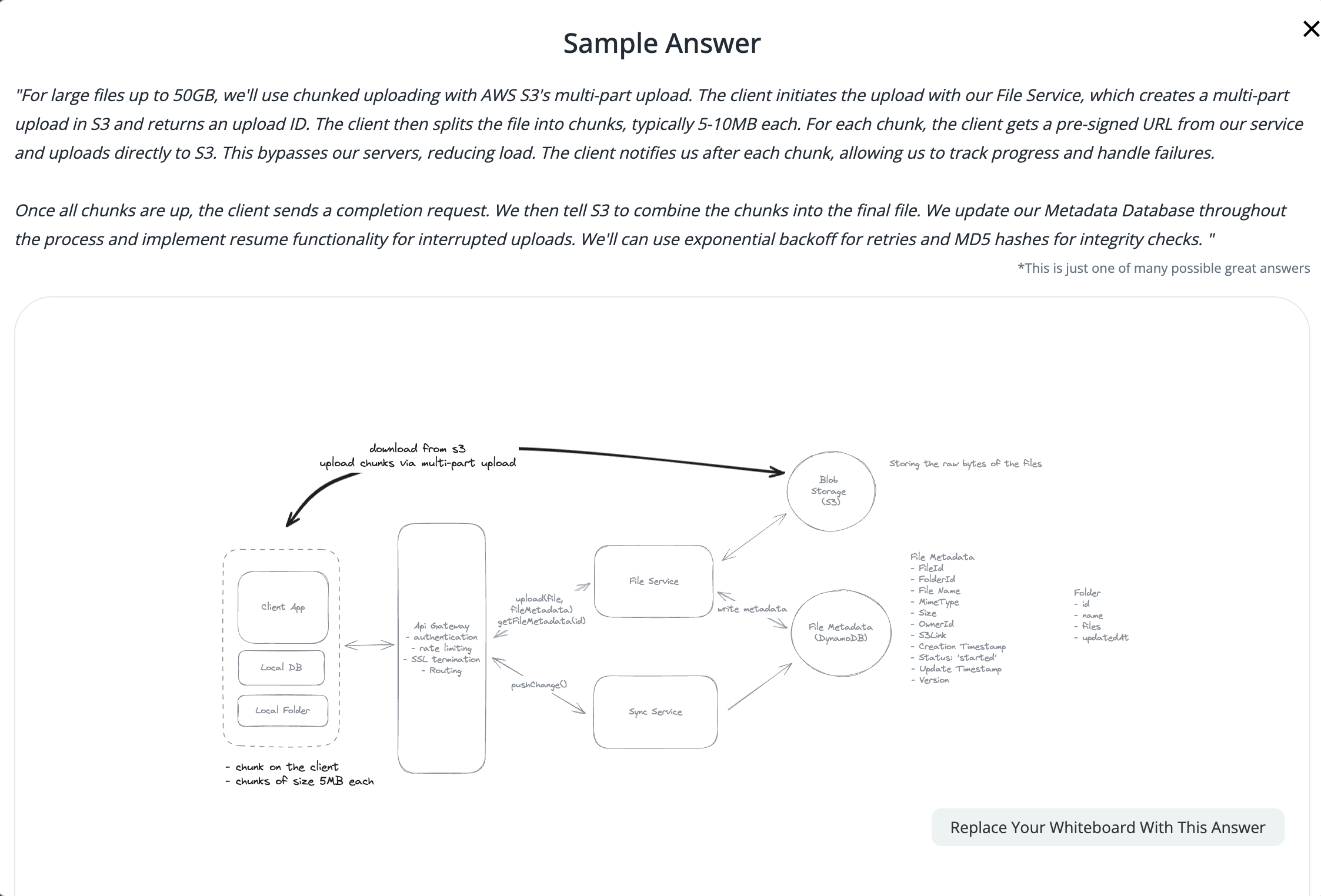

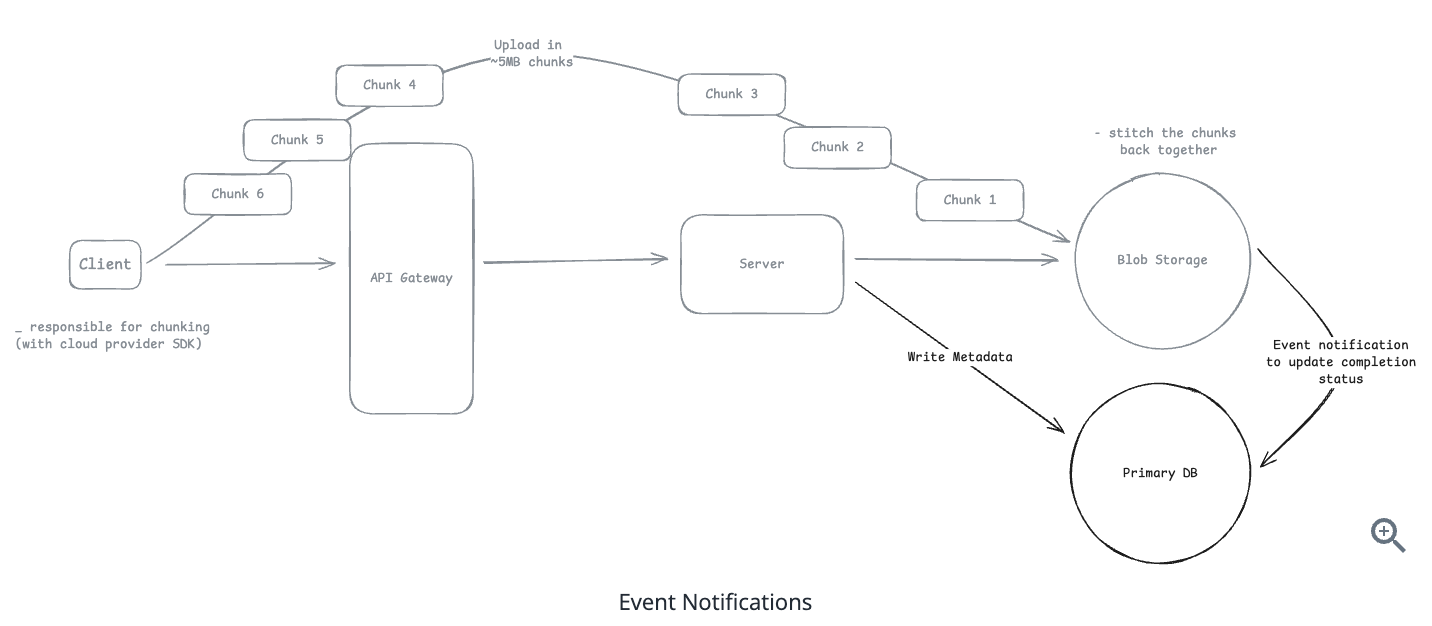

2.4. How will your system handle uploading large files (up to 50GB)

- Upload by each chunk, each chunk has its pre-signed url.

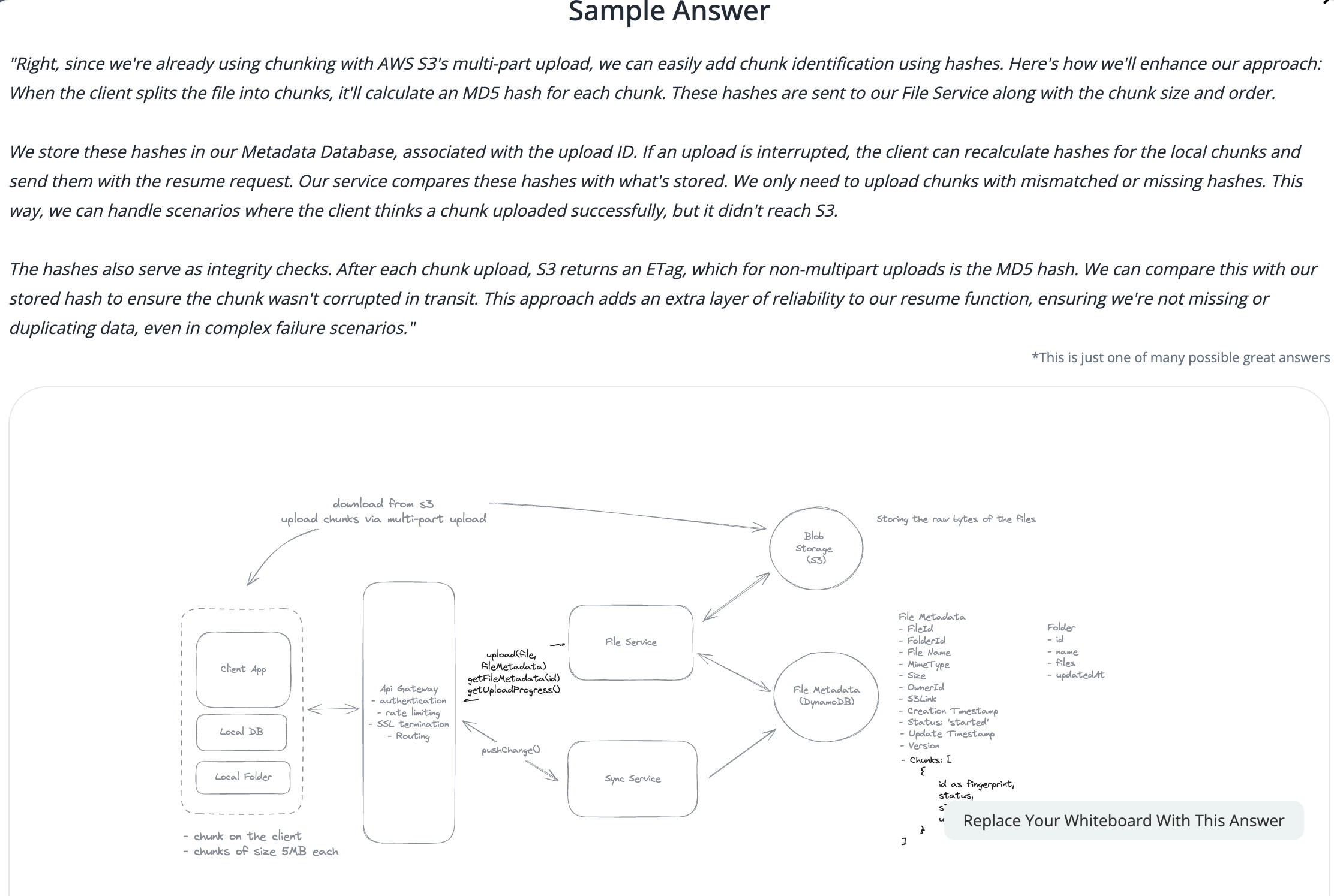

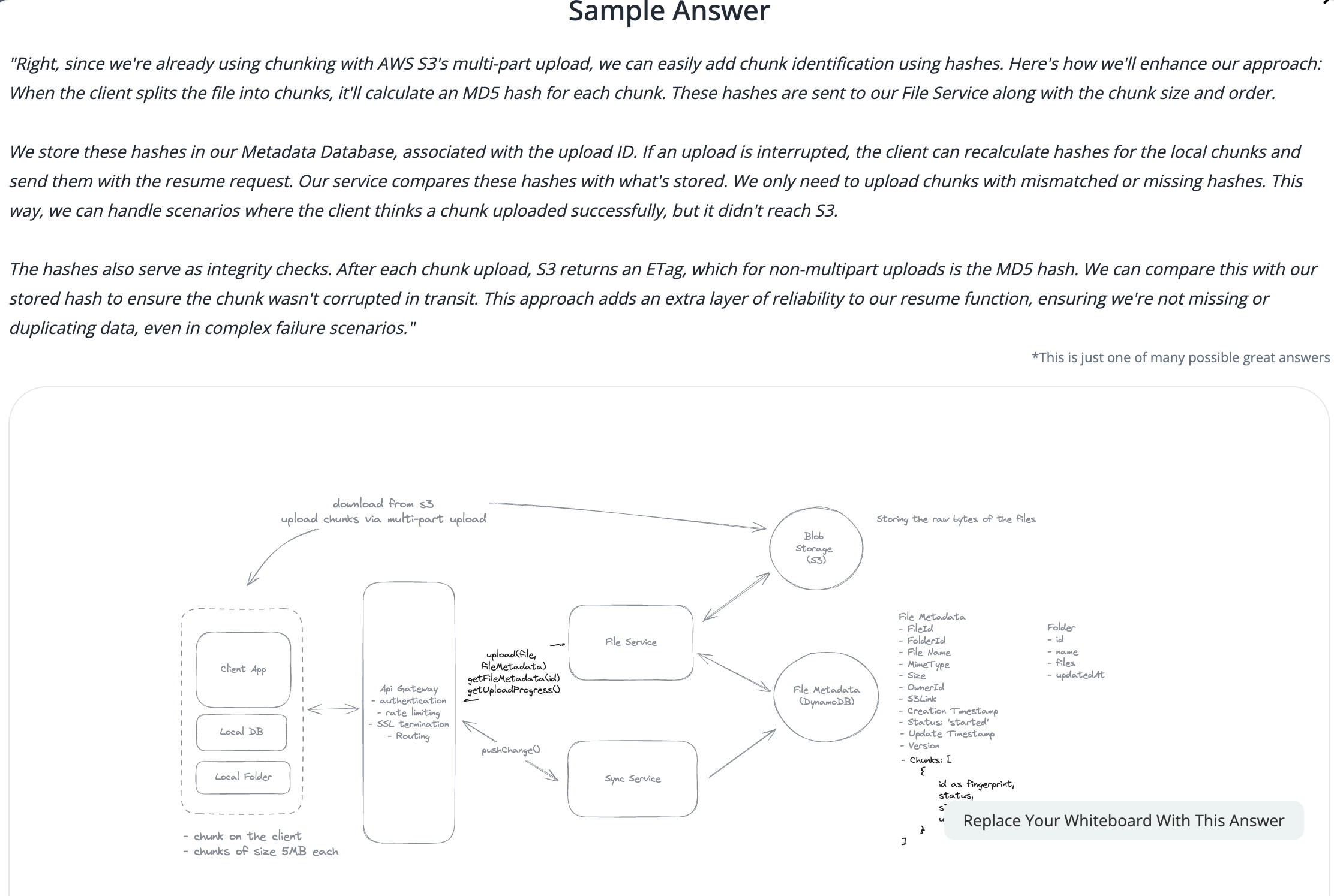

2.5. If you have a network interruptions, how it resume to upload the image but not start from scratch

2.6. How to sync file faster by reducing bandwidth

- Only sync the modified chunks, compress images and videos before transfer.

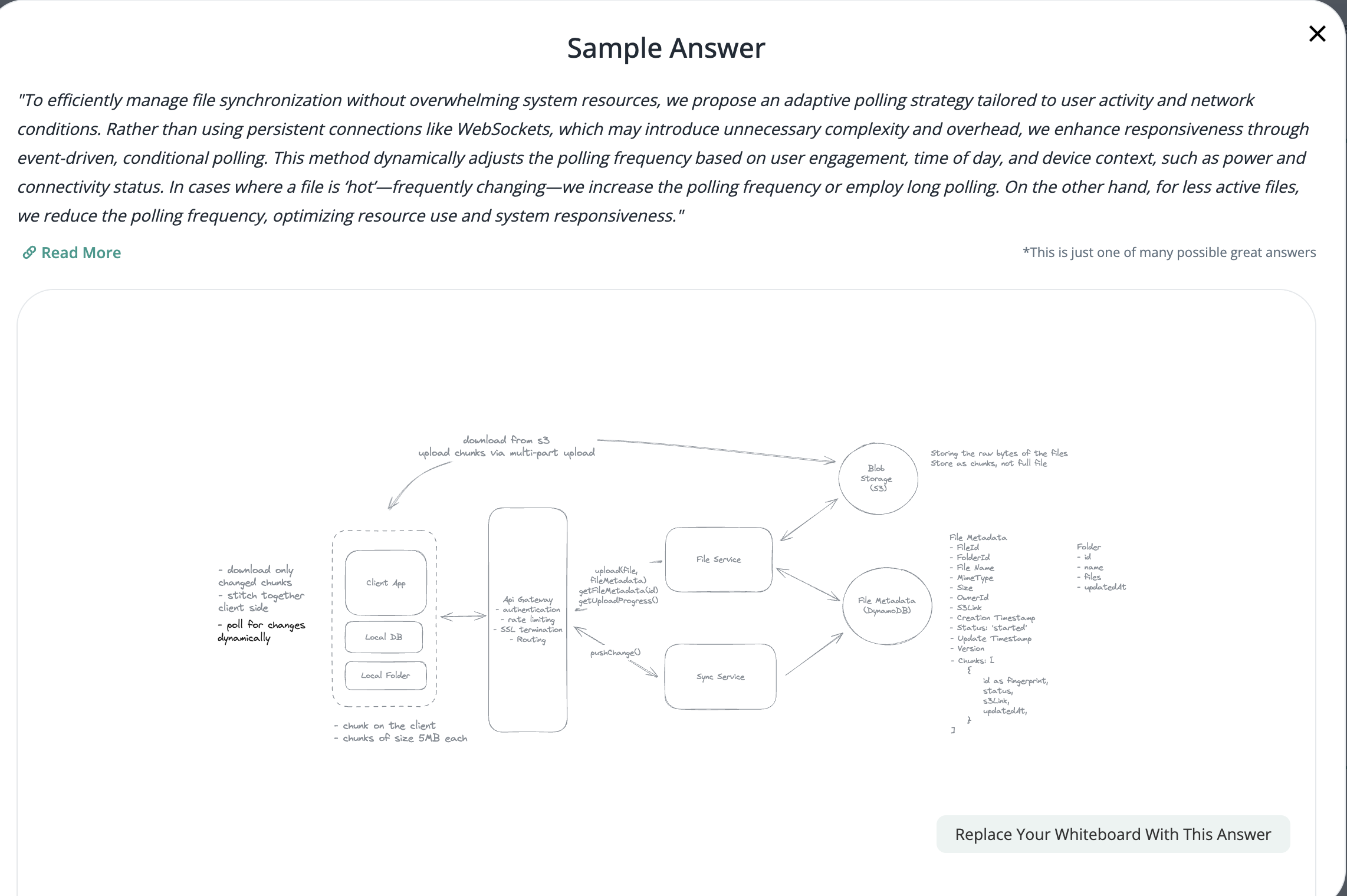

2.7. How can we minimize the time required to detect that a remote file has changed while not overloading the system with getChanges() requests?

- Using Polling Strategy instead of using WebSocket.

2.8. Notes:

-

Web Server and Browser prevent large file in requests (2GB request limits).

-

In file storage system, we need to prioritize Availability => User can access the file although network condition => Inconsistent Data is acceptable.

-

Compressing only work when: Time Transfer > Time compressing => If the image is already compressing, if you continue to compress it => It is counterproductive.

-

How to content-based file deduplication => Cryptographic fingerprinting (Hashing) => filenames + location.

-

We can use parallel chunking technique.

-

When the file is already upload, S3 trigger events => SQS => Amazon Dynamo DB to update status of the file to Completed.

-

Hybrid client-side for real-time update:

- Web Socket: active file.

- Pooling: in-active file.

-

‘Last Write Win’ improve the eventually consistency.

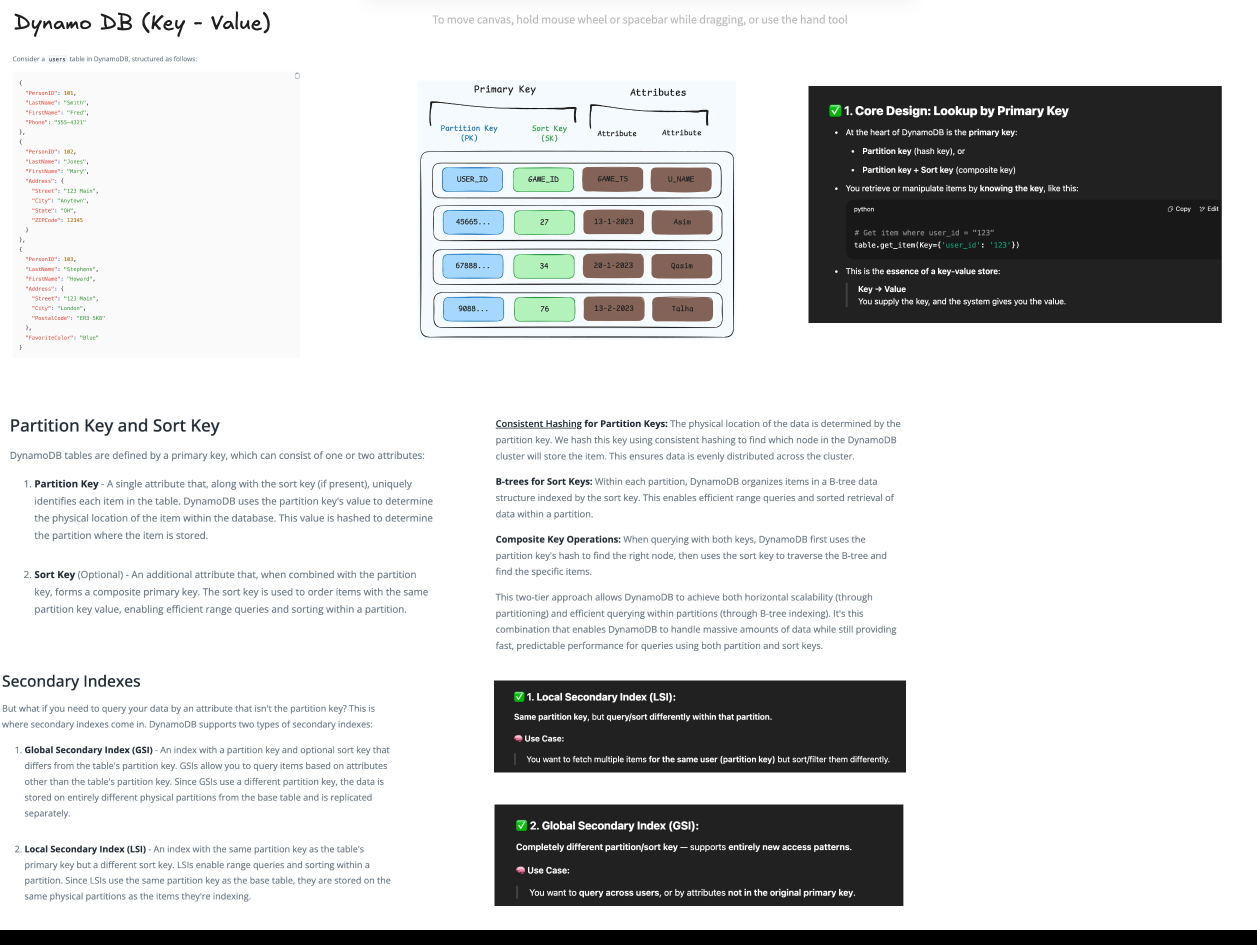

3. NoSQL Design

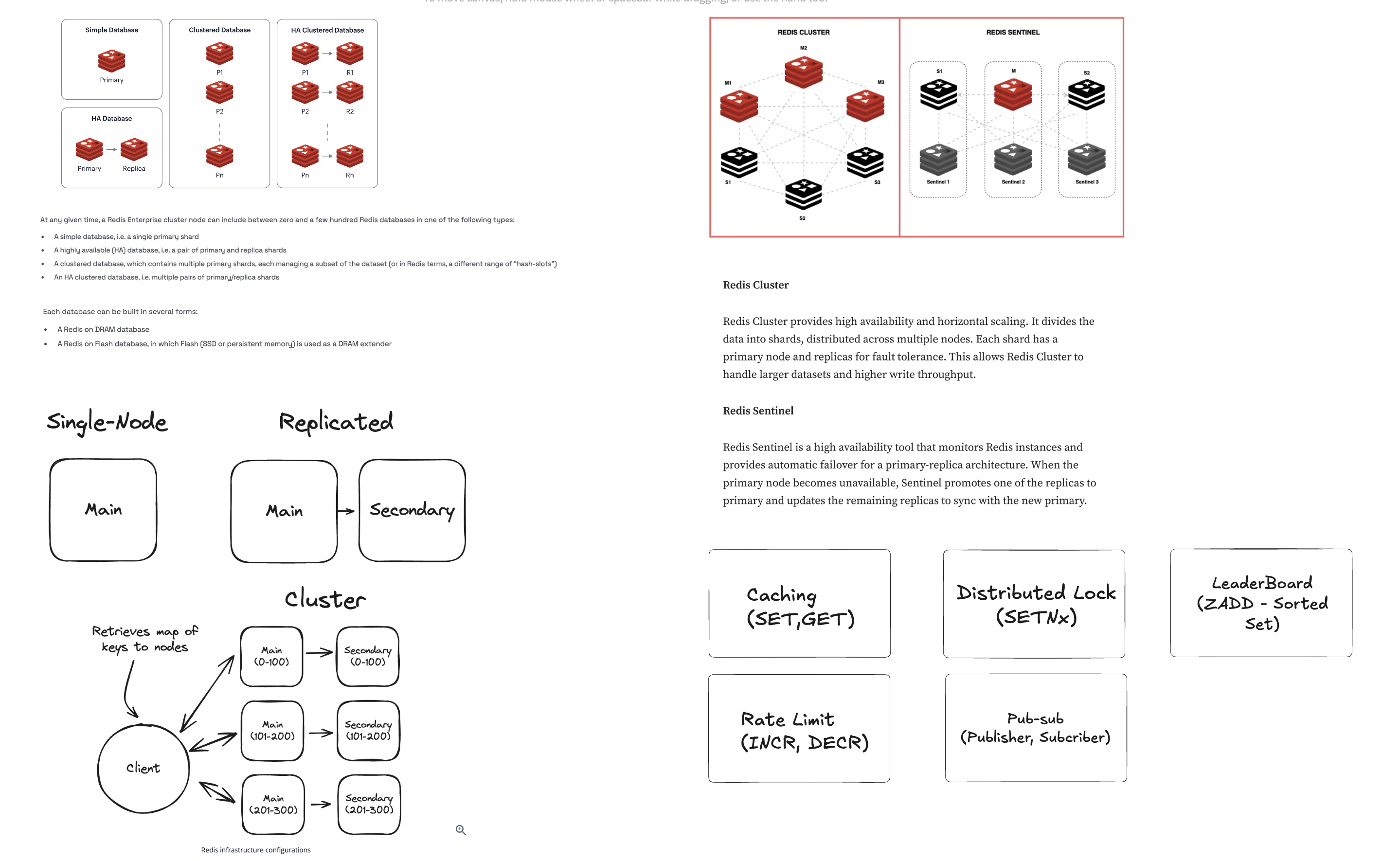

3.1. Redis

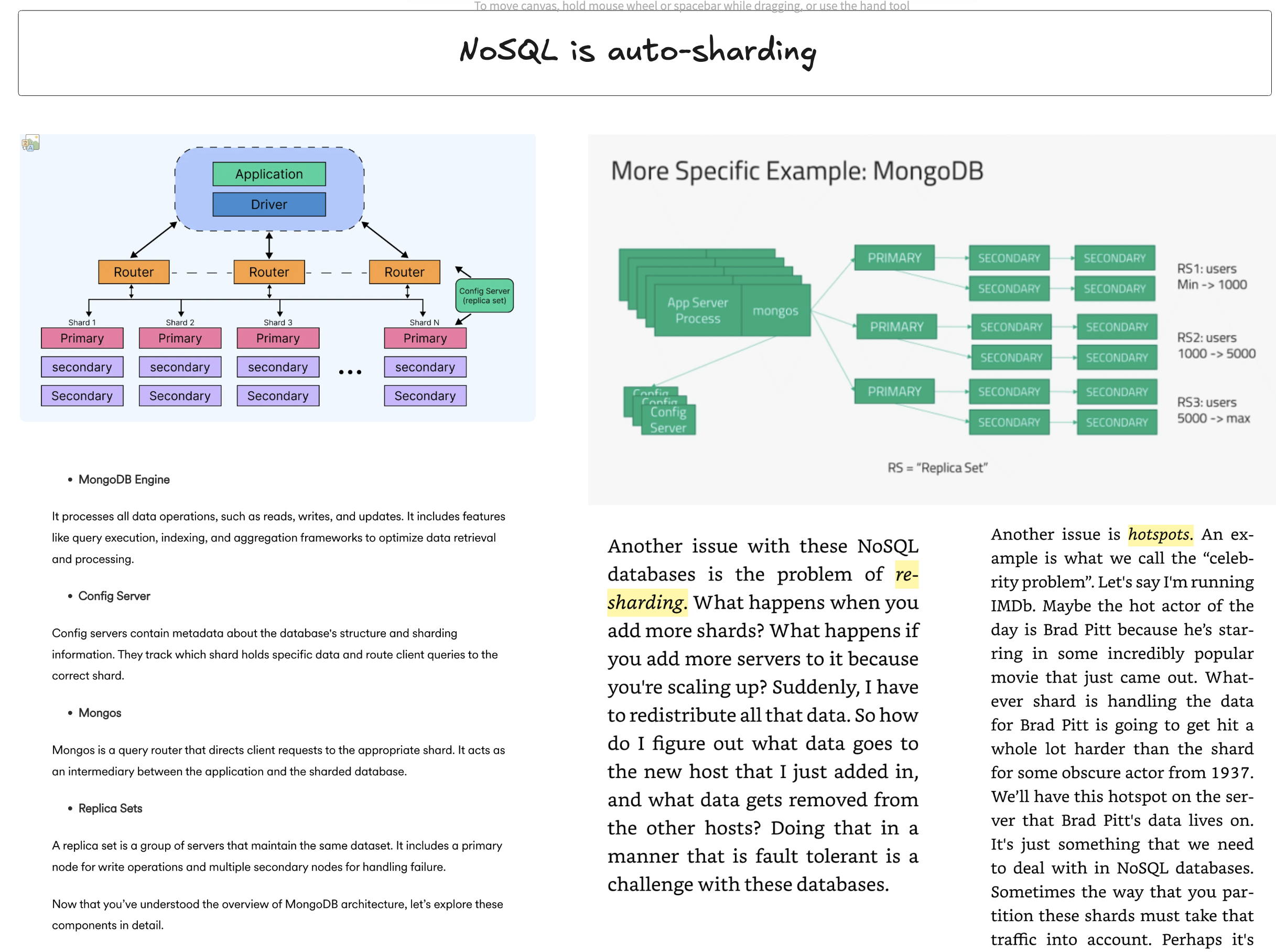

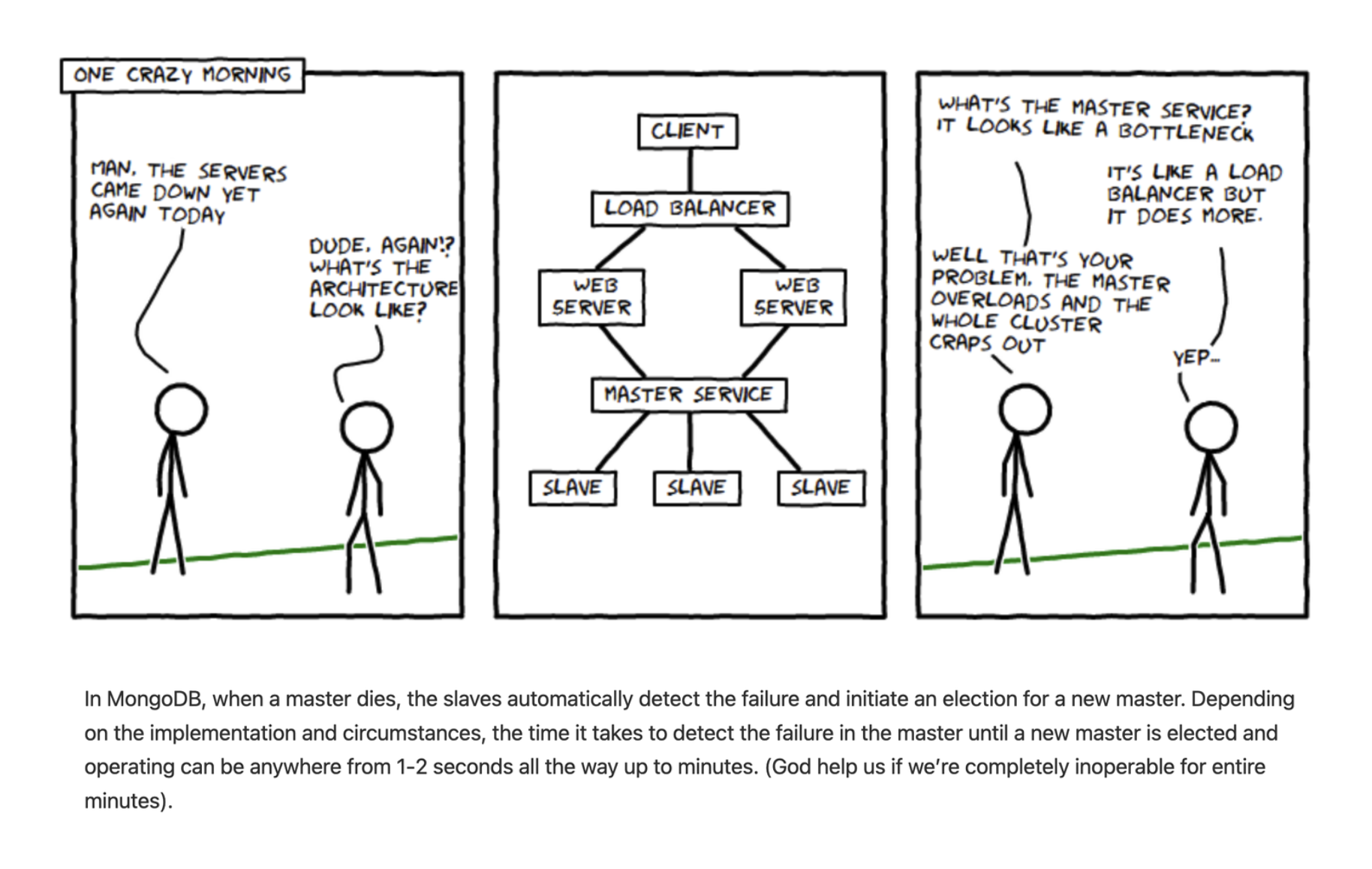

3.2. MongoDB

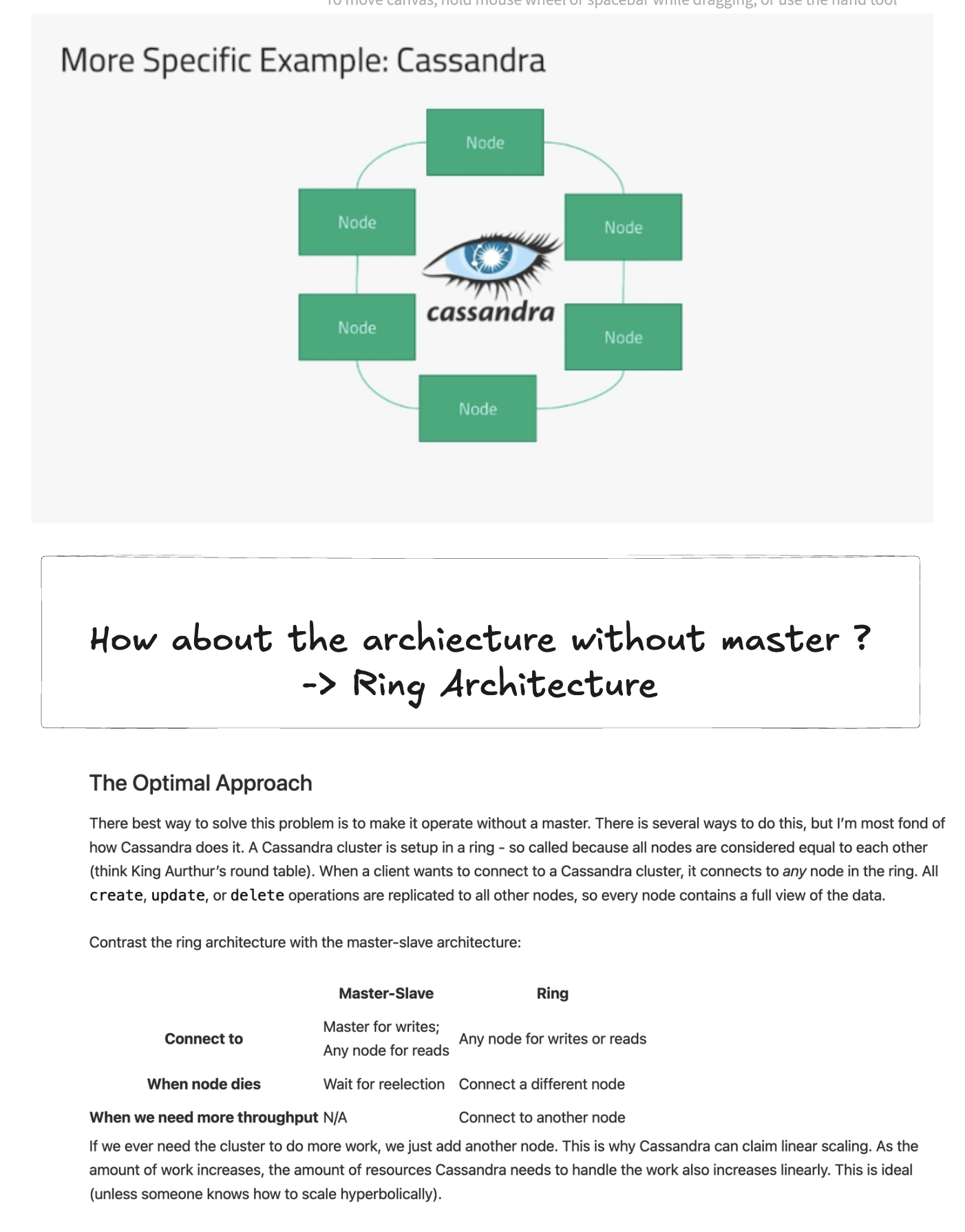

3.3. Cassandra

3.4. DynamoDB

3.5. Lock, concurrency, index, partition DBMS: Redis, MongoDB, Cassandra, DynamoDB, SQL

4. SQL Design

4.1. Lock Database

-

Khi select need to WHERE from ‘index’ => Không là nó scan DB.

-

Khi select * => auto lock bảng => Write vào không được.

When SELECT 1 billions records => What is lock db ?

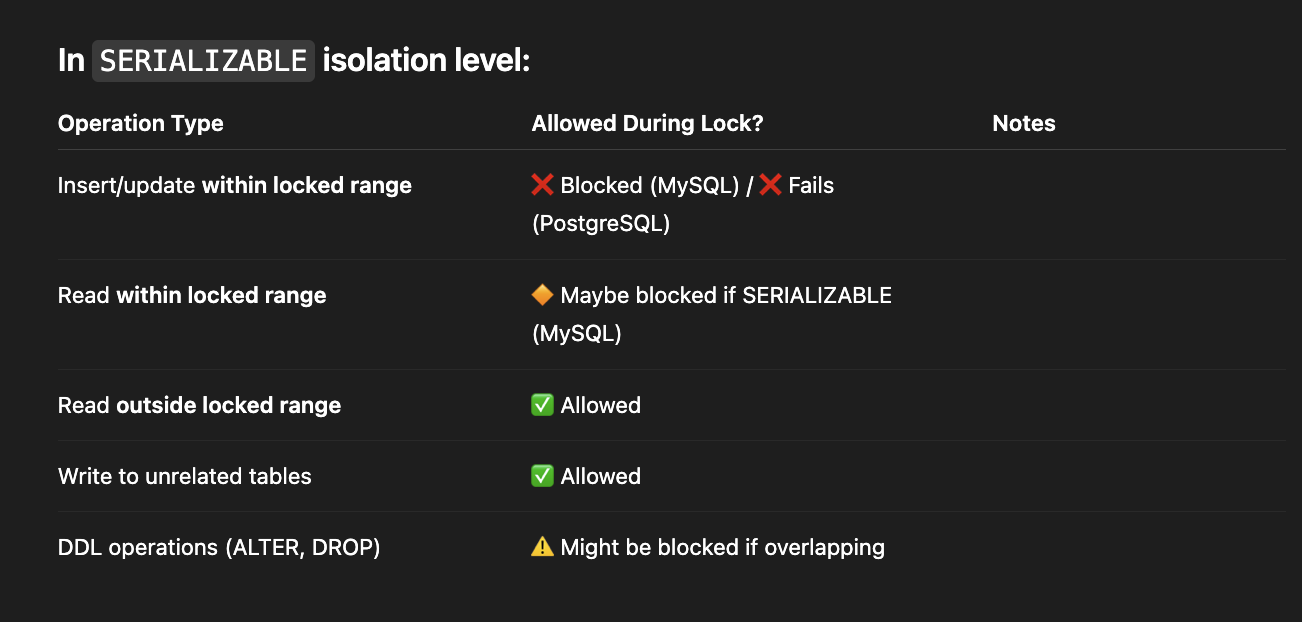

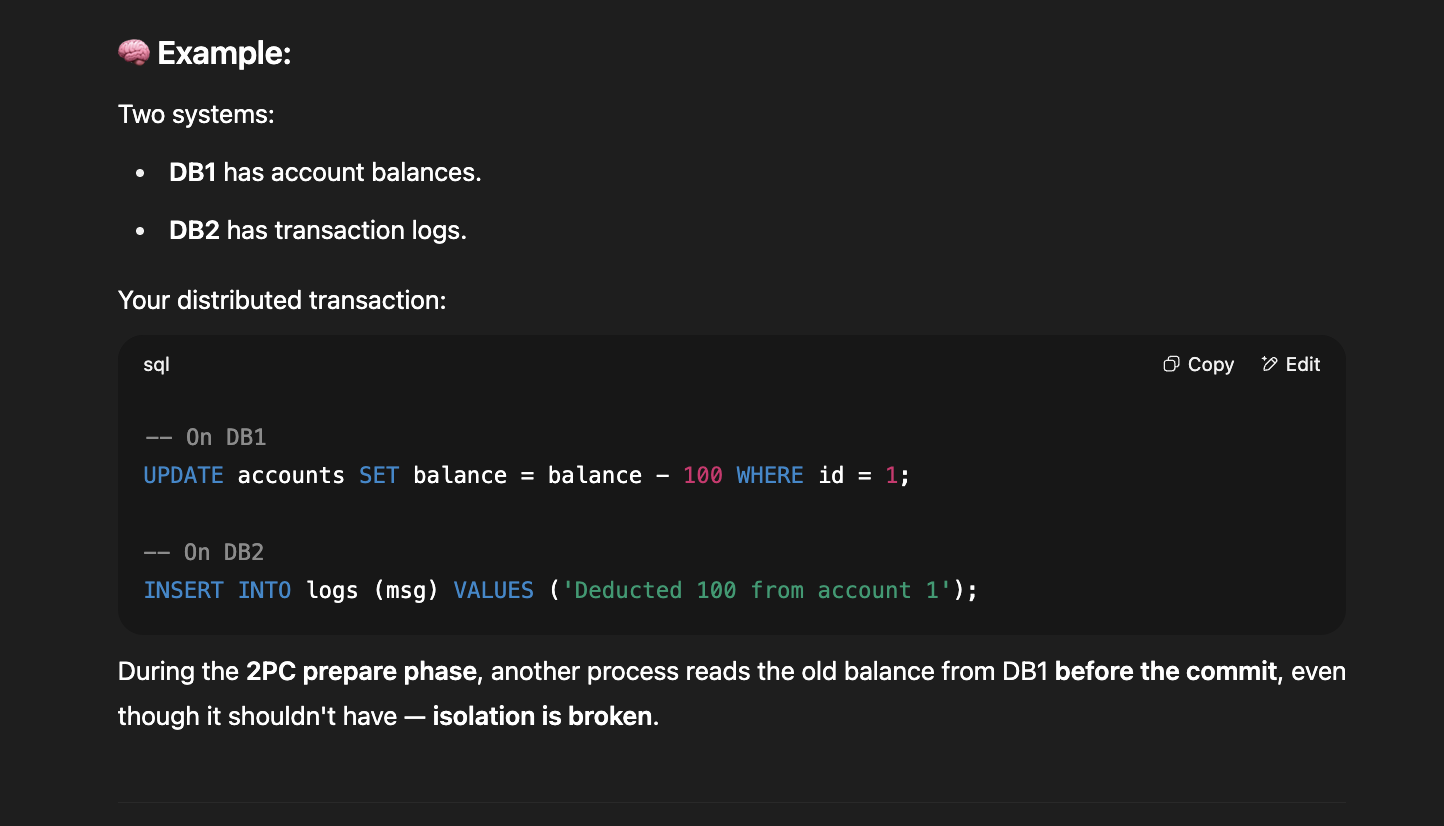

4.2. Isolation Lock happen in ACID

-

In ACID, Isolation Level in ACID

-

3 types of READ:

-

Dirty Read: Read without commit.

-

Non-repeatable Read: In the same trasaction, read the same row 2 times => Return 2 different value because other transaction has commited it.

-

Phanrom Read: Read same time with different numbers of row.

-

-

4 Isolation of Level:

-

Read Uncommited

-

Read Commited.

-

Repeatable Read.

-

Seralizable

-

4.3. Hadoop (File System)

-

Hadoop: Distributed File System from Data Warehouse.

-

Data Warehouse: Using Hive to query Hadoop.

-

Data Lakehouse: versioning, metadata, only has ACID, still distributed => Keep ACID by using distributed patterns: 2-Phrase-Commit, transaction logs, slower than warehouse.

-

DBMS: File System + Query Engine + Metadata.

4.4. Hive:

- Hive: Store metadata for Hadoop (File System)

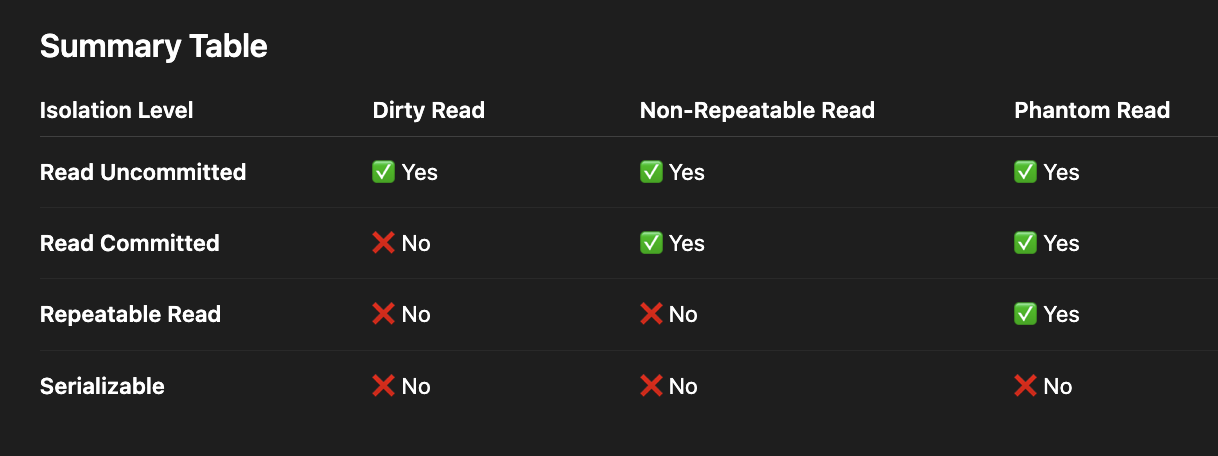

4.5. Two-phrase commit

- Failed in ISOLATION level.

4.6. Airflow

-

Using cronjob to schedule job to query data.

-

CI/CD of data.

4.7. Flink & Spark

-

Compute Engine

-

Spark: batch processing, read batch from Kafka => Count enough records to use.

-

Flink: Read real-time, read data from Kafka => Compute to predict the fraud detection real-time, costly and less to use.

-

Depend on jobs => write a cron job with Spark, Flink => DE write cronjob.

4.8. OLAP

-

Using query engine to read data in data warehouse.

-

PostgreSQL

-

Redshift

-

Lakehouse -> trino

4.9. Superset

-

Superset -> Trino -> Query data in lakehouse (warehouse) -> Red-shift.

-

Warehouse: SQL

-

Lakehouse.

-

Object: metadata -> ETL -> Read from S3 -> Tool make it (abstract from sql -> query data in engine).

-

Data will store in file and partitioning.

-

Everything is a distributed file system -> Partition -> Abstract by sql query in tool.

4.10. Write-Ahead Log

-

A Write-Ahead Log is a sequential log file that records every intended modification to the database before the actual data is written.

-

Use to store history action of DBMS => Use for rollback, migration when change data capture,…

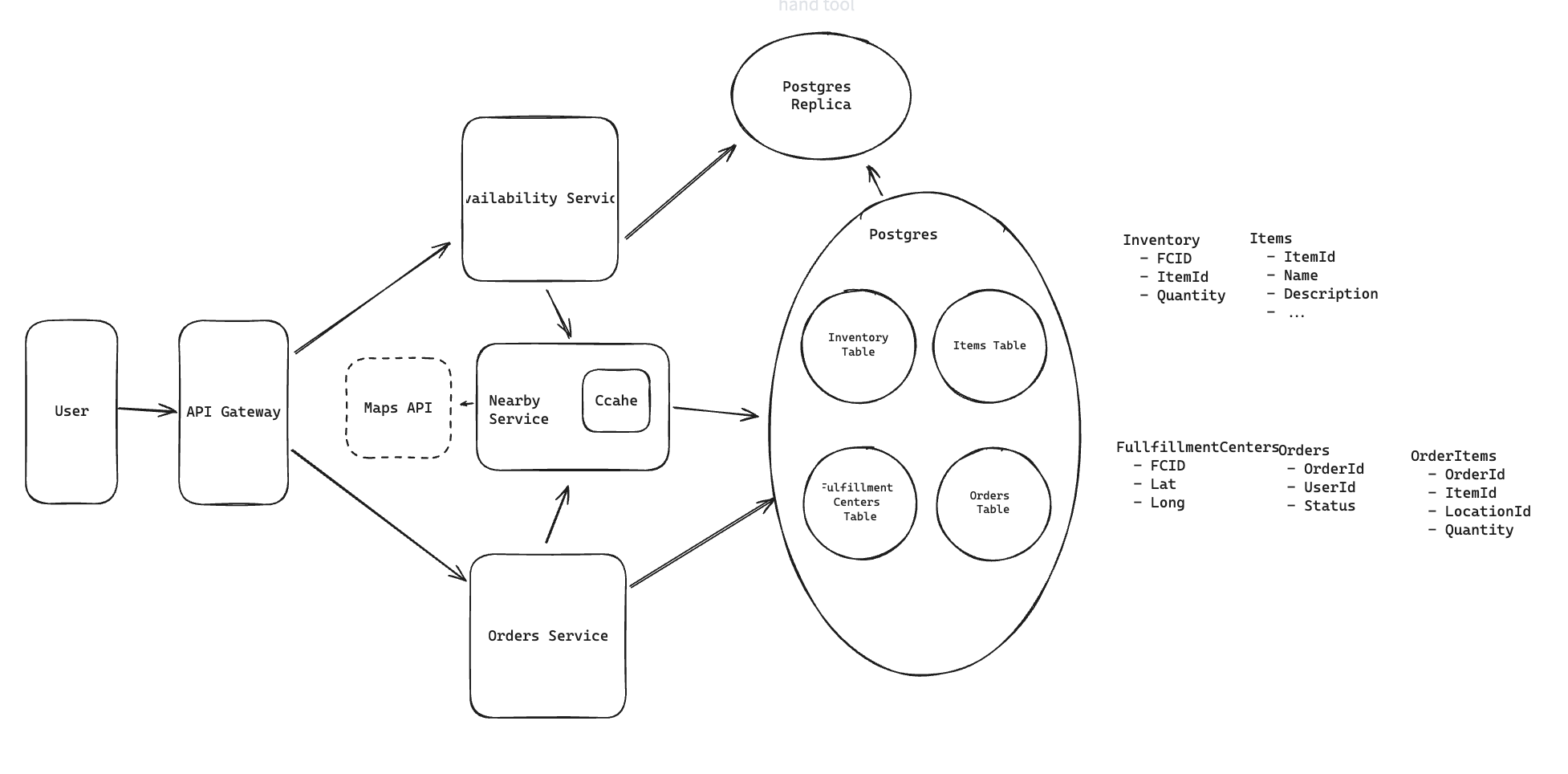

5. Design a Local Delivery Service like Gopuff

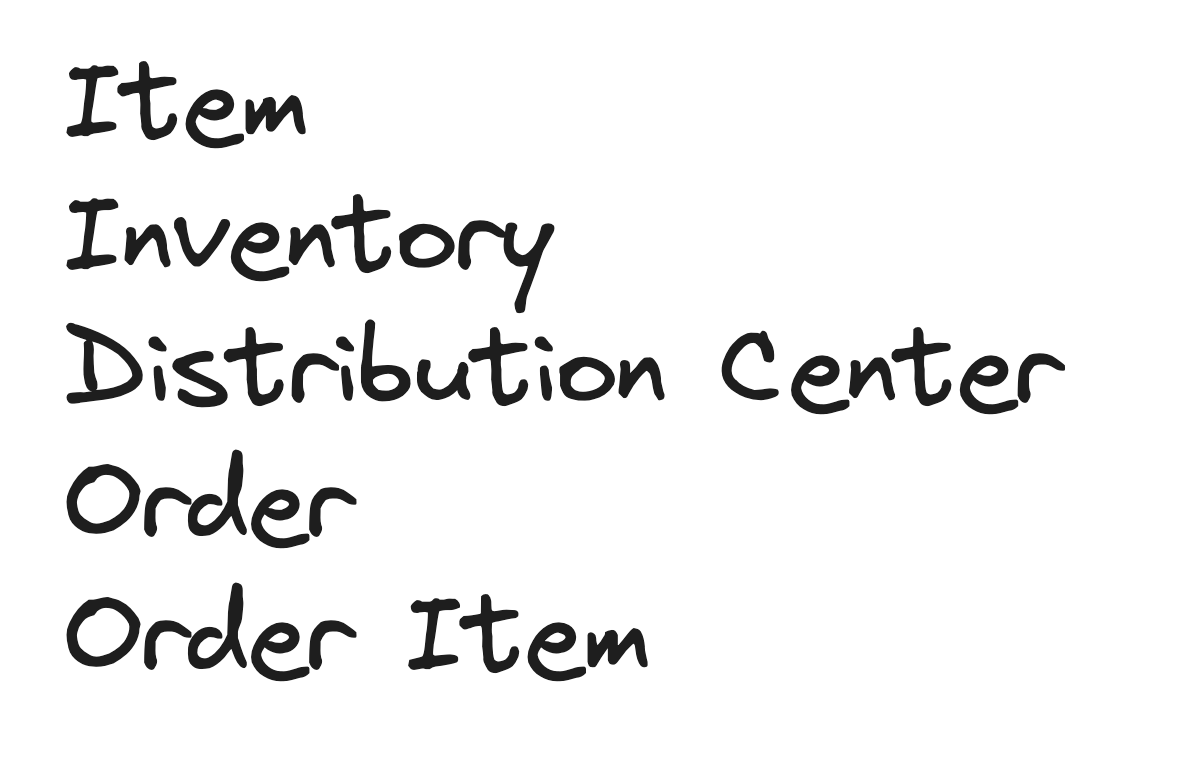

5.1. Entities:

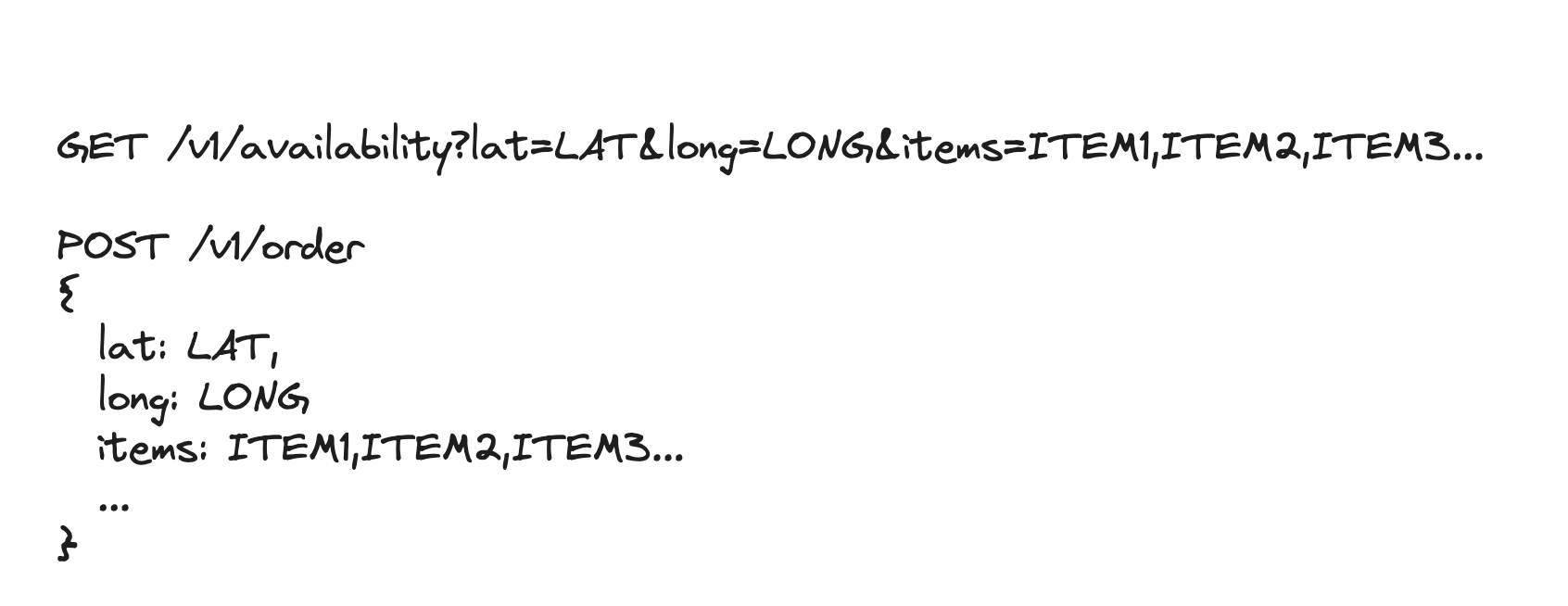

5.2. API Design

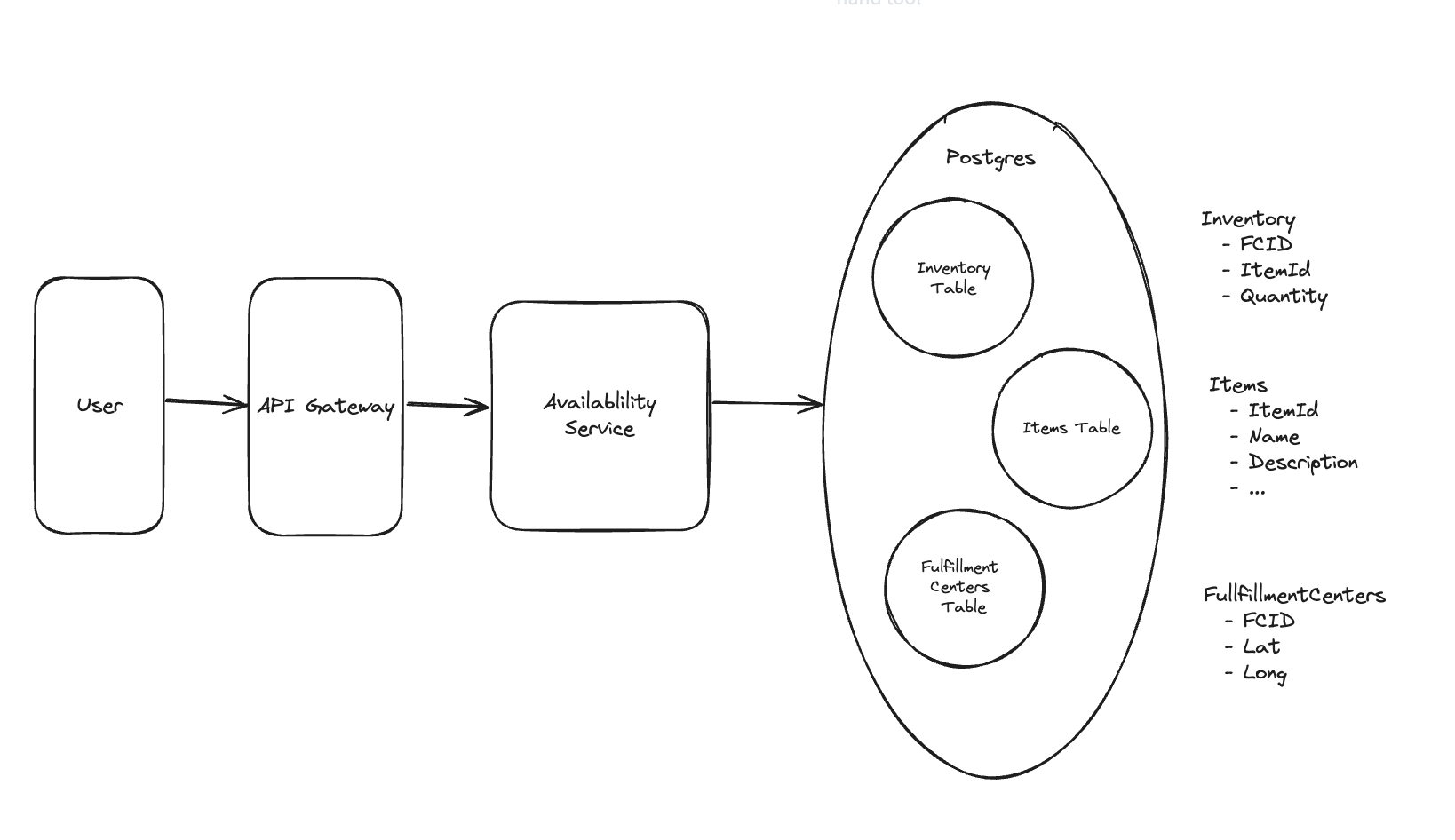

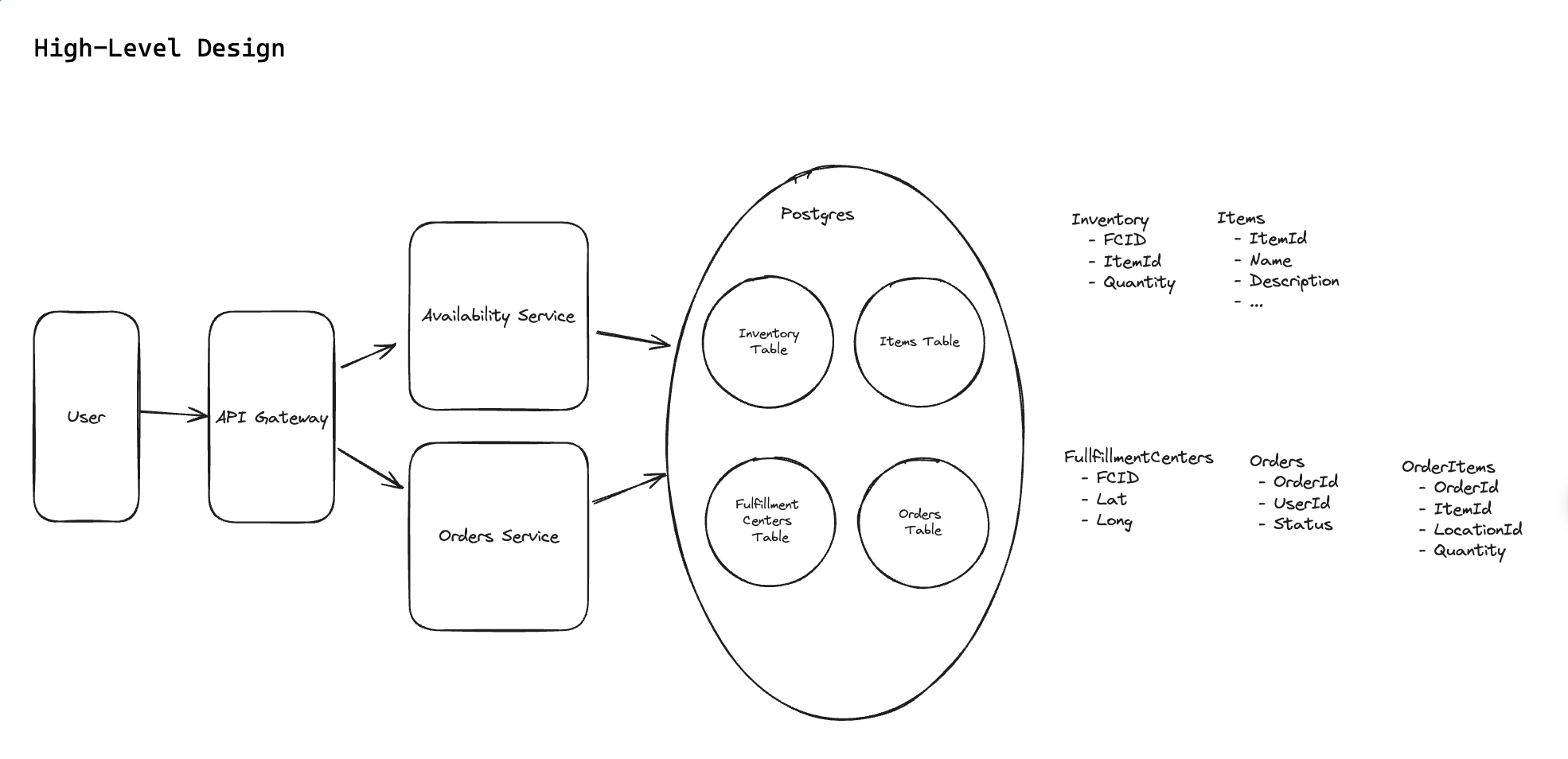

5.3. How will customers be able to query the availability of items within a fixed distance (e.g. 30 miles)?

5.4. How can we extend the design so that we only return items which can be delivered within 1 hour?

5.5. How can we ensure users cannot order items which are unavailable? How do we avoid race conditions?

“To avoid both race conditions and customers ordering out of stock inventory, we’ll use a transaction on our Postgres database. In this transaction we’ll:

(1) Check the inventory items are still available at the locations we determined when we checked availability. (2) Decrement the inventory counts at those locations. (3) Create a new Order record with the associated items.”

5.6. How can we ensure availability lookups are fast and available?

(1) We can use the prefix of the geohash of a location as the cache key so small changes in the location still hit the same fulfillment centers.

(2) Each nearby service instance can maintain a local cache of the fulfillment centers so that location search can be done without an external call. Since fulfillment centers aren’t changing often, we can make the cache TTL long.

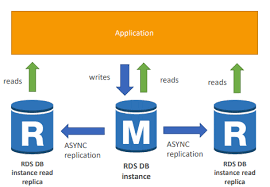

(3) We can replicate our Postgres instance and have the availability service read from replicas. This may mean availability lookups are slightly stale, but we’re ok with this.”

Idea: The location only store data for it, do not scan all table.

5.7. How do delivery systems efficiently aggregate inventory across multiple warehouse locations?

- Sum quantities from nearby warehouses

5.8. Travel time estimation services provide more accurate delivery zones than simple distance calculations

- Travel time services account for real-world factors like traffic, road conditions, and geographic barriers, providing more accurate delivery feasibility than straight-line distance calculations that ignore these constraints.

5.9. Which technique is MOST effective for preventing concurrent resource allocation conflicts?

- Atomic transactions.

5.10. Implement database read performance

-

Read replica: Use for master slave, write in master, read from slave. But replica can serve stale data.

-

Query Caching.

-

Index.

-

Write-ahead logging: keep track all actions of DBMS, use for migration data.

5.11. Which algorithm accounts for Earth’s curvature when calculating geographic distances?

- The Haversine formula.

5.12. Two-phase filtering

-

Two-phase filtering first applies cheap local filters (like simple distance calculations) to reduce the candidate set before making expensive external API calls (like travel time estimation).

-

Step 1: Pre-computed radius filtering

5.13. Paritition strategy

-

Round-robin distribution

-

Geographic sharding

-

Hash partitioning

-

Timestamp-based splitting

5.14. What happens when cached inventory data becomes stale after concurrent orders?

- Overselling inventory

5.15. Which isolation level BEST prevents phantom reads in concurrent transactions?

-

Two read query -> Call different numbers of rows.

-

Only serializable solve phantom read.

5.16. Eventual consistency and Partition Tolerance

-

Eventual consistency: Parts may show different data briefly, but will match soon.

-

Partition Tolerance: Partition tolerance means a system can work even when some parts can’t talk to each other => Using Data Replication or Local Cache.

5.17. Reduce external service

-

Batch Processing.

-

Result Caching.

-

Local pre-filtering.

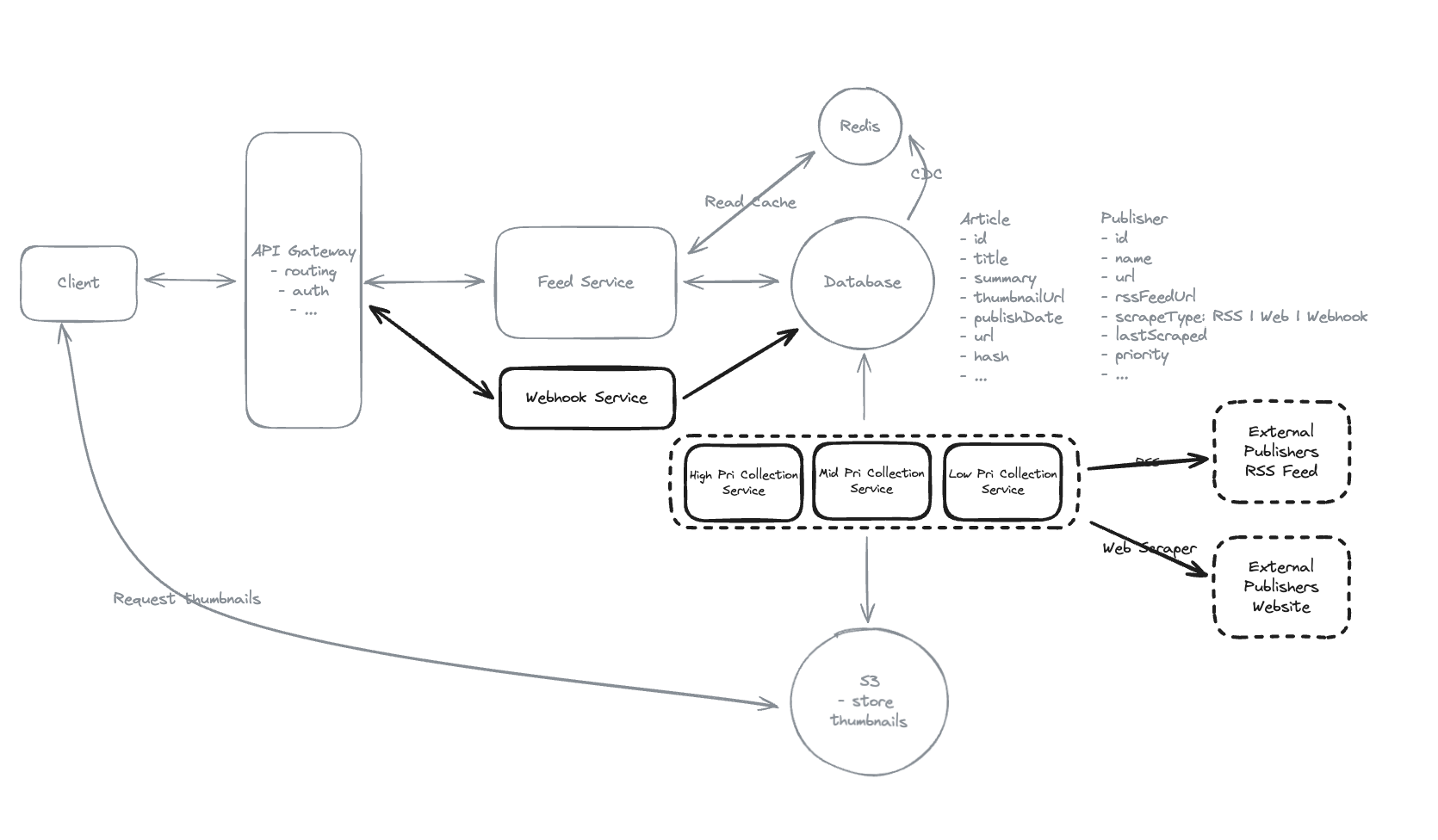

6. Design a News Aggregator (Example dev.to)

6.1. Non-requirements

6.2. Entities

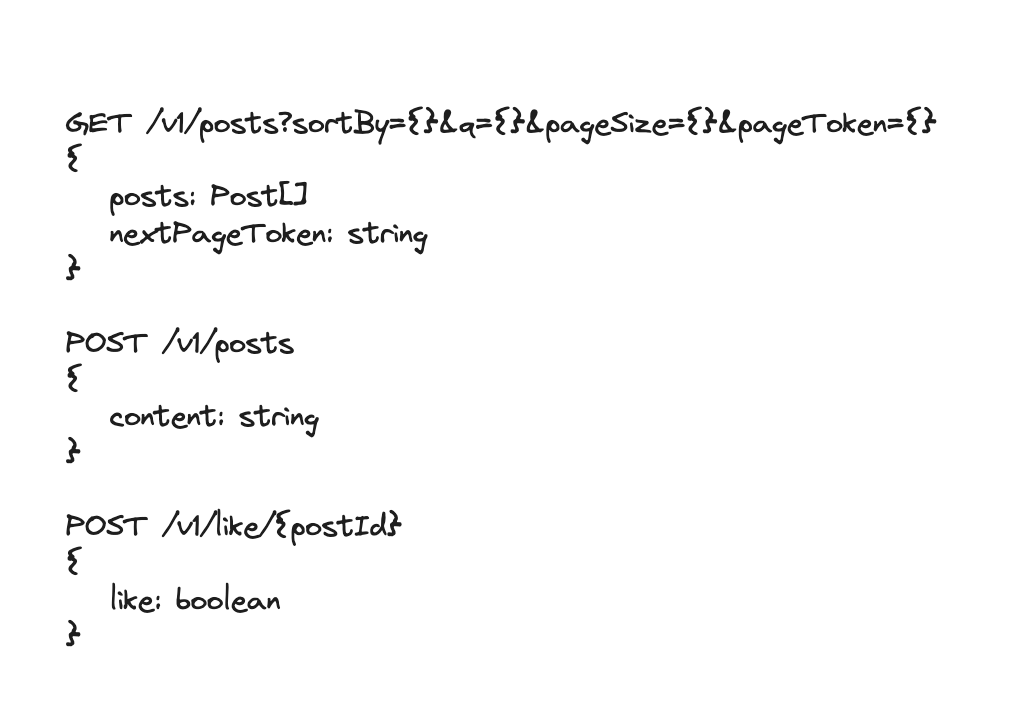

6.3. API Design

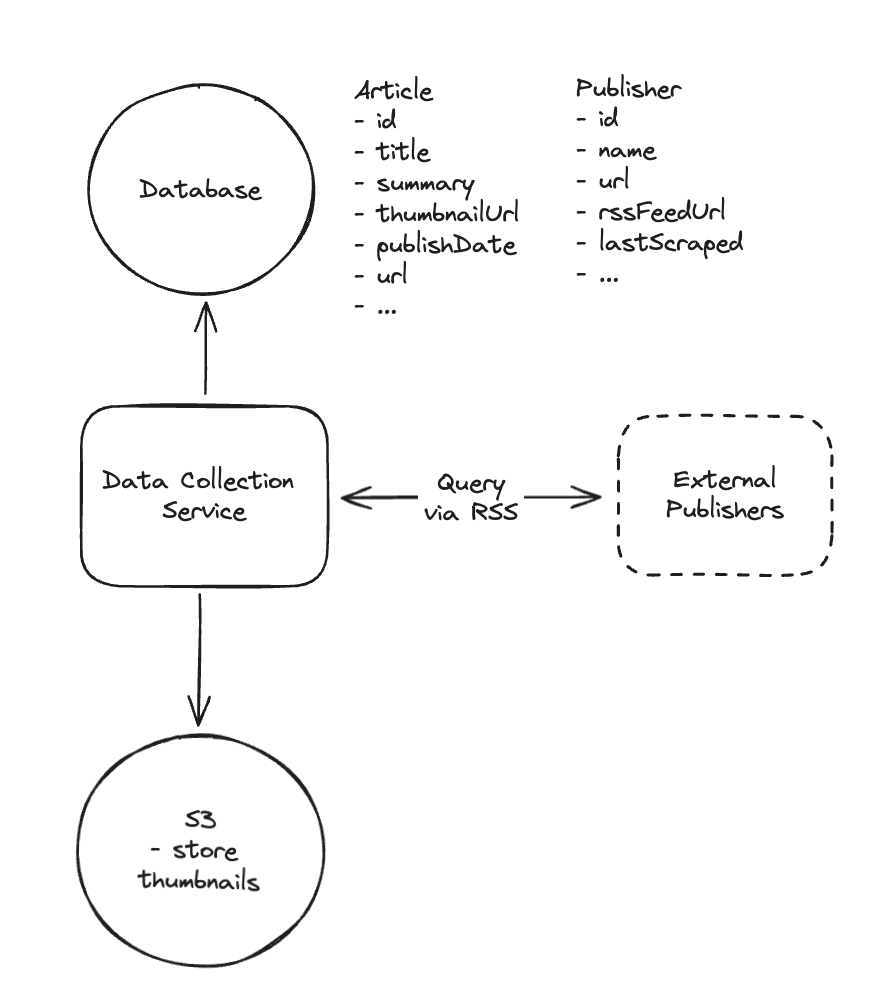

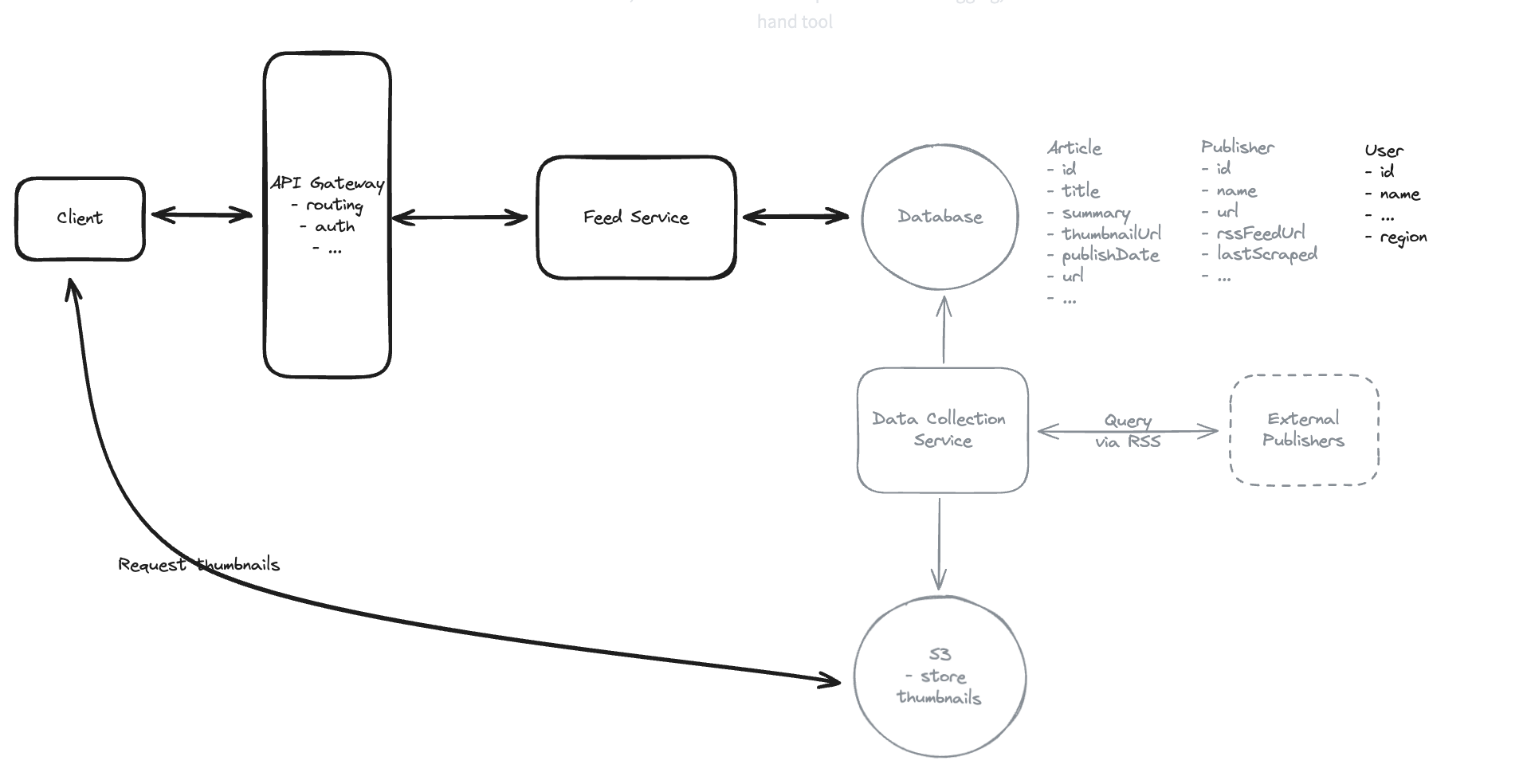

6.4. How will your system collect and store news articles from thousands of publishers worldwide via their RSS feeds?

6.5. How will your system show users a regional feed of news articles?

6.6. Scroll through the feed ‘infinitely’?

-

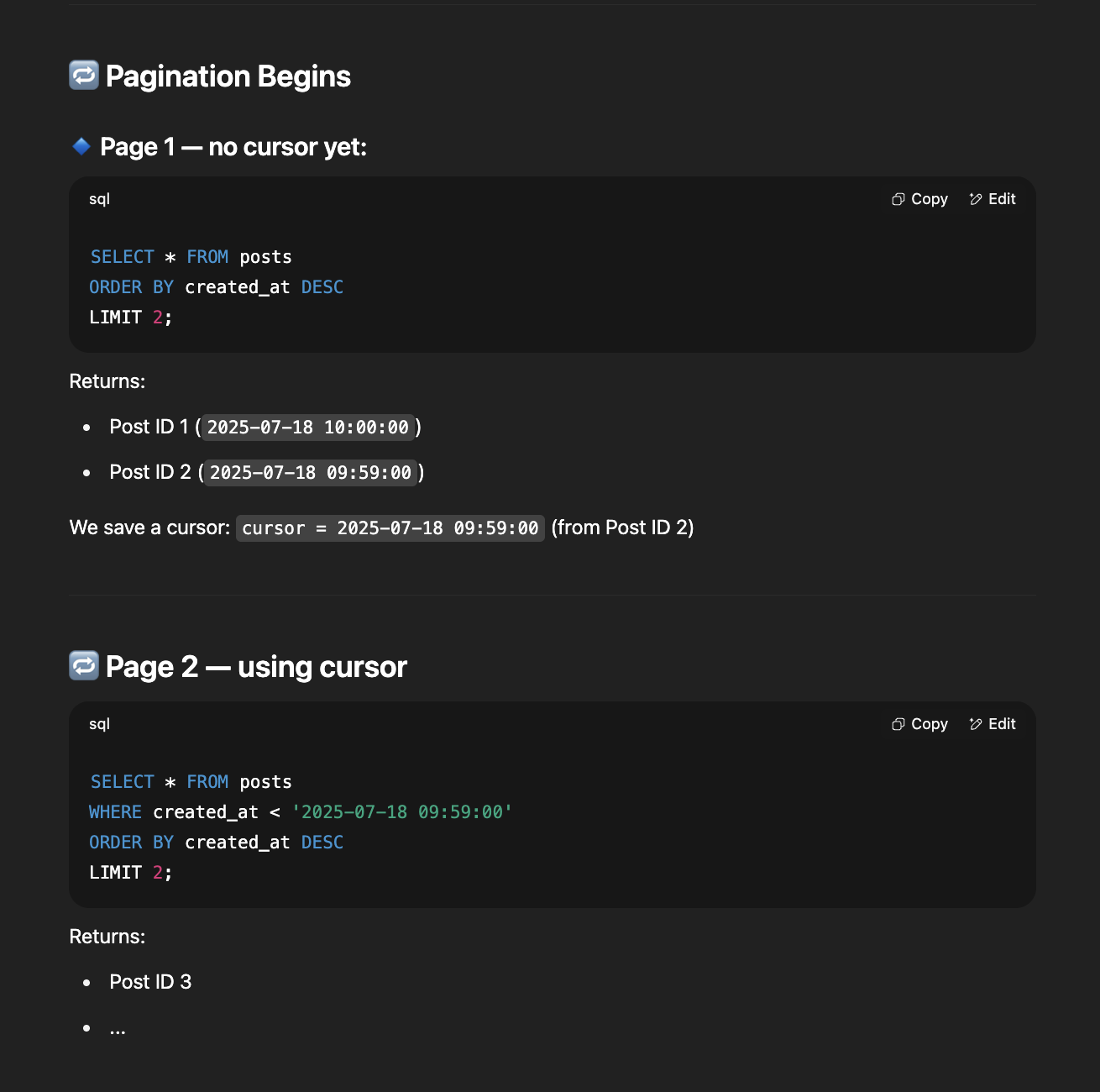

An optimal solution could use monotonically increasing article IDs (like ULIDs) as cursors, eliminating timestamp collisions entirely.

-

This makes pagination incredibly simple: we just query for articles with IDs less than the cursor value => Scroll from the highest ID to the lowest one. We just store the last article we saw client side as the cursor and pass it along with each API request.

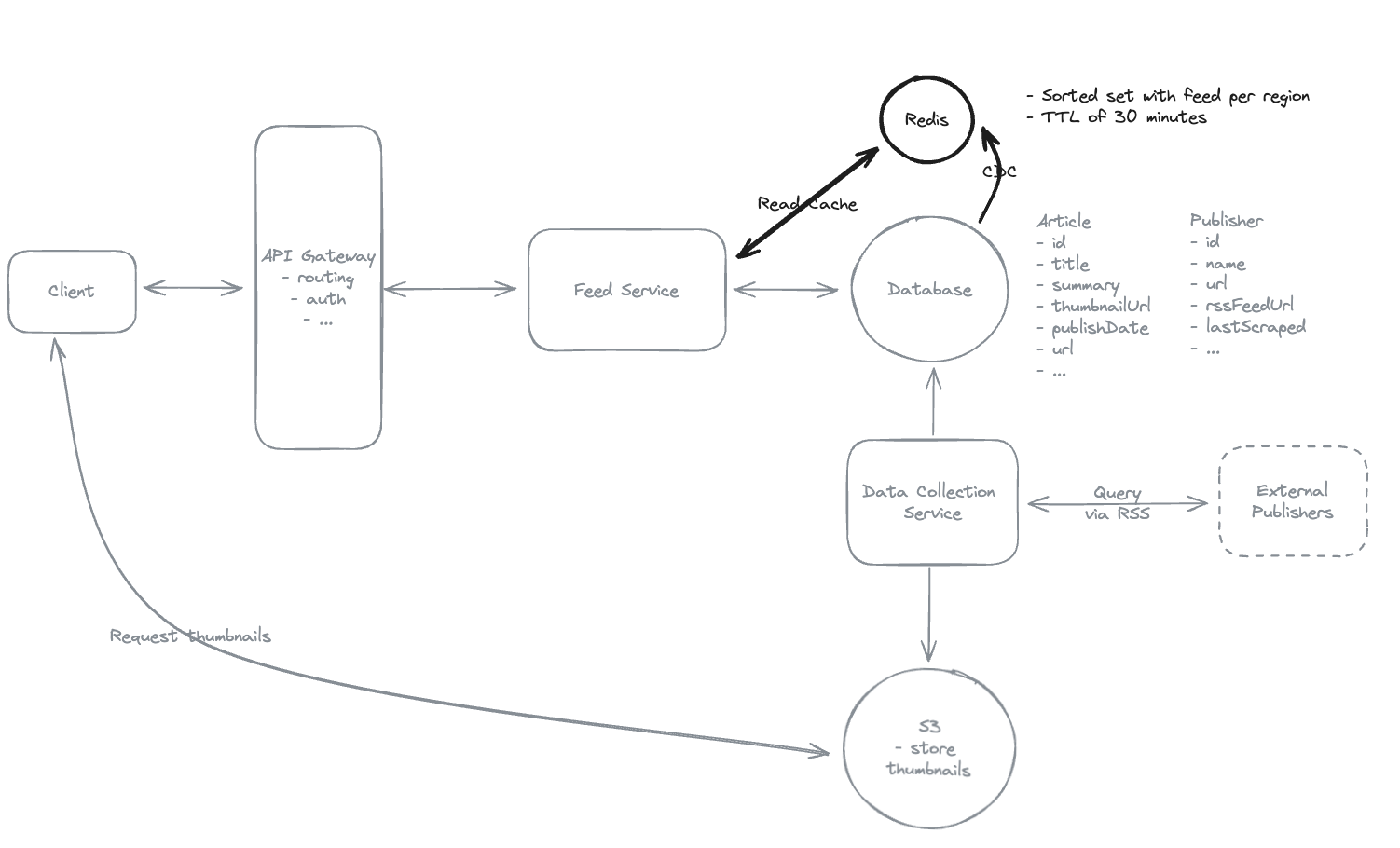

6.7. How do you ensure that users feeds load quickly (< 200ms)?

-

Using CDC (Change Data Capture) from database to Redis.

-

Update pre-computed value to Redis, using ZREVRANGE, maintain only the most recent 1,000-2,000 articles per region.

6.8. How do we ensure articles appear in feeds within 30 minutes of publication? Even for publishers that don’t have RSS feeds.

-

Integrate with Webhook of platforms.

-

Using 3 methods: frequent RSS polling, Web Scraper, Webhook.

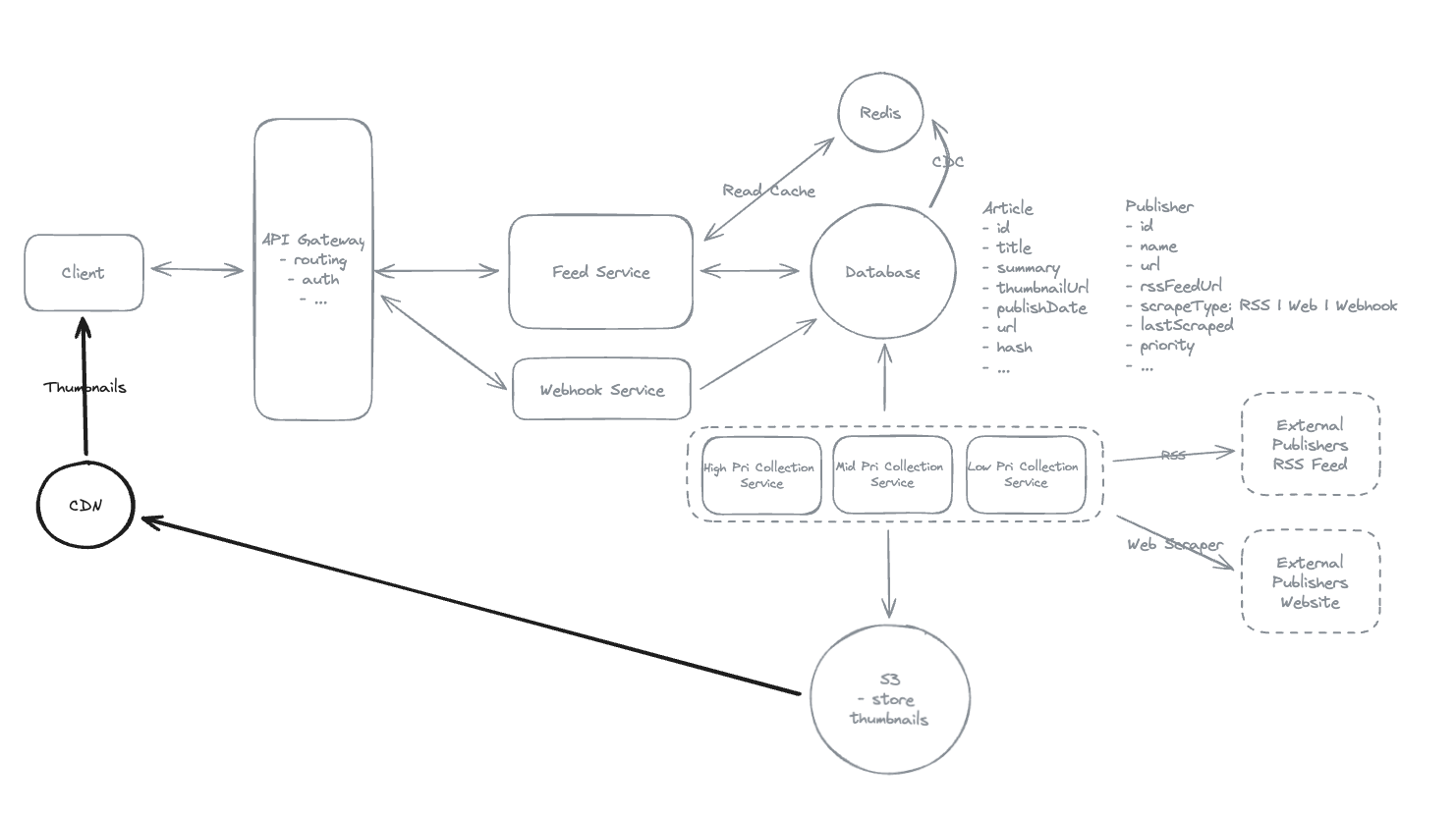

6.9. How do you efficiently deliver thumbnail images for millions of news articles in user feeds?

- Using thumbnail in region of the client-side.

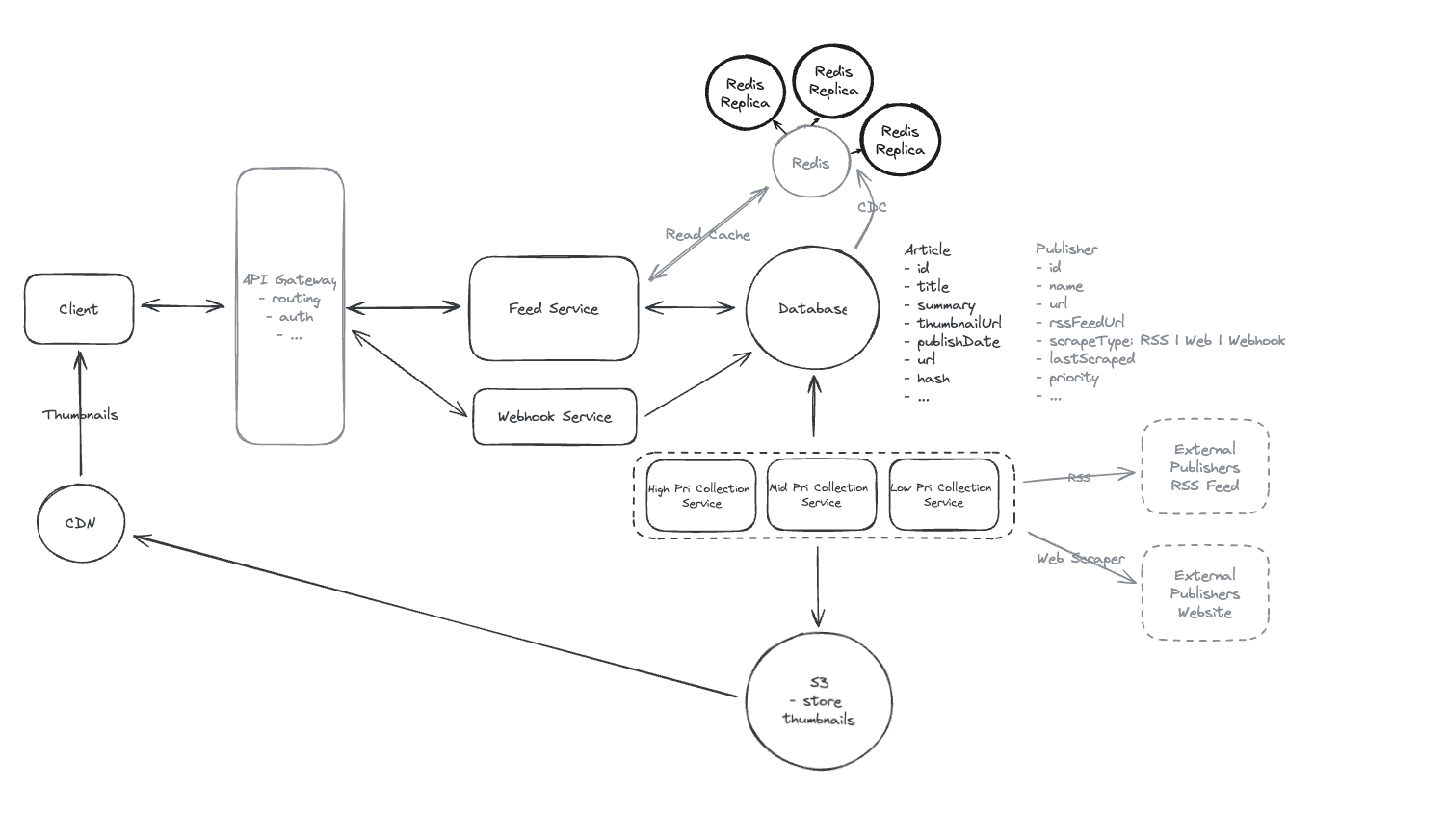

6.10. How do you scale your cache infrastructure to handle 10M concurrent users requesting feeds?

- With 10M concurrent users requesting feeds, a single cache instance can only handle ~100k requests per second, creating a massive bottleneck

=> Scale the redis, each instance 100k rps -> 100 instance can handle 10M rps.

6.11. Why do news aggregators like Google News download and store their own copies of publisher thumbnails rather than linking directly to publisher images?

- Publisher images can be slow to load, change URLs, or become unavailable, which would break the feed experience => Storing local copies ensures consistent load and latency.

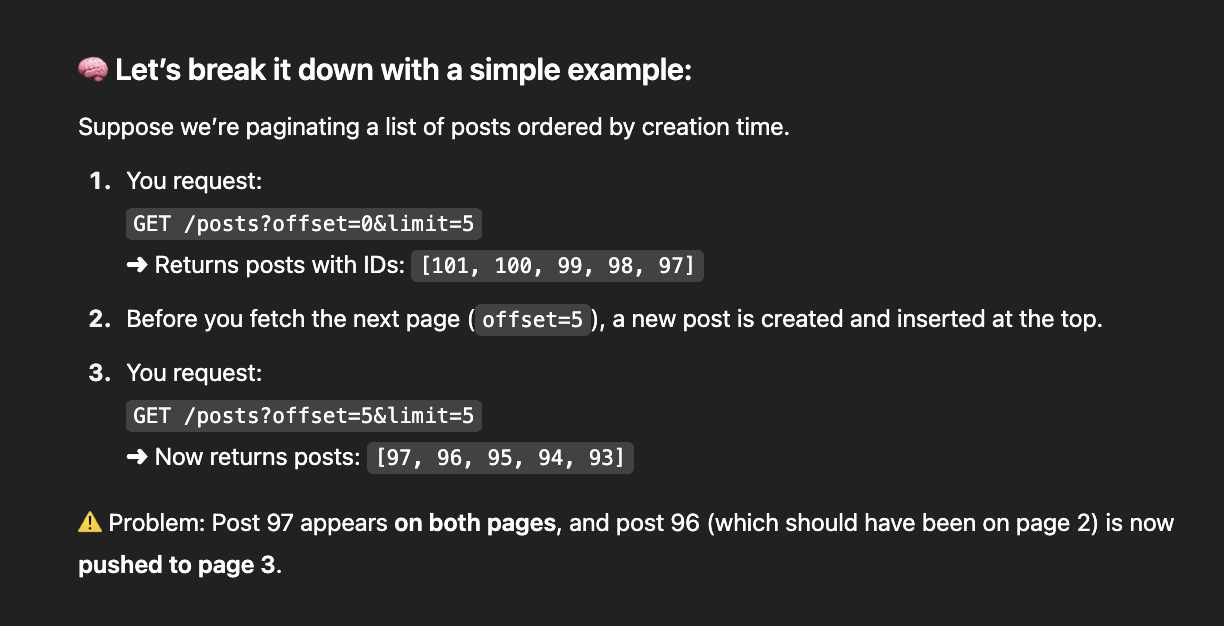

6.12. Cursor-based pagination & Offset-page Approach

-

Cursor-based pagination -> decrease down ID from the cursor.

-

Offset page -> Rerender when publishers have new data.

6.13. Why might implementing webhooks from publishers be preferred over frequent RSS polling for breaking news delivery?

- Webhooks provide immediate notification when content is published, reducing discovery latency from minutes to seconds.

6.14. When implementing personalized news feeds, why might ‘pre-computed user caches’ be worse than ‘dynamic feed assembly’ despite being faster?

- Pre-computed user caches require enormous additional memory and introduce complex cache invalidation logic

6.15. Why do news aggregators implement Change Data Capture (CDC) instead of simple database polling for cache updates?

-

CDC triggers cache updates immediately when new articles are inserted, providing sub-second freshness.

-

Pooling require a high database load.

6.16. During a major election, your Redis cache serving 100M users gets overwhelmed at 100k requests/second. What’s the BEST immediate scaling solution?

- Add read replicas.

6.17. A system’s database must serve 100,000 read requests per second. Which scaling approach handles this load most effectively?

- Implement read replicas.

6.18. A news publisher’s RSS feed is down for 2 hours during breaking news. What’s the BEST fallback strategy?

-

Context: 1 publisher source is lost => What do you do ?

-

Solution: Using Web Scraping technique if the RSS feeds is failed.

6.19. What happens when cached data becomes stale in high-frequency update systems?

- Users see outdated information

6.20. All of the following improve content freshness

-

Real-time webhooks

-

Frequent polling

-

Change data capture

6.21. Geographic data distribution reduces latency by serving content from nearby locations.

- Yes

6.22. During traffic spikes, which component typically becomes the bottleneck in read-heavy systems?

-

In read-heavy systems, the database or cache layer typically becomes the bottleneck first -> Data retrieval operations.

-

About server: it is basic routing, and load balacers can usually be scaled easily than data layer.

6.24. Eventual consistency is acceptable for news feeds where availability matters more than perfect synchronization.

-

According to the CAP theorem, news aggregation systems often prioritize availability over strict consistency.

-

Users prefer access to slightly outdated content rather than no content at all.

6.25. When implementing category-based news feeds (Sports, Politics, Tech), which approach provides the best balance of performance and resource efficiency?

-

Cache metadata in regional caches (like feed:US) => Filtering from this key.

-

Pros: This avoids the memory explosion of separate category caches (25 categories × 10 regions = 250 cache keys) => Reading 1,000 articles from Redis takes ~10ms, and in-memory filtering adds only 1-2ms.

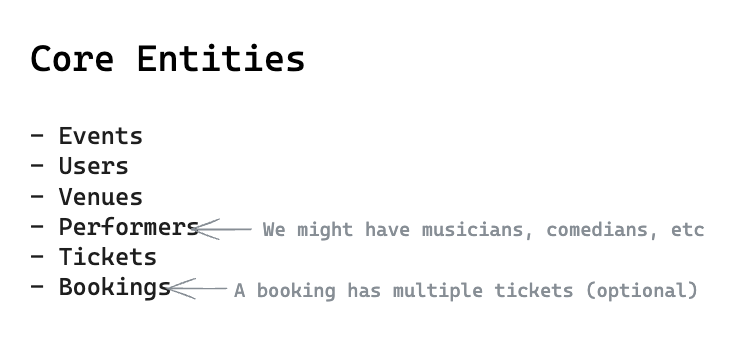

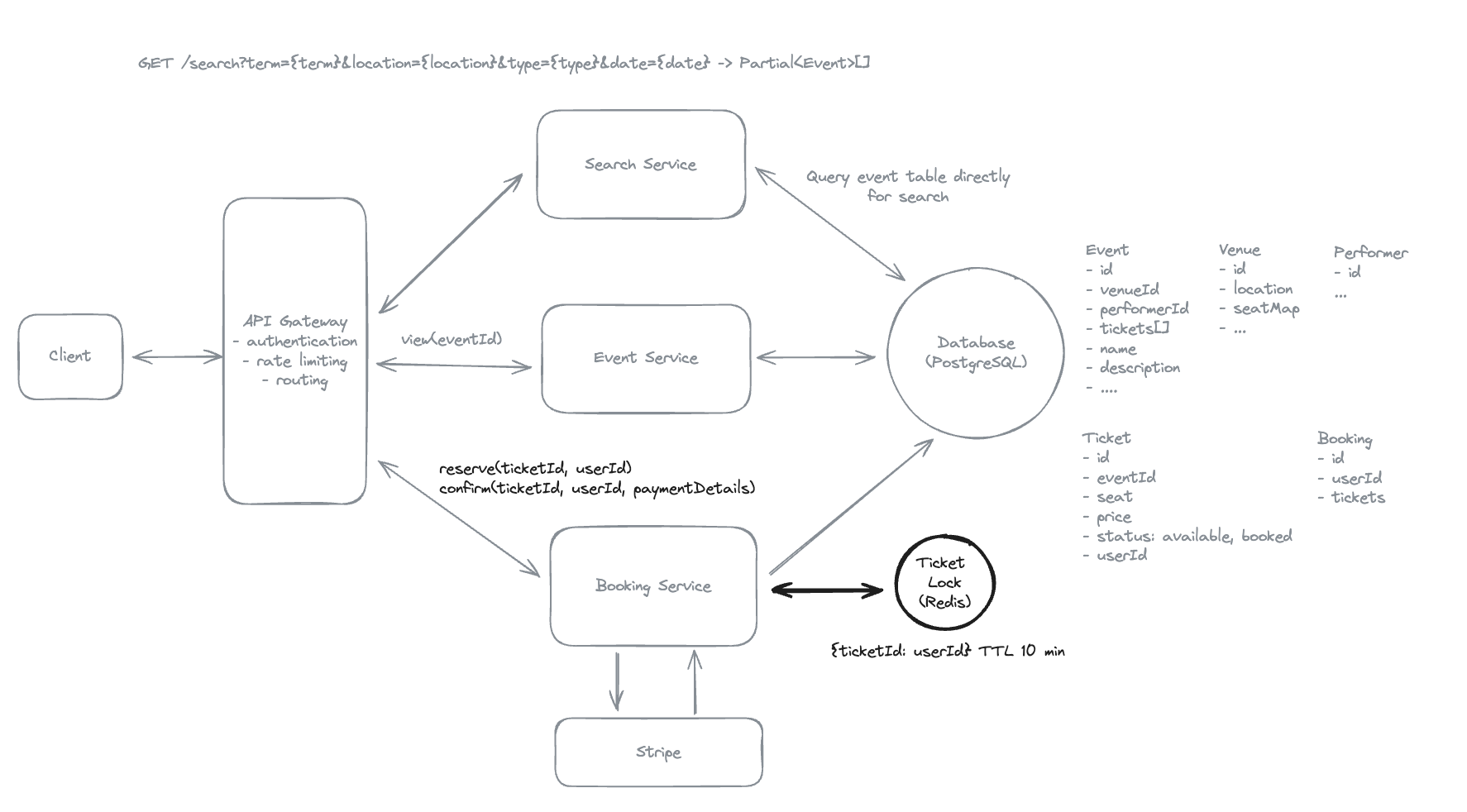

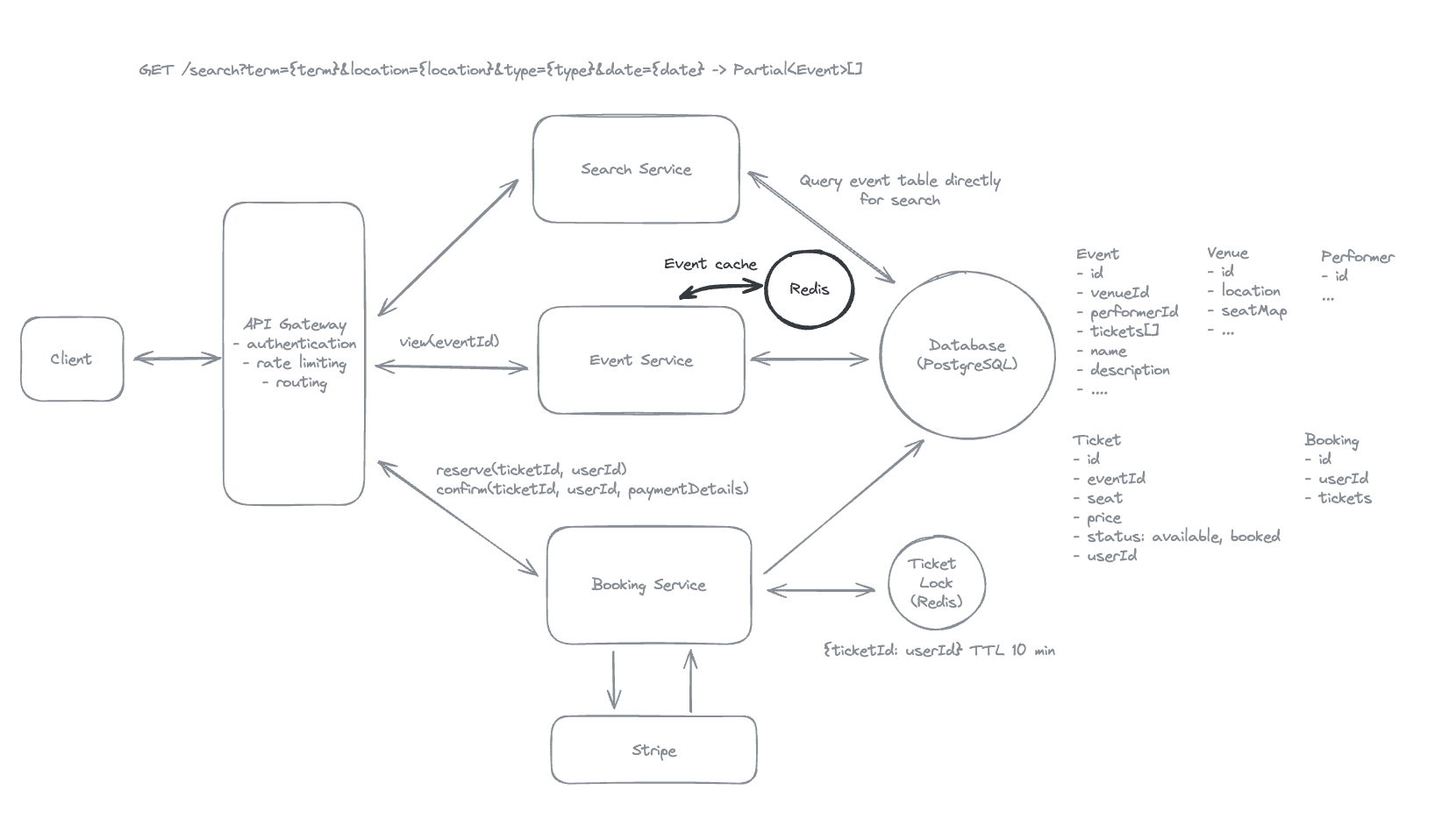

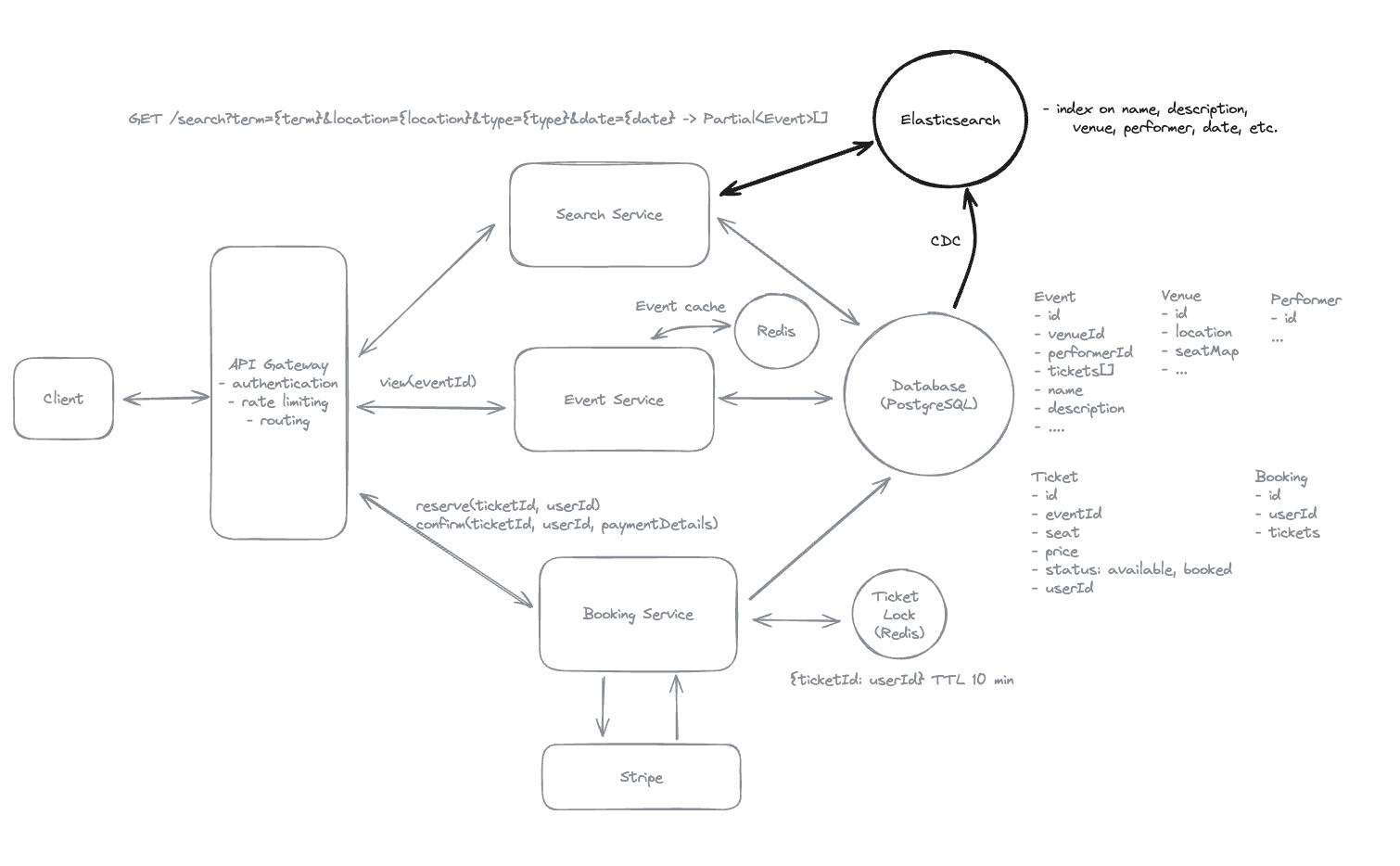

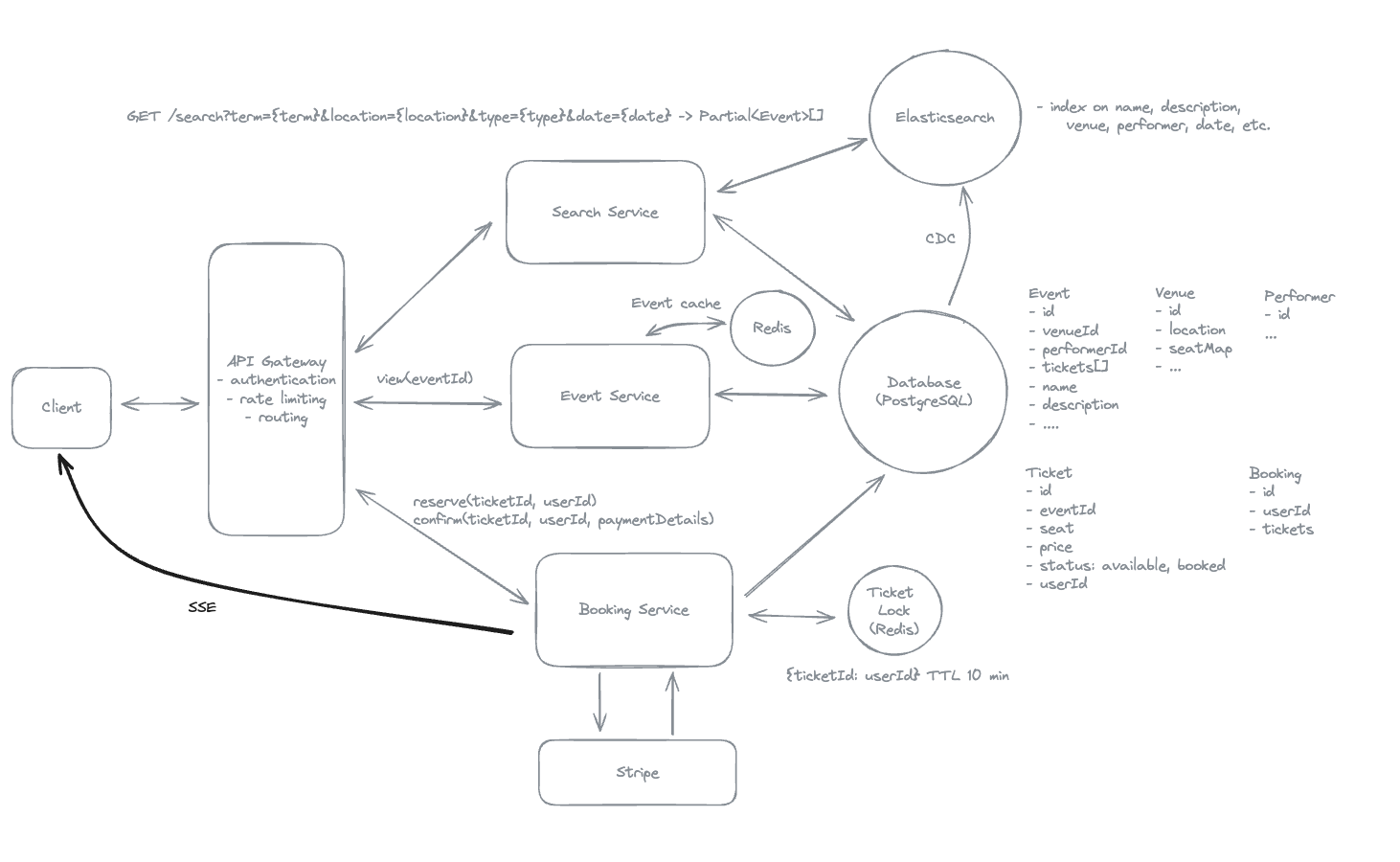

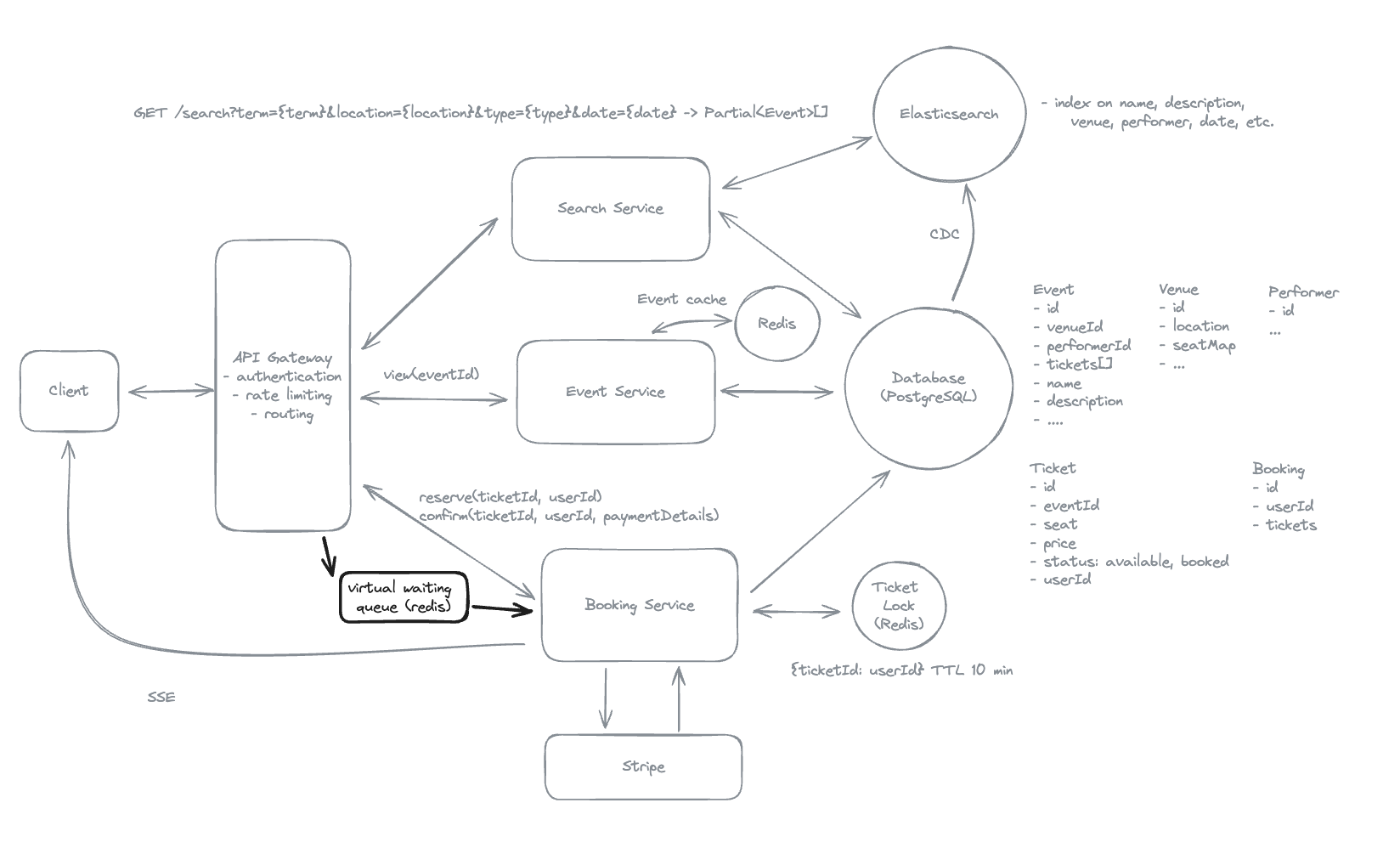

7. Design Ticketmaster

7.1. Non-functional requirements

-

Consistency for booking events.

-

Availability for searching events.

-

Scalability for handle popular events.

7.2. Entities

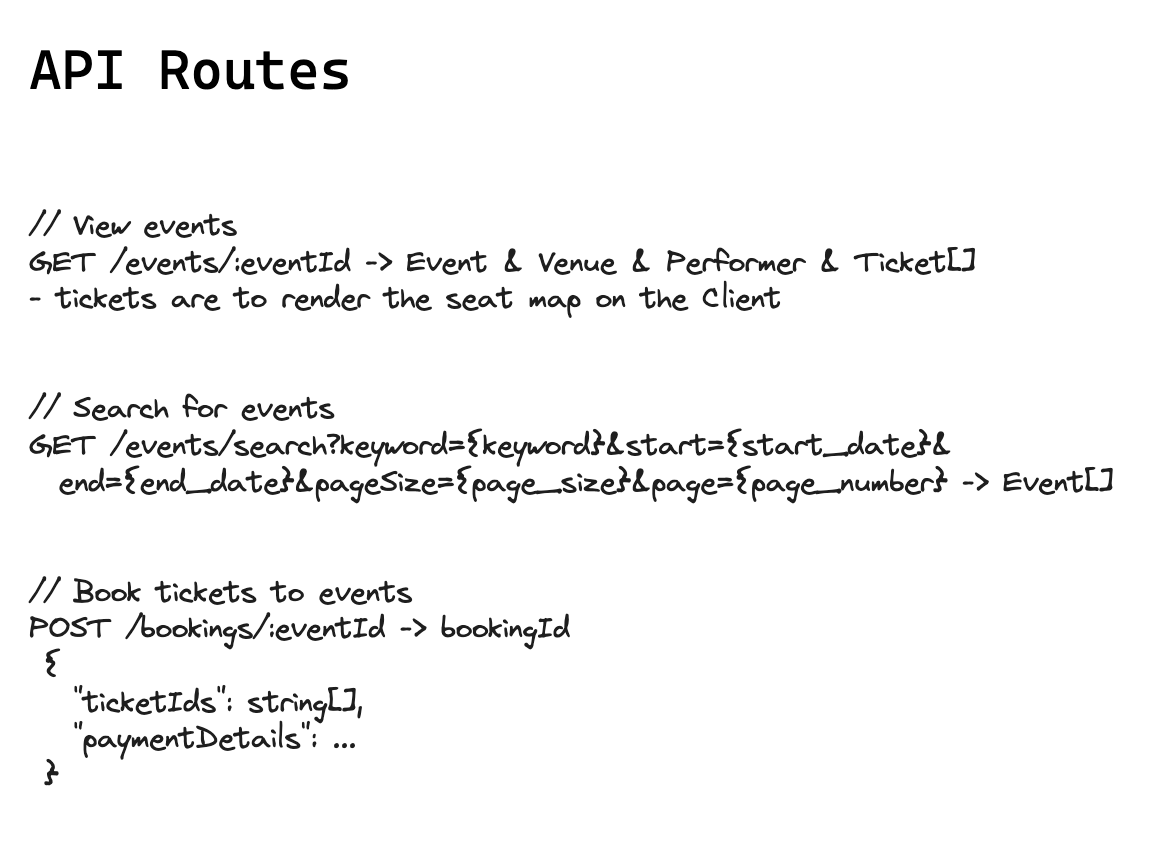

7.3. API Design

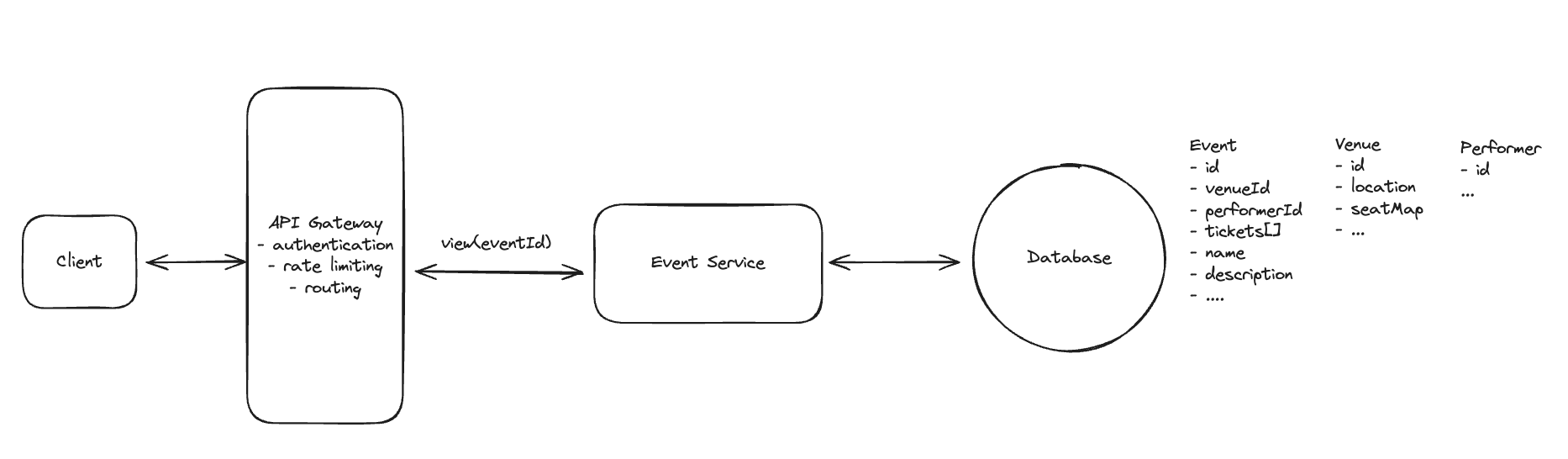

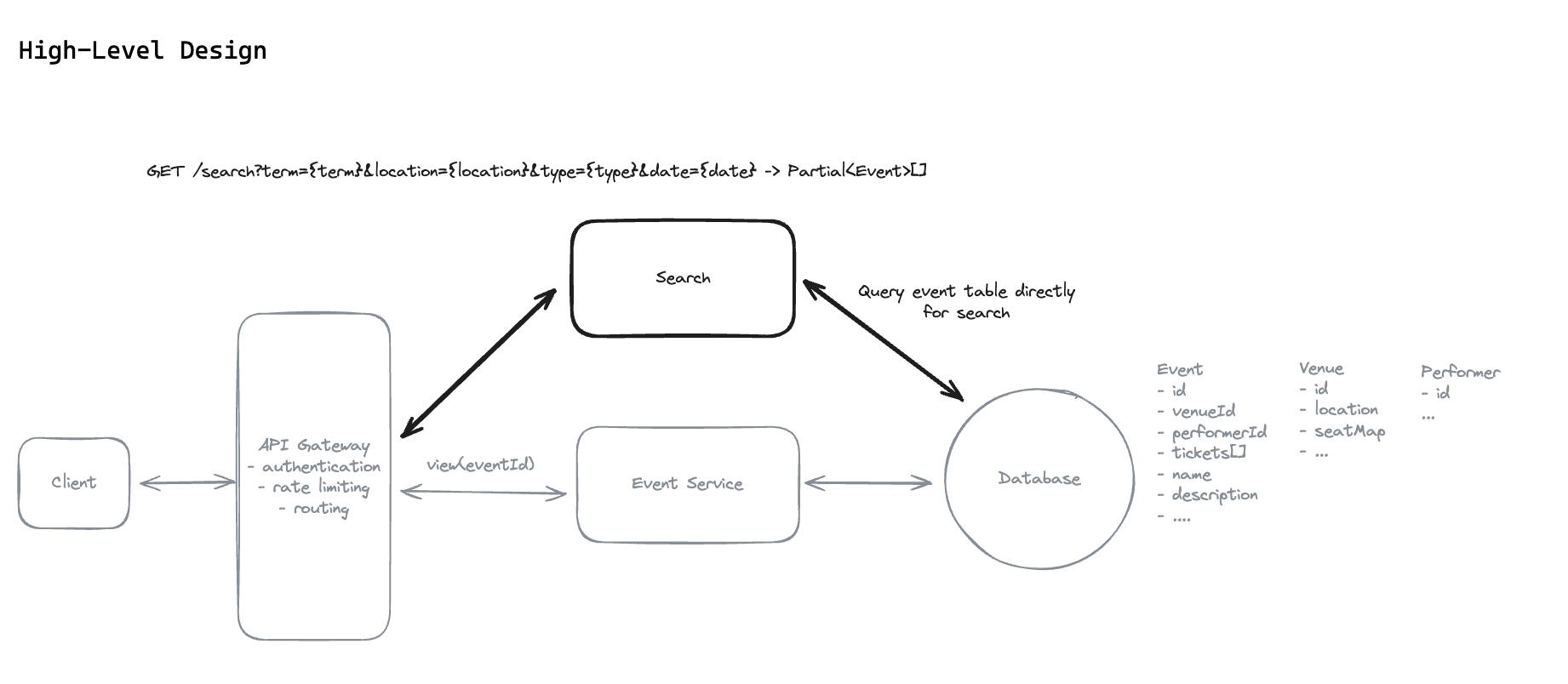

7.4. How will users be able to view simple event details when clicking on an event? ie. name, description, location, date, etc.

7.5. How will users be able to search for events?

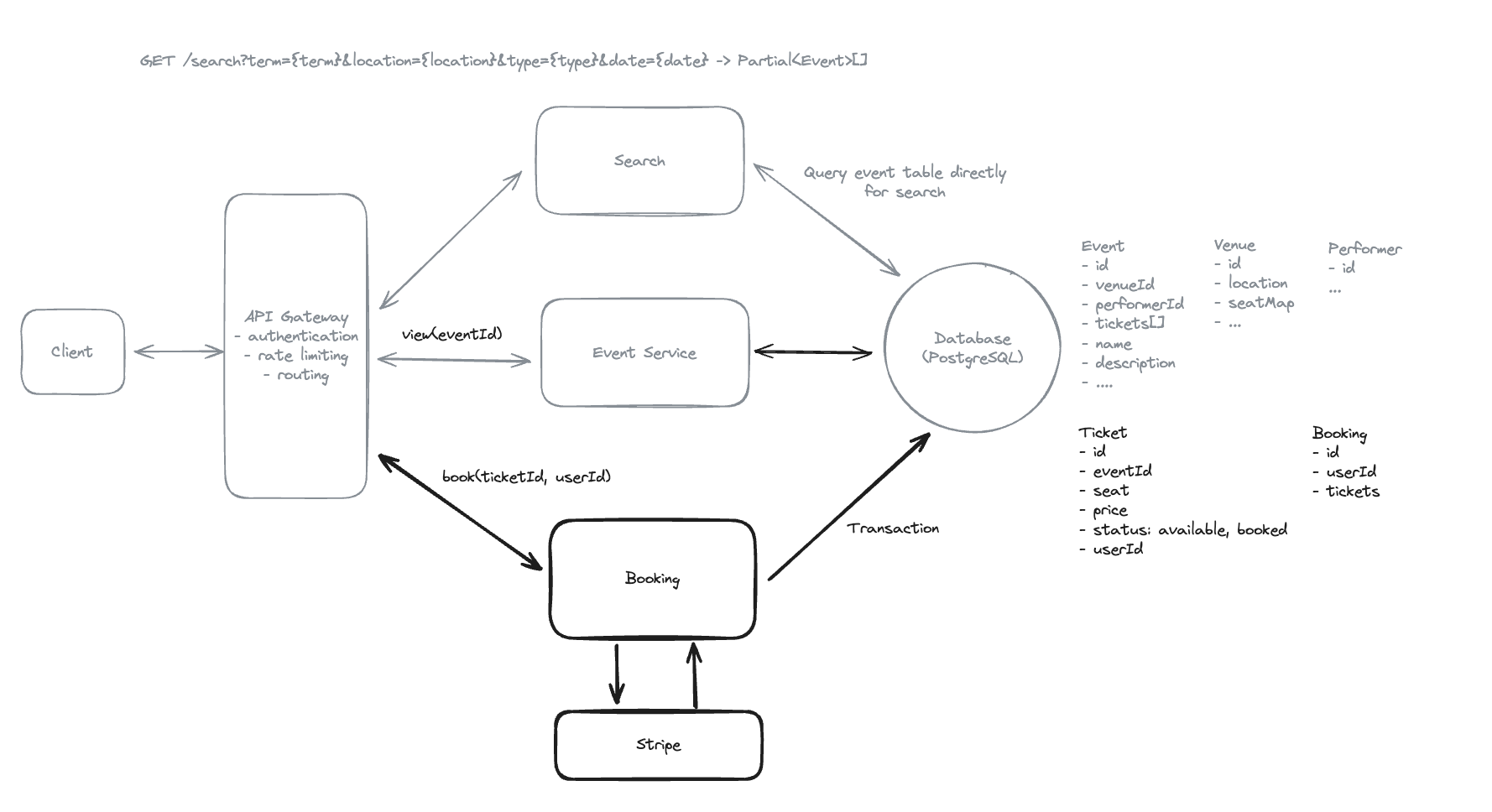

7.6. How will users be able to book specific seats for events? Each physical seat has exactly one ticket. Do not consider General Admission or section-based booking.

7.7. Implement a two-phase booking process:

- Seat Reservation: Temporarily hold selected seats.

- Booking Confirmation: Finalize purchase within a time limit.

How would you design this to prevent users from losing seats during checkout?

-

To implement the two-phase booking process and prevent users from losing seats during checkout, we’ll use a distributed lock system with Redis and a 10-minute TTL (Time to Live). When a user selects seats to reserve, the client sends a POST request to the Booking Service with the selected ticketIds. The Booking Service attempts to acquire locks for these seats in Redis, setting a TTL of 10 minutes for each lock. This reservation ensures that no other user can reserve or book the same seats during this period.

-

If the user completes the purchase within the 10-minute window, the Booking Service finalizes the booking by updating the ticket statuses to “booked”. If the user does not complete the purchase in time, the locks automatically expire due to the TTL, and the seats become available for others to reserve. This mechanism effectively prevents seat loss during checkout by exclusively holding seats for the user and automatically releasing them if the reservation times out.

7.8. How can your design scale to support up to 10M concurrent users reading event data? Focus on optimizing the database and read flow for this high volume of requests.

- Read from cache => Read replicas, because Database engine need time to query.

7.9. How can you improve search to handle complex queries more efficiently. If you think your design already handles this well, explain how.

- When search it return data from Elastic Search, rather than the database.

7.10. How can you make the seat map on the event page automatically refresh to display the latest seat availability in real time?

-

We can implement Server-Sent Events (SSE). When a user views the seat map on the event page, the client establishes an SSE connection to the Booking Service. The server then pushes updates to the client whenever there’s a change in seat availability—such as seats being reserved or booked by other users. On the client, we’ll receive these updates and block off the seats in the seat map accordingly.

-

As we scale, we may not be able to fit all connections for a single event on a single Booking Service instance. In this case, we can introduce pub/sub to broadcast changes or add a dispatcher that utilizes Zookeeper or a similar service to know which server to send updates to.

7.11. How would you implement a virtual waiting room that queues users for popular events and grants access based on their queue position?

-

We can implement a virtual waiting room using Redis’ Sorted Sets data structure. When users attempt to access the event page during peak times, they are redirected to a waiting room, and their session IDs are added to a Redis Sorted Set with their timestamp as the score, ensuring first-come-first-served order.

-

Every N minutes, or based on the number of completed bookings, we use ZRANGE to pull users from the front of the queue and grant them access to the event details page in a controlled manner, throttling the number of concurrent bookings and preventing system overload. This approach ensures fairness by serving users in the order they arrived and provides scalability to handle surges in traffic.

Example:

ZADD booking_queue 1625380000 user1

ZADD booking_queue 1625380001 user2

ZADD booking_queue 1625380002 user3

Top 100 users from 0 to 99.

ZRANGE booking_queue 0 99

-- KEYS[1] = ZSET key (e.g., "booking_queue")

-- ARGV[1] = user_id

-- ARGV[2] = score (e.g., timestamp)

-- ARGV[3] = max size (e.g., 100)

local zset = KEYS[1]

local user = ARGV[1]

local score = tonumber(ARGV[2])

local max_size = tonumber(ARGV[3])

-- Get current size

local current_size = redis.call("ZCARD", zset)

if current_size < max_size then

redis.call("ZADD", zset, score, user)

return "ADDED"

else

-- Get the lowest score entry

local lowest = redis.call("ZRANGE", zset, 0, 0, "WITHSCORES")

local lowest_user = lowest[1]

local lowest_score = tonumber(lowest[2])

if score > lowest_score then

-- Remove the oldest

redis.call("ZREM", zset, lowest_user)

-- Add the new user

redis.call("ZADD", zset, score, user)

return "REPLACED"

else

return "REJECTED"

end

end

7.12. Redis faster than Disk-based access

-

Because Redis is in-memory access.

-

DBMS is Disk-based access

7.13. Which consistency model prevents concurrent processes from allocating the same resource?

- Strong Consistency

7.14. Which approach works BEST for efficient partial text matching in search queries?

Full-text search engines

-

SQL Like: full table scans.

-

Elastic Search: Inverted Index, Fuzzy Matching.

7.15. Distributed locks prevent multiple processes from accessing shared resources simultaneously.

- Yes

7.16. A system needs to prevent double resource allocation, which database property is most essential?

- Transactions ensure that operations like checking availability and marking resources as allocated happen atomically.

=> Preventing race conditions where multiple users could claim the same resource simultaneously.

7.17. Horizontal Scale

- Stateless service.

7.18. Which technology enables real-time server-to-client data streaming without client polling?

- SSE.

7.19. What happens when a distributed lock’s TTL expires before the operation completes?

- Lock becomes available to other processes

7.20. Which strategy works BEST for managing millions of simultaneous users during high-demand events?

- Implement virtual waiting queues

7.21. Inverted indexes improve full-text search performance by mapping words to documents.

- True

7.22. When designing for high availability, which system component should prioritize consistency over availability?

- Payment processing must prioritize consistency to prevent double charges, financial discrepancies, and fraud.

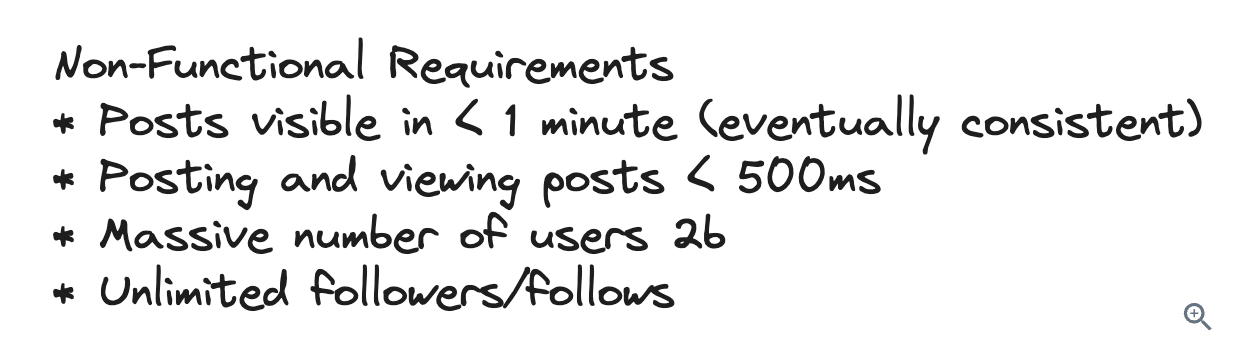

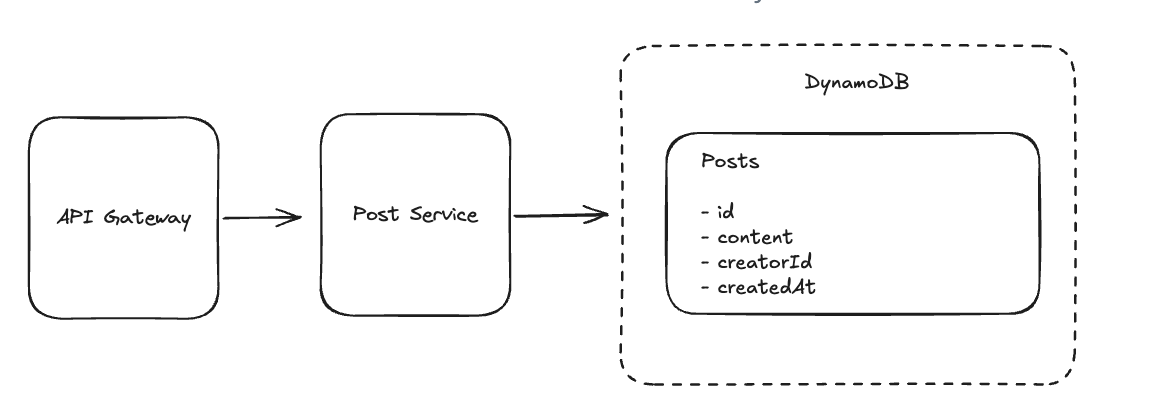

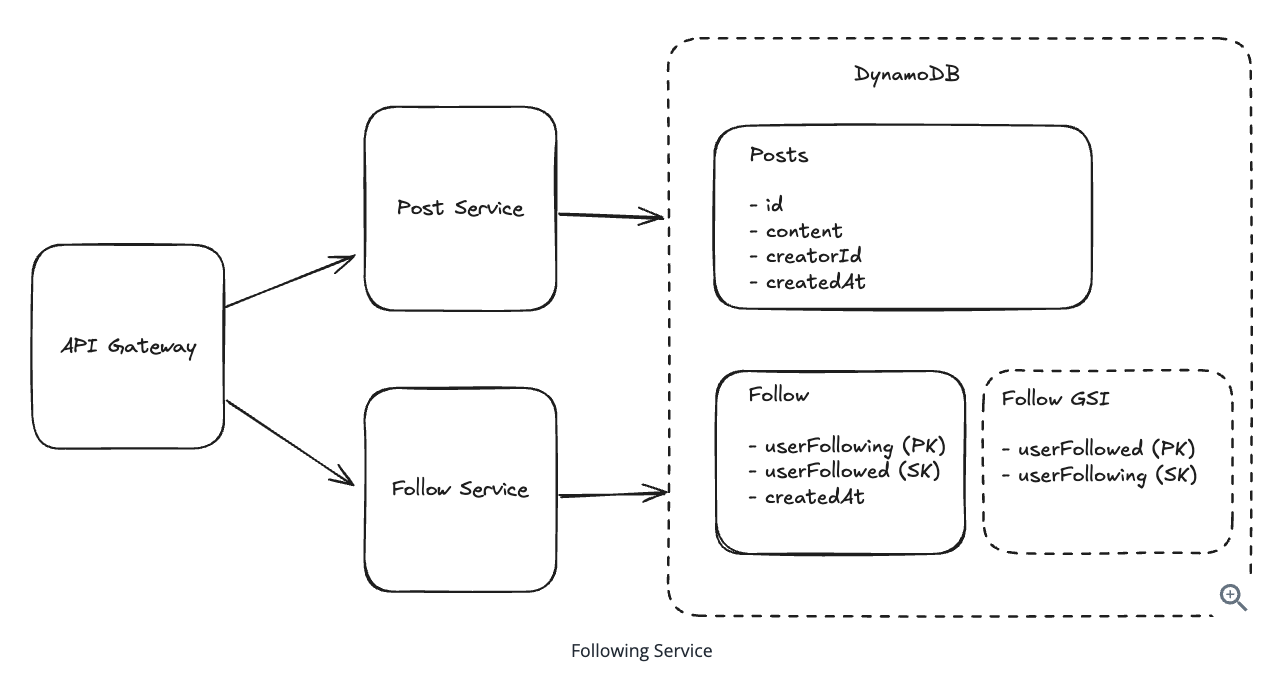

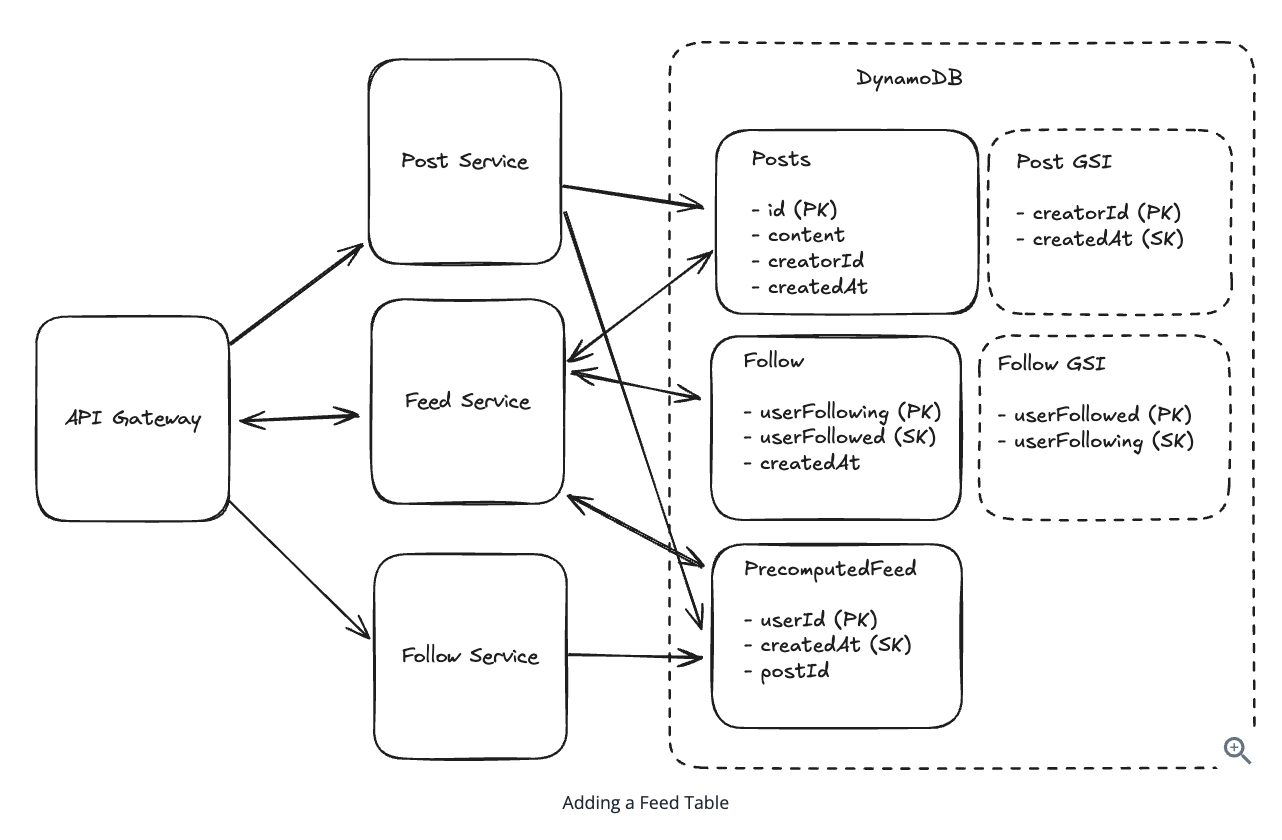

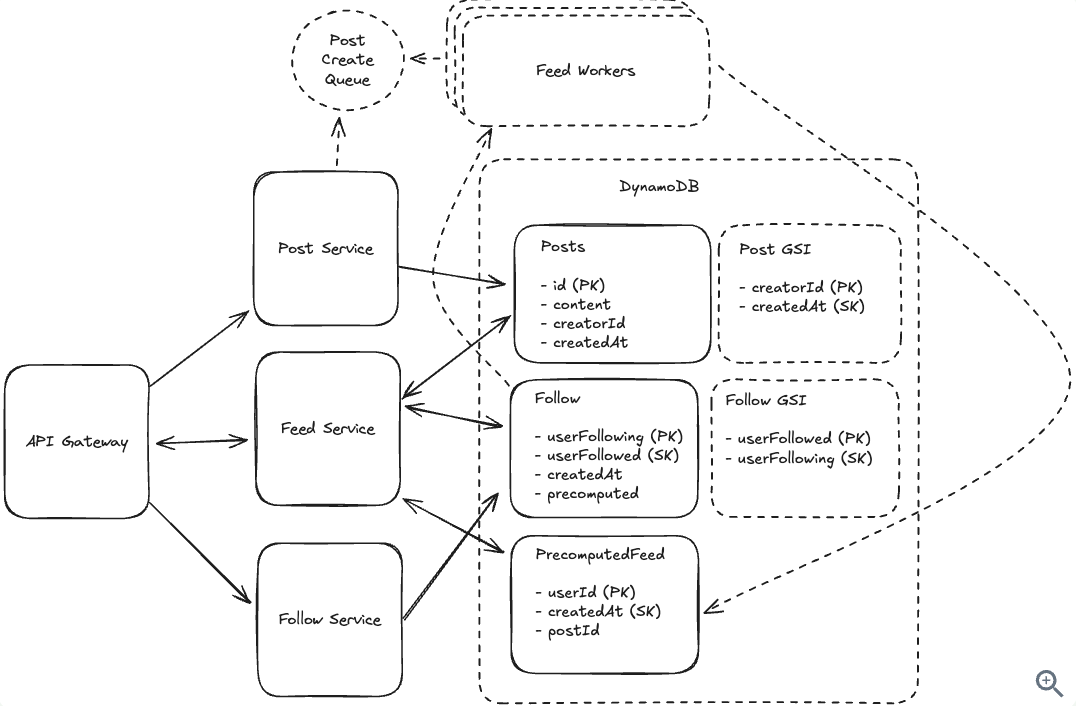

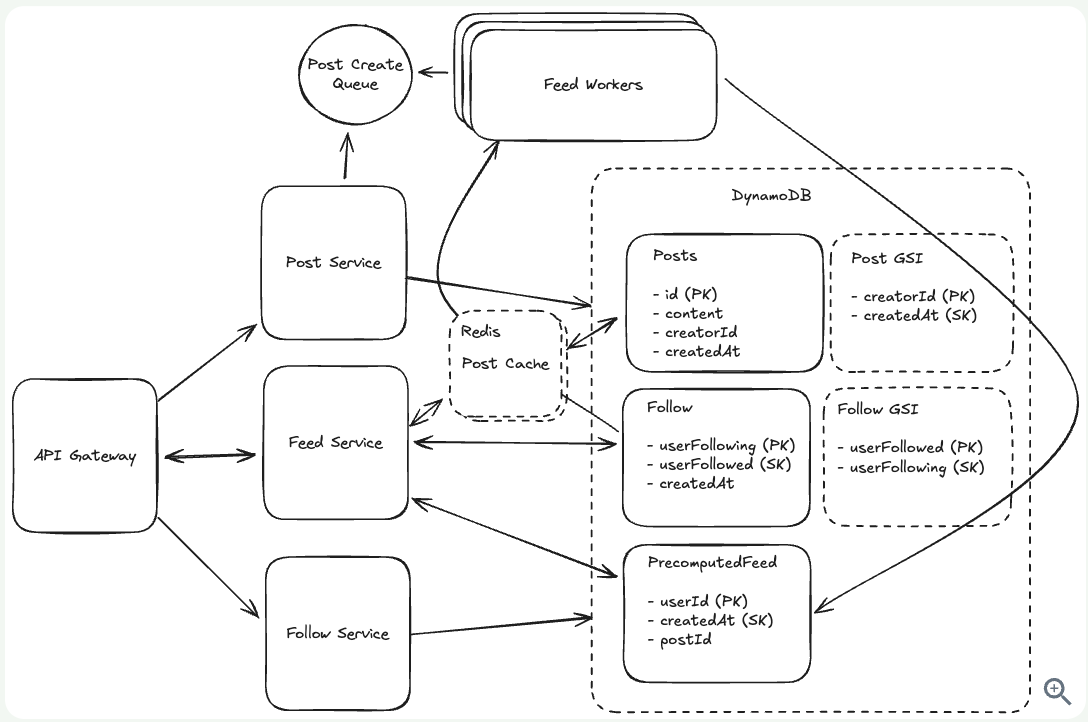

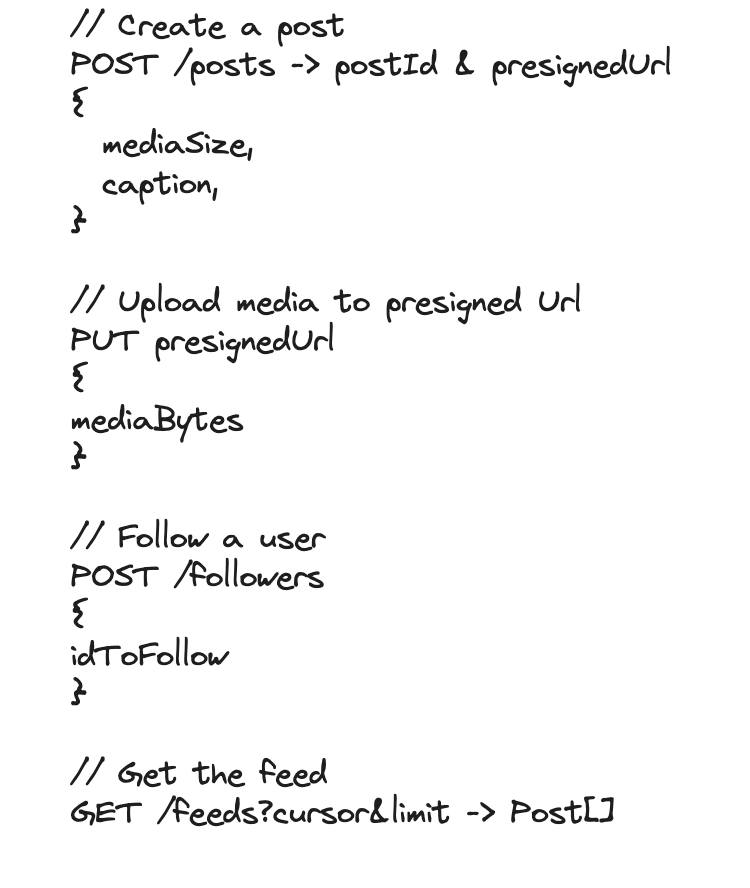

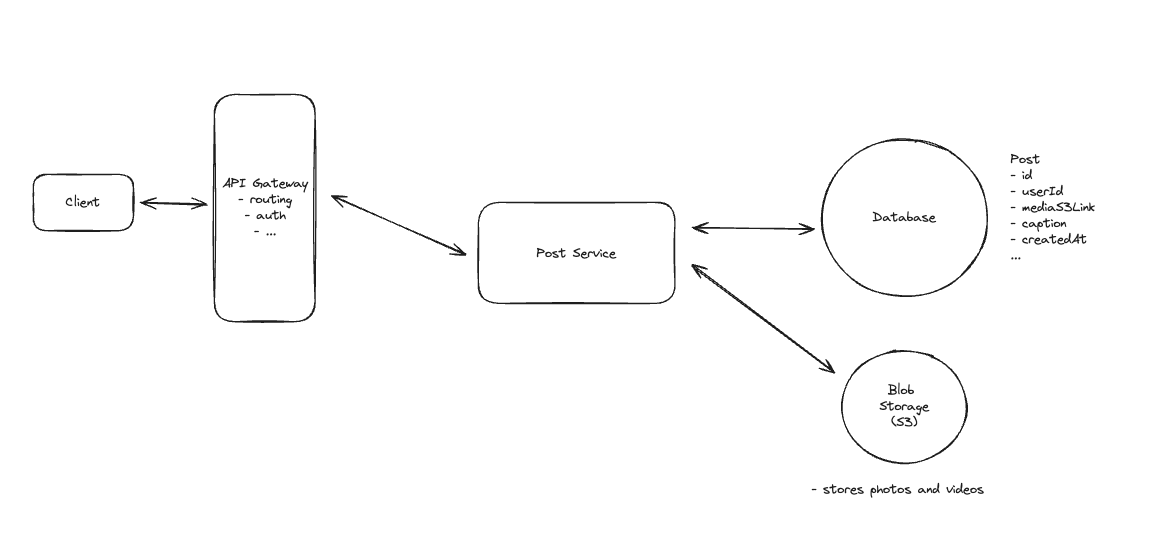

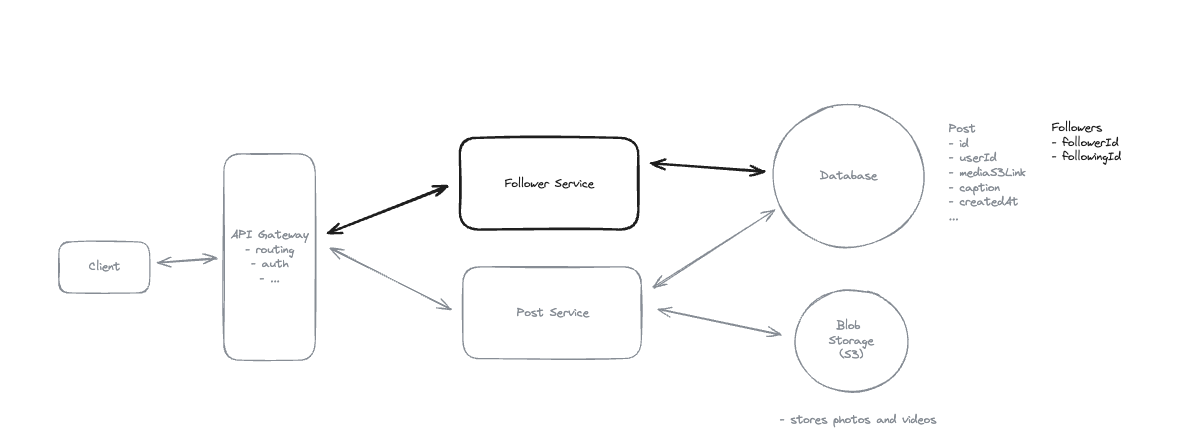

8. Design FB News Feed

8.1. Non-functional Requirements

8.2. Entities

8.3. Users should be able to create posts

8.4. Users should be able to friend/follow people.

8.5. How do we handle users who are following a large number of users? (Push Model)

- Push by create new record: UserID -> FollowID -> FeedID

8.6. How do we handle users with a large number of followers?

- Celebrity Problem: Pull Model.

8.7. How can we handle heavy-read and unread of posts?

- Using Redis for heavy-read posts.

8.8. Fan-out on write means aggregating data at read time when a user requests their feed.

-

Fan-out on write means pre-aggregating data when posts are created (at write time).

-

While fan-out on read means aggregating data when users request their feed (at read time).

8.9. Key-value stores can scale infinitely regardless of how requests are distributed across the keyspace.

-

Key-value stores require even load distribution across partitions to scale effectively.

-

If certain keys get much more traffic (hot keys), those partitions become bottlenecks, limiting scalability.

8.10. Secondary indexes in databases are primarily used to improve write performance rather than enable different query patterns.

- Secodary Index: Support read, but longer write.

8.11. What is the most effective approach to handle hot keys in a distributed cache system?

- Implement redundant caching where multiple nodes can serve the same popular keys

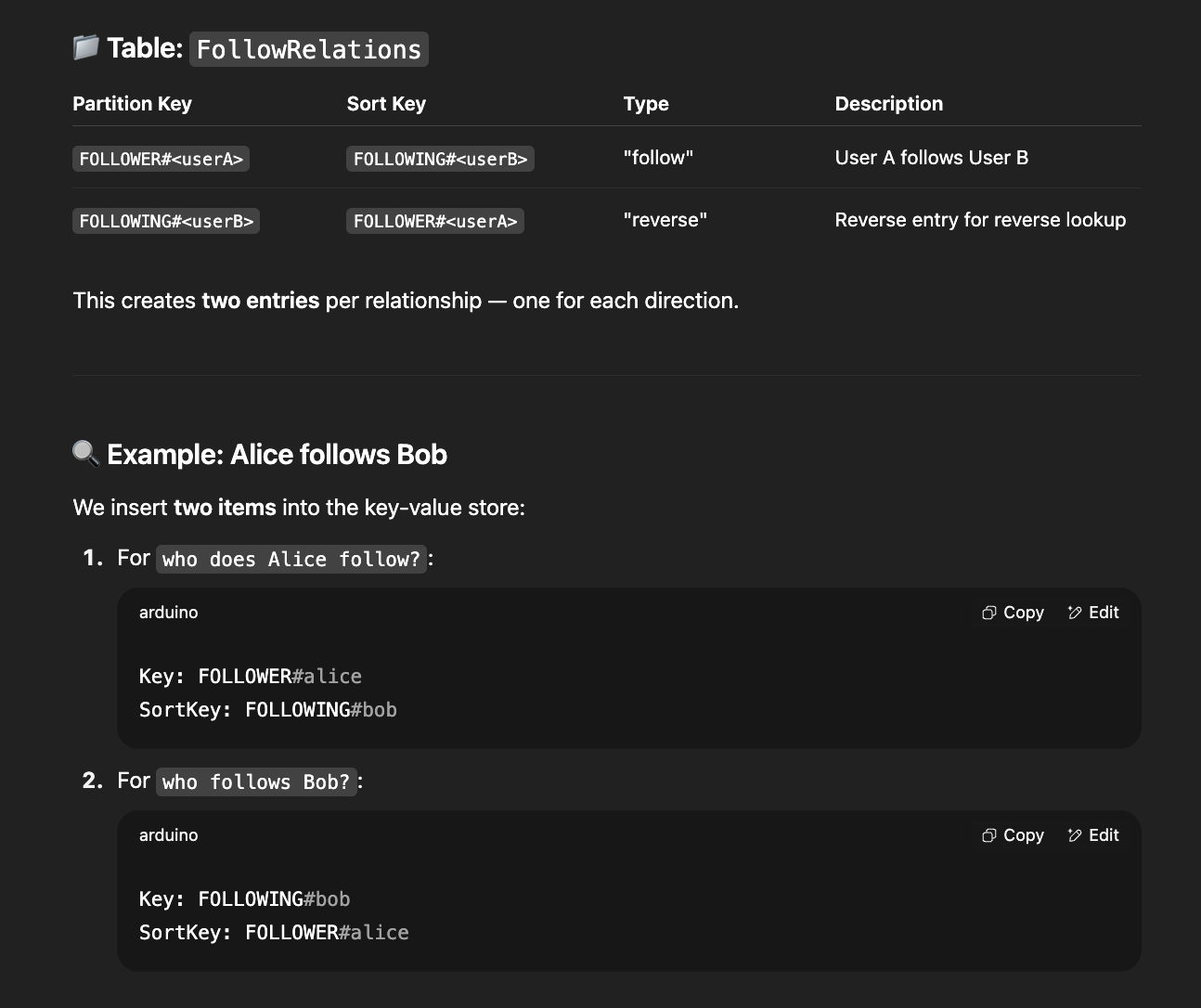

8.12. For modeling follow relationships in a social network using a key-value store, what is the most efficient approach for supporting both ‘who does user X follow’ and ‘who follows user X’ queries?

-

Create a table with composite keys and a secondary index with reversed keys

-

Idea: Using composite keys (follower:following) with a secondary index that reverses the key order (following:follower) allows efficient querying of both access patterns in a single table structure, which is optimal for key-value stores.

- Efficient lookups: All queries are direct key-prefix scans (ideal for key-value stores).

🔑 Partition Key & Sort Key

- Partition Key: Determines which physical partition (node/shard) the item is stored on.

- Sort Key: Determines the order of items within a partition.

8.13. What is the primary benefit of maintaining precomputed feeds for users in a social media system?

- Cache is pre-computed.

8.14. In the context of the CAP theorem, a social media feed system that tolerates up to 1 minute of post staleness is prioritizing which combination?

- Availability and Partition tolerance

8.15. In a hybrid fan-out strategy for social feeds, what is the most practical approach for handling celebrity accounts with millions of followers?

- Skipping fan-out for celebrity accounts (not writing to millions of feeds) and instead merging their recent posts during read operations is more efficient than trying to update millions of precomputed feeds.

8.16. Why are stateless services easier to scale horizontally compared to stateful services?

-

Any instance can handle any request without needing to maintain session data

-

Idea: Horizontal Scaling.

8.17. When using message queues to handle posts from users with varying follower counts, what is a key consideration for queue design?

- Posts from users with few followers require little work (updating few feeds), while posts from users with millions of followers require massive work. The queue system needs to account for this variable workload to avoid bottlenecks.

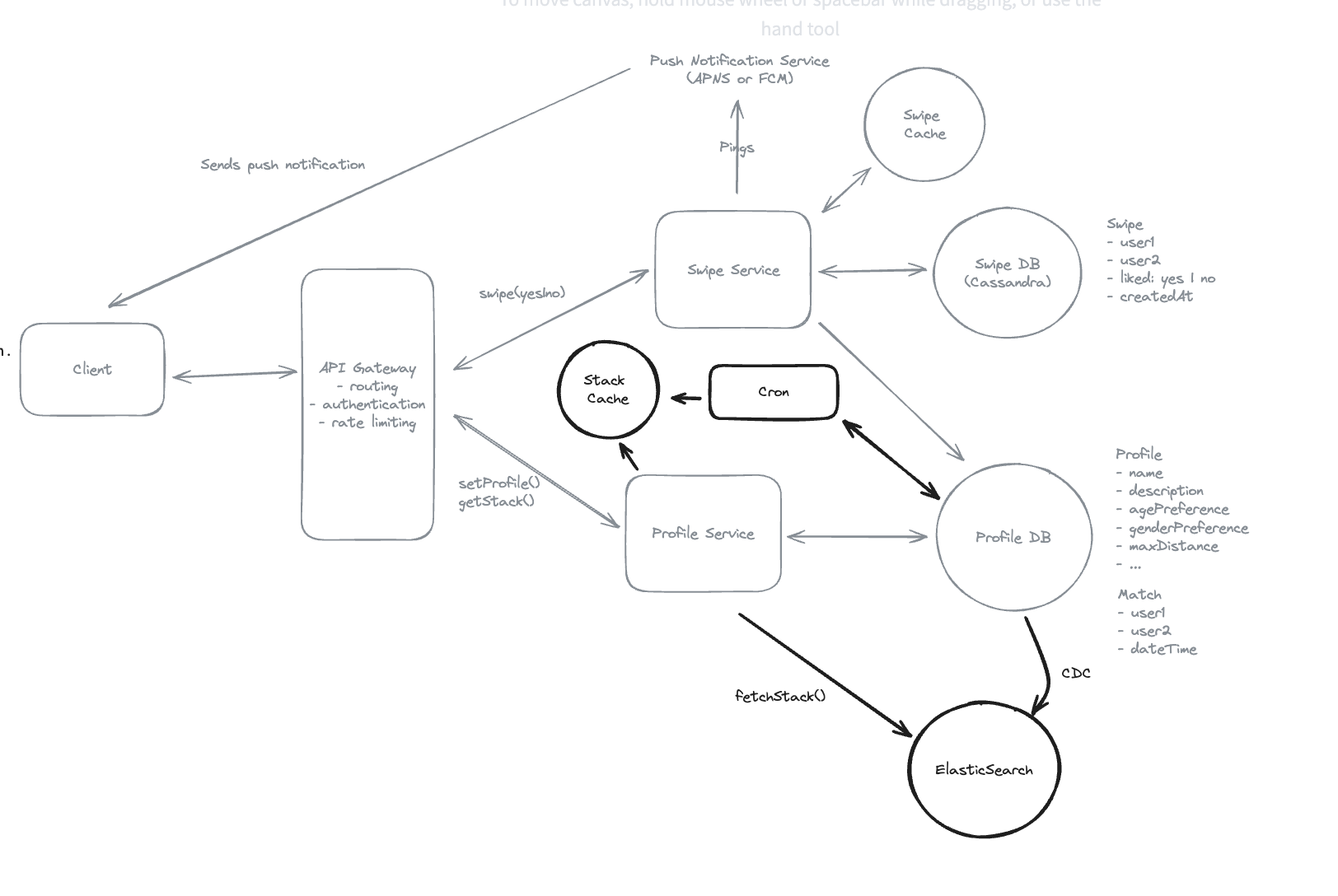

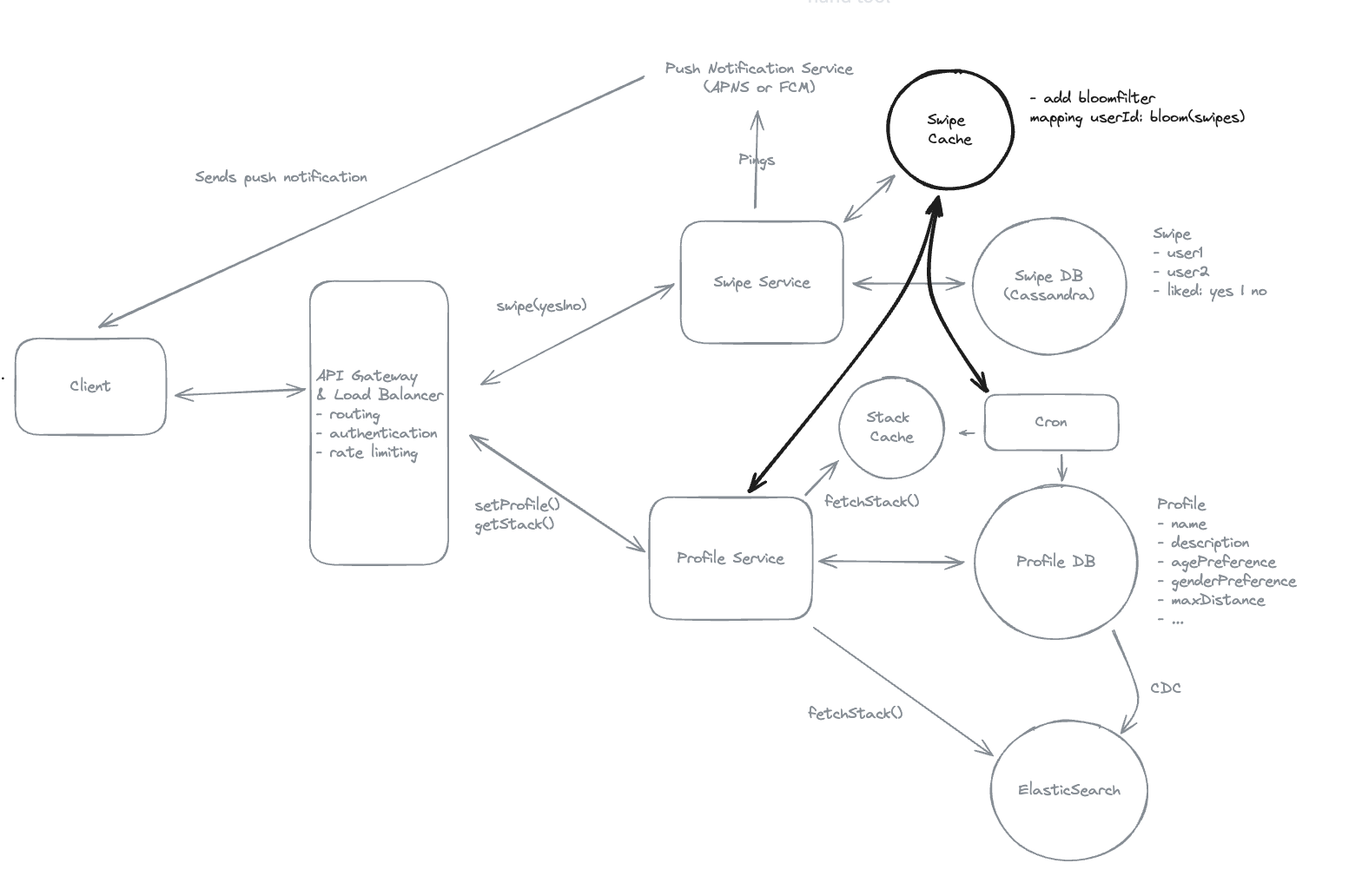

9. Design Tinder

9.1. Functional Requirements

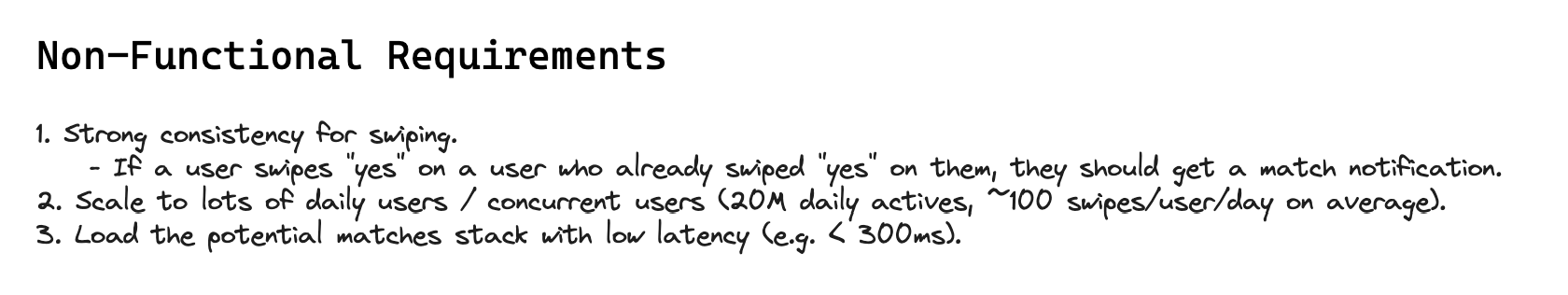

9.2. Non-functional Requirements

9.3. Entities

- Actor of your system, discover later.

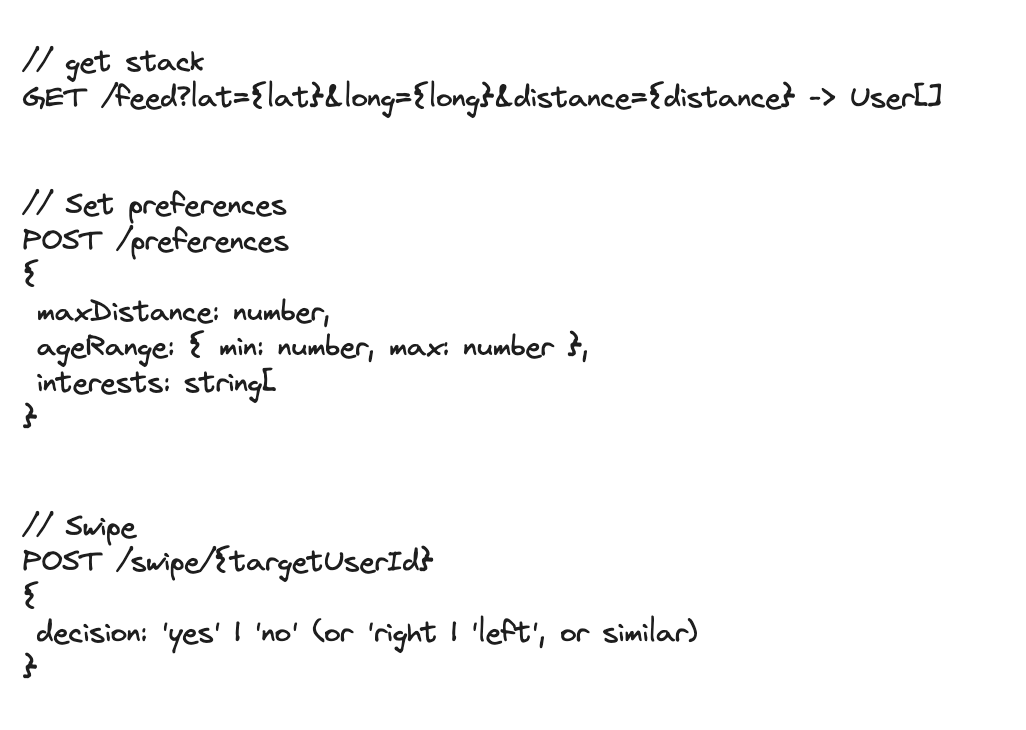

9.4. API Design

- Each demand (requirement) => 1 Endpoint

- View and Actions

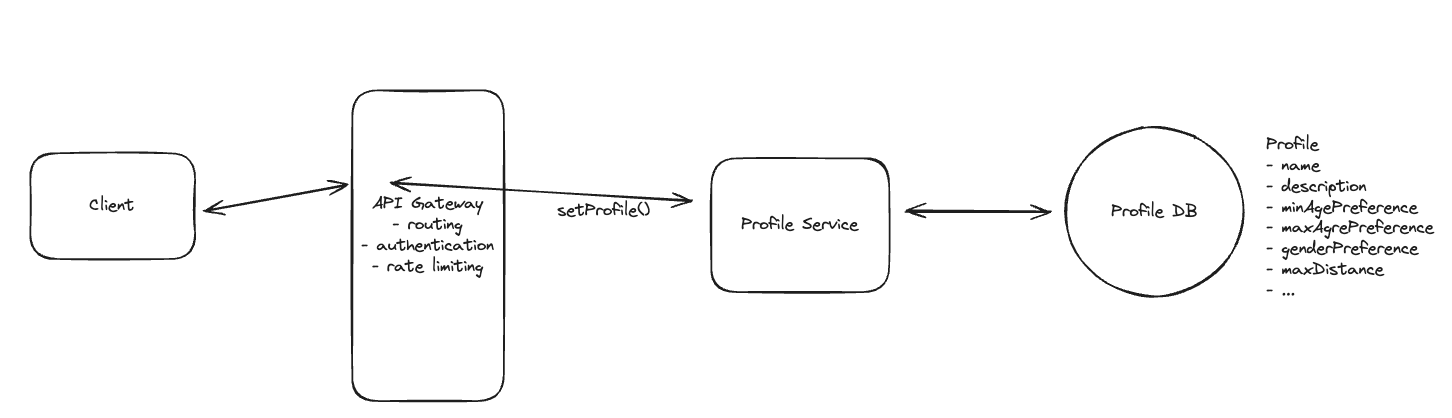

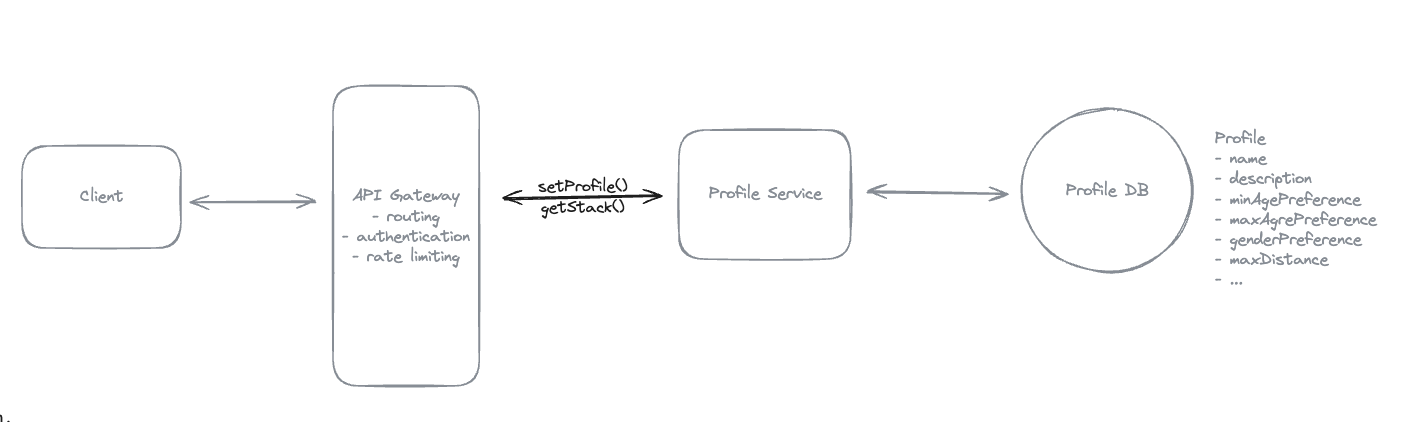

9.5. How will users be able to create a profile and set their preferences?

9.6. How will users be able to get a stack of recommended matches based on their preferences?

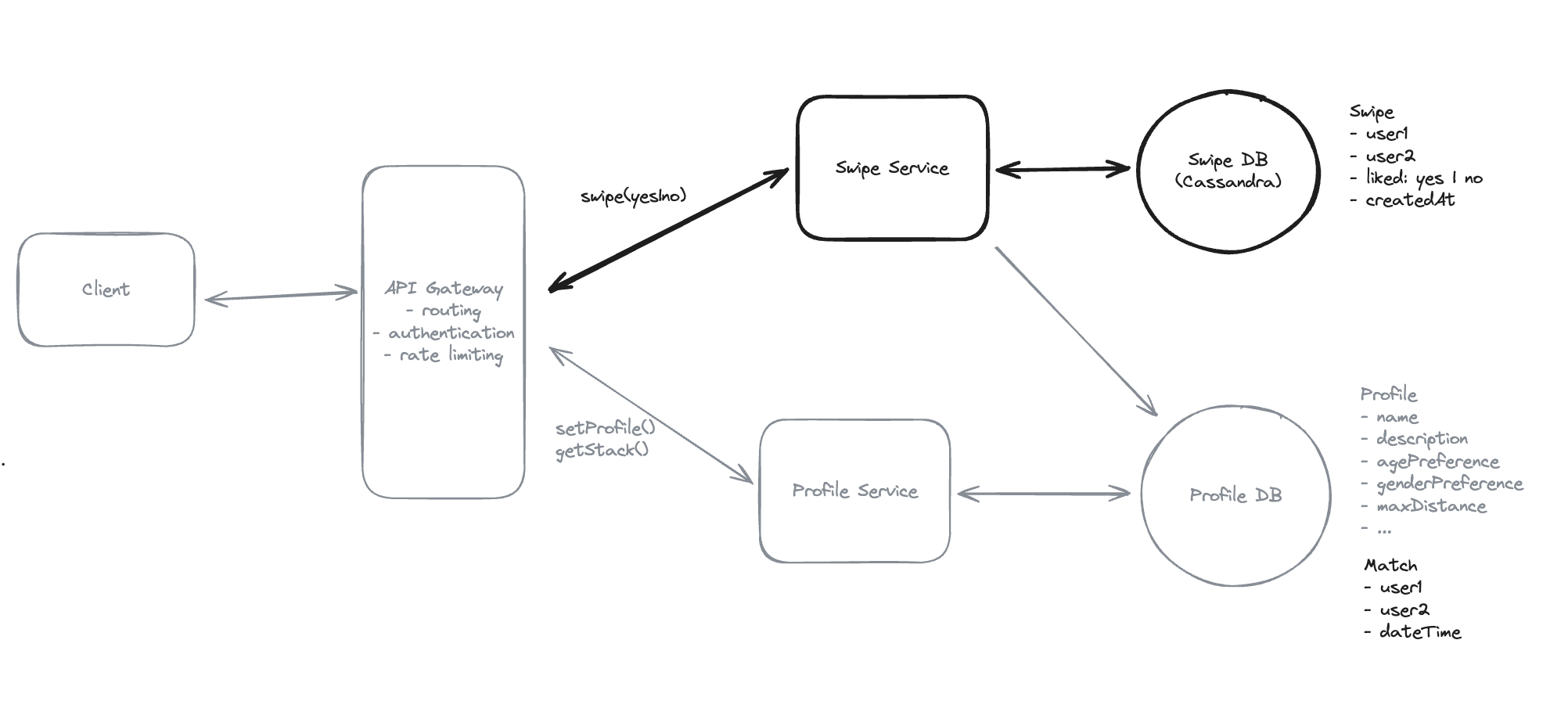

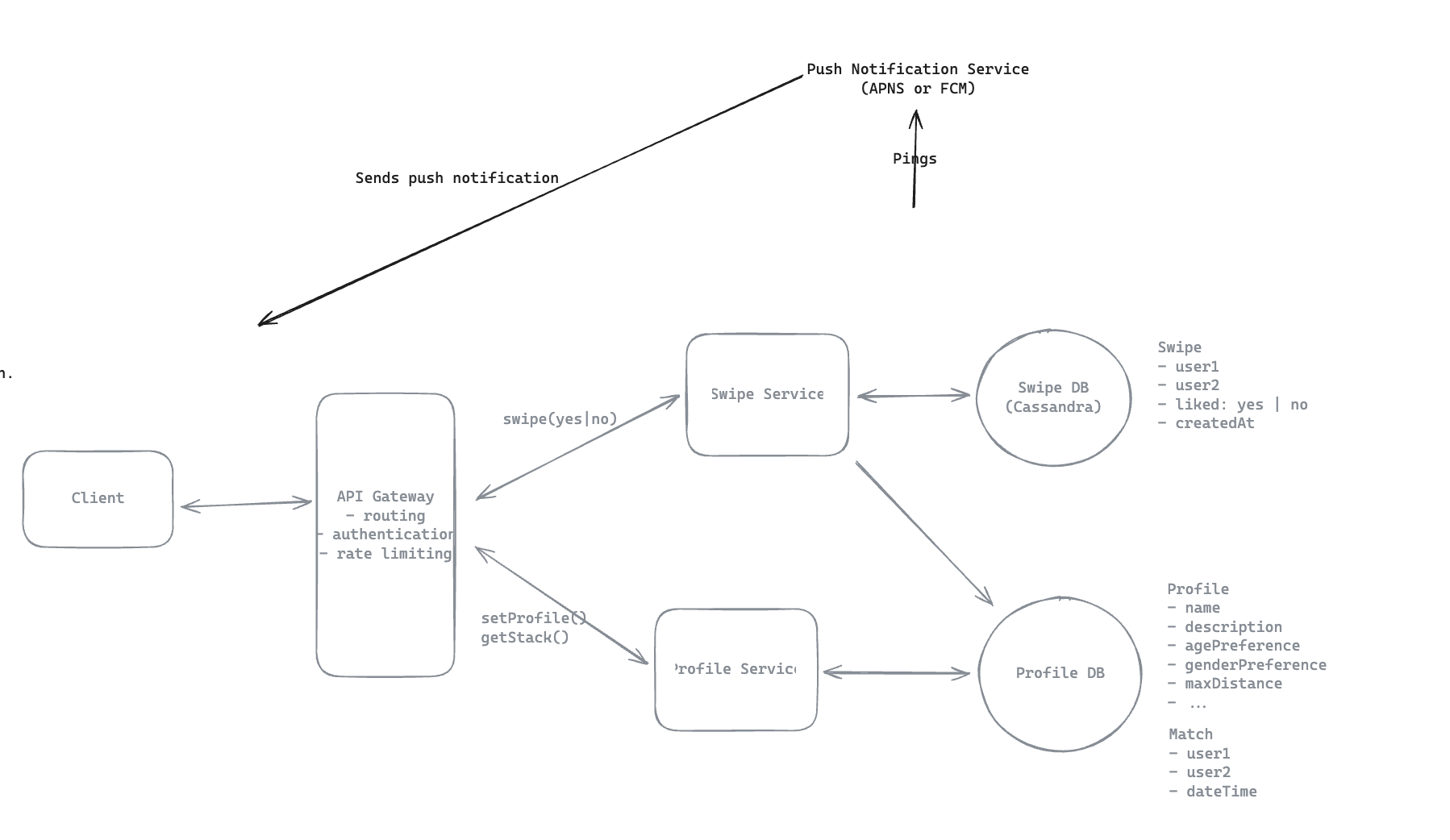

9.7. How will the system register and process user swipes (right/left) to express interest in other users, showing a match if you swipe right (like) on someone who already liked you?

- Using Cassandra for heavy-write.

9.8. The other user needs to know that they have a new match as well, how will your system notify them?

- Using: Apple Push Notification Service (APNs) for iOS or Firebase Cloud Messaging (FCM) for Android

9.9. How would you design the system to ensure that swipe actions are processed both consistently and rapidly, so that when a user likes someone who has already liked them—even if only moments before—they are immediately notified of the match?

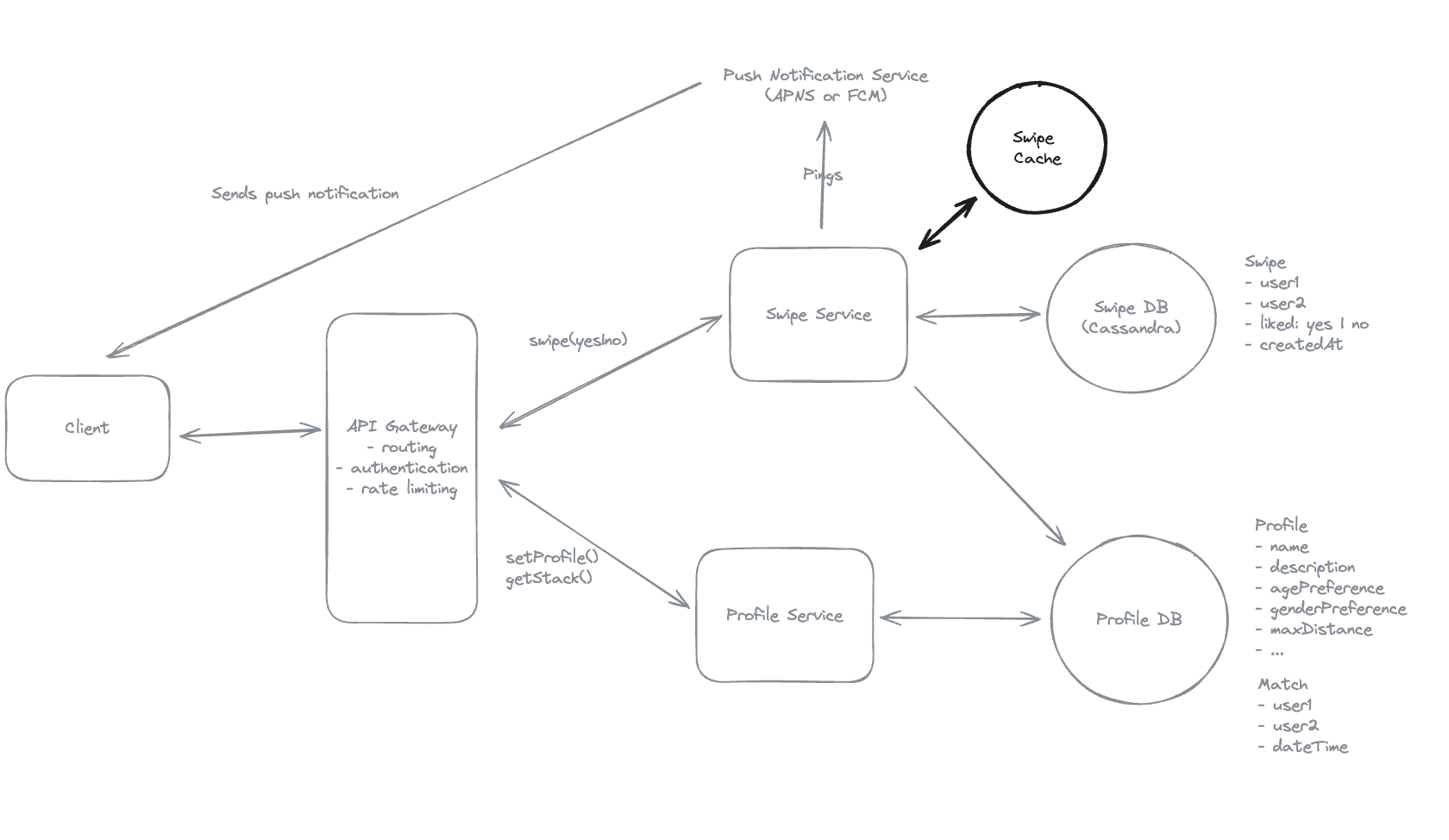

9.10. How can we ensure low latency for feed/stack generation?

9.11. How can the system avoid showing user profiles that the user has previously swiped on?

-

For users with relatively small swipe histories: Using cache to filter out.

-

For users with larger swipe histories: Using bloom filter to check if a profile has likely been swiped on.

-

One trade-off with bloom filters is that they can produce false positives but never false negatives.

-

Bloom Filters: If the users have swiped on => must be never show => pass requirements, but maybe filter out some users have not swiped on.

=> If the Bloom filter says the element is NOT in the set, then it’s definitely not => print(bf.might_contain(“grape”)) == False, it is definately not have in the set. => If the bloom filter says yes, maybe yes or no.

9.12. Redis is single-threaded. Atomic operations prevent race conditions when multiple processes access shared resources concurrently.

- True, Single-threaded do not need to worry about conflicts.

9.13. Which data structure efficiently handles 2D proximity searches for location-based queries?

- Geospatial Index

9.14. Write-optimized databases like Cassandra sacrifice immediate consistency for higher write throughput.

- Write-optimized databases typically use append-only structures (like commit logs) and eventual consistency models to maximize write performance.

9.15. Write buffer size optimization improves write performance by batching disk writes, but doesn’t directly improve read performance.

- Yes

9.16. Bloom filters can produce false positives but never false negatives when testing set membership.

- Yes

9.17. Why do systems use Lua scripts in Redis for atomic operations like swipe matching?

-

Lua scripts in Redis execute atomically on the server side => ensure it happen as a single atomic unit.

-

This prevents race conditions where two users might swipe simultaneously and miss detecting a match.

9.18. Pre-computing and caching data reduces query latency at the cost of potentially serving stale information.

- Yes

9.19. Why do dating apps partition swipe data by sorted user ID pairs (e.g., ‘user123:user456’)?

-

By creating partition keys from sorted user IDs, systems ensure that swipes between any two users (A→B and B→A) always land in the same database partition.

-

This enables single-partition transactions for atomic match detection, avoiding the complexity and performance overhead of distributed transactions.

9.20. Bloom filters are ideal for preventing users from seeing profiles they’ve already swiped on because they never produce false negatives.

- Show the items that have not existed => Bloom Filters.

9.21 (Hay). In systems with high write loads, which consistency model is typically chosen and why?

- Eventual consistency, have bandwidth and resource for write.

9.22. Client-side caching can reduce server load but requires careful invalidation strategies to maintain data accuracy.

- Yes

9.23. Cache Staleness strategy

-

Time-based TTL.

-

Periodic background refresh.

-

Event-driven cache invalidation.

9.24. Consistent hashing minimizes data redistribution when nodes are added or removed from a distributed system.

- Yes

9.25 (Hay). Why do dating apps use geospatial indexes instead of simple latitude/longitude range queries?

-

Range queries don’t account for Earth’s curvature

-

Range queries only work in flat rectangle, not work for curve of the Earth.

-

Geospatial indexes like R-trees account for true geographic distance calculations.

9.26. Using Redis for match detection and Cassandra for durable swipe storage creates consistency challenges that require careful coordination.

-

This dual-storage approach gains Redis’s atomic operations for real-time matching.Cassandra’s durability for historical data, but creates a consistency gap.

-

If Redis fails after detecting a match but before Cassandra persists the swipe, the match could be lost.

10. Patterns

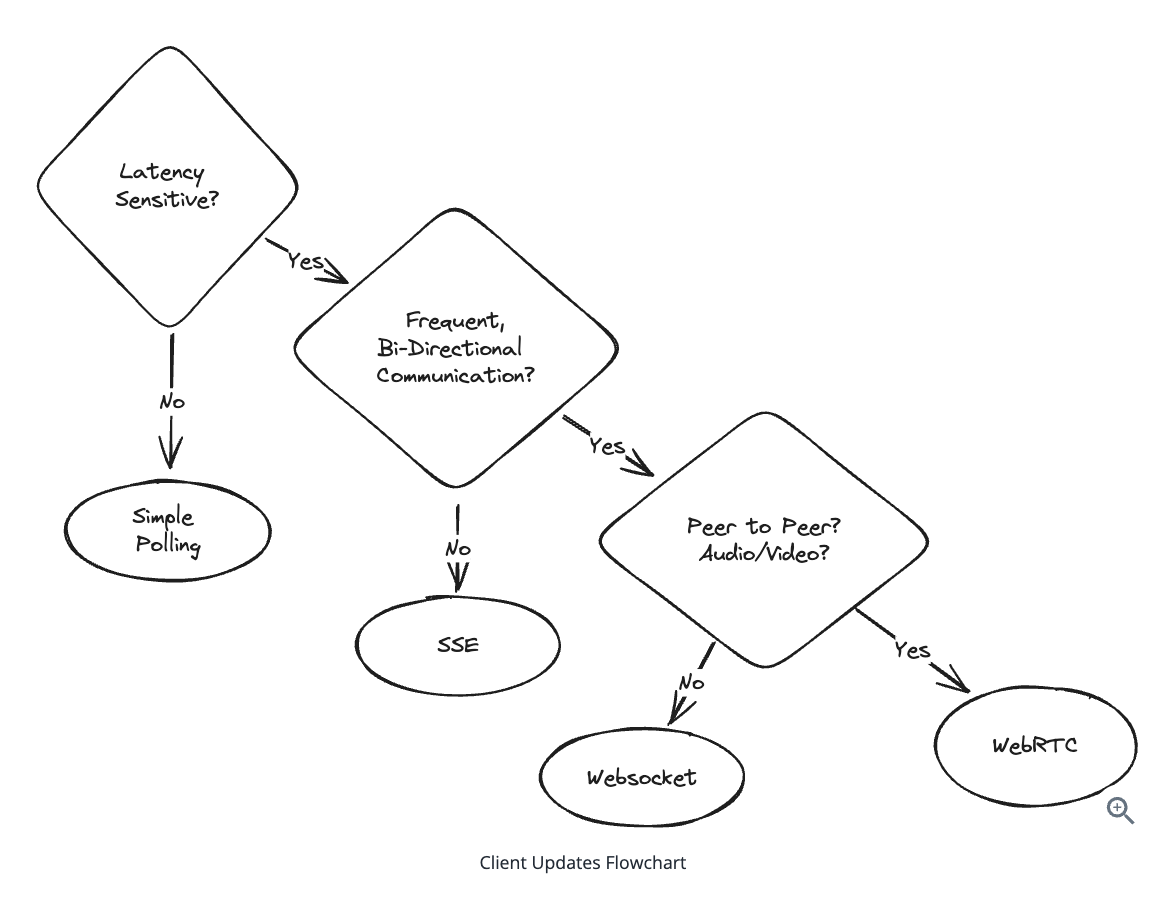

10.1. Real-time Updates

10.1.1. Network Protocol

-

Network Layer (Layer 3):

- Assigns IP addresses (IPv4 or IPv6) to devices.

- Splits large packets into smaller ones if needed.

-

Transport Layer (Layer 4):

-

TCP: connection-oriented protocol: before you can send data, you need to establish a connection with the other side.

-

UDP: connectionless protocol: you can send data to any other IP address on the network without any prior setup.

-

TCP tracks every byte sent and requires ACKs to confirm receipt. If packets get lost, TCP retransmits them. UDP simply doesn’t do this, TCP ensures data arrives in order.

-

-

Application Layer (Layer 7): At the final layer are the application protocols like DNS, HTTP, Websockets, WebRTC => DNS can choose to use TCP or UDP.

TCP Connection

-

When user make connections using TCP -> Three-way handshanking + Make requests + Finish.

-

When a user makes REST requests like GET, POST, or PUT, the underlying transport protocol is typically TCP, can use keep-alive for sessions.

Load Balancers

-

Layer 4 Load Balancers: AWS Network Load Balancer => Load to IP layer

-

Layer 7 Load Balancers: API Gateway => Load HTTP requests.

-

It works together

User Request --> Layer 4 Load Balancer --> Layer 7 Load Balancer --> Backend Server

Internet

↓

Layer 4 Load Balancer (e.g., AWS Network Load Balancer)

↓

Layer 7 Load Balancer (e.g., AWS Application Load Balancer, NGINX)

↓

Backend servers (web servers, APIs, etc.)

10.1.2. Client Updates

1. Simple Polling

Cons:

-

Can cause unnecessary network traffic (lots of requests with no new data).

-

Higher latency for real-time updates because client only checks periodically.

async function poll() {

const response = await fetch('/api/updates');

const data = await response.json();

processData(data);

}

// Poll every 2 seconds

setInterval(poll, 2000);

2. Long Polling

-

Server only requests when it have more data -> Else it hold the requests, client do not need to make requests.

- Step 1: Client makes HTTP request to server

- Step 2: Server holds request open until new data is available

- Step 3: Server responds with data

- Step 4: Client immediately makes new request

- Step 5: Process repeats

-

In simple polling, the client checks for new data only at fixed intervals (e.g., every 5 seconds). If new data arrives just after a check, the client has to wait until the next interval to find out — causing a delay that can be several seconds.

-

In long polling, the client sends a request and the server holds this request open until new data is available.

When the data arrives, the server immediately responds.

-

The client then instantly sends a new request to listen for more updates.

-

Because the server responds as soon as new data is available, the delay between data creation and client notification is minimized—usually just the network latency and processing time.

Cons:

-

More complex to implement.

-

Holding many open connections can strain the server.

Different Long Polling and Web Socket

-

Long polling: HTTP request/response cycle.

-

Web Socket: Persistent, full-duplex TCP

3. Server-Sent Events (SSE)

Cons:

-

Limited browser support EventSource.

-

One-way communication only: Server to Client.

4. WebSocket: The Full-Duplex Champion

Cons:

- More complex to implement.

- Requires special infrastructure.

- Stateful connections, can make load balancing and scaling more complex.

5. WebRTC: The Peer-to-Peer Solution

Use for video calling, peer-to-peer connection.

-

Peers discover each other through signaling server.

-

Exchange connection info (ICE candidates)

-

Establish direct peer connection, using STUN/TURN if needed

-

Stream audio/video or send data directly

WebSocket — When to Use

- Client-server communication: WebSocket creates a persistent, full-duplex connection between a client and a server.

WebRTC — When to Use

- Peer-to-peer communication: WebRTC enables direct, low-latency peer-to-peer connections between browsers/devices.

const config = {

iceServers: [{ urls: 'stun:stun.l.google.com:19302' }],

};

-

STUN server: Helps peers find their public IP addresses to connect through NATs/firewalls.

-

TURN server: Relays media/data if a direct peer-to-peer connection can’t be established (e.g., due to restrictive NATs).

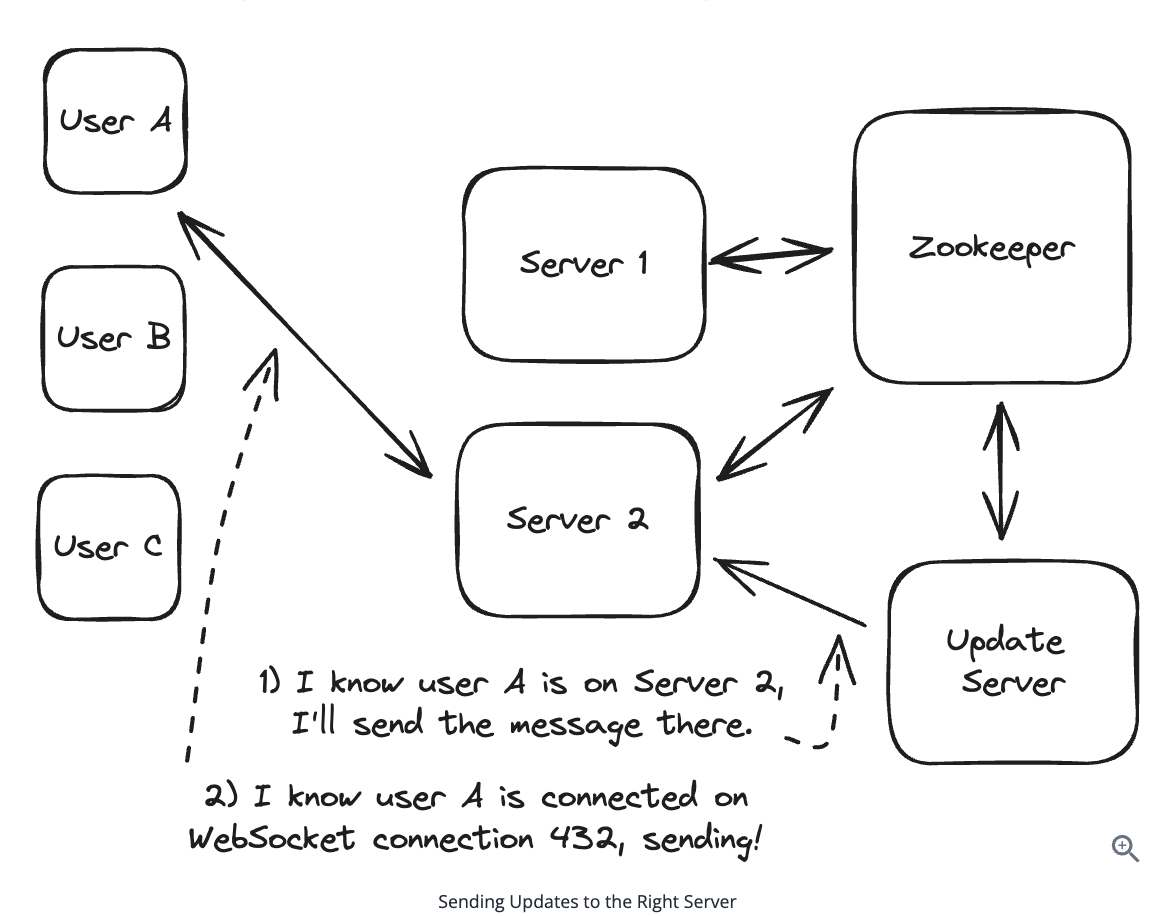

10.1.3. Server Pull/Push

- Pulling with Simple Polling

Cons:

- High latency.

- Excess DB load when updates are infrequent and polling is frequent.

- Pushing via Consistent Hashes

- Each server -> Each client fixed.

Zookeepers is used to store metadata of clients and servers.

Cons:

-

Complex to implement correctly

-

Requires coordination service (like Zookeeper)

-

All servers need to maintain routing information

When to use:

-

WebSocket: stateful

-

Consistent hashing is ideal when you need to maintain persistent connections (WebSocket/SSE) and your system needs to scale dynamically, state ‘on’, ‘off’.

- Pushing via Pub/Sub

Cons:

- The Pub/Sub service becomes a single point of failure and bottleneck.

10.1.4. WebSockets require every intermediary (load balancers, proxies) between client and server to support the upgrade handshake.

- Yes

10.1.5. Which load balancer type (per the OSI model) is generally preferred when terminating long-lived WebSocket connections?

- L4 balancers operate at the TCP layer and preserve the single underlying connection, avoiding issues some L7 proxies have with WebSocket stickiness.

10.1.6. Consistent hashing reduces connection churn when scaling endpoint servers up or down.

-

True because it auto sharding to another nodes in ring architecture.

-

Only the keys mapping to the portion of the hash ring owned by added/removed nodes move, so most clients stay put.

10.1.7. In a pub/sub architecture, which component is responsible for routing published messages to all active subscribers?

- Message Queue.

10.1.8. WebRTC always guarantees a direct peer-to-peer path between browsers without relays.

-

Success: STUN: Help a client find its public IP address and port as seen from the internet (outside its NAT) - Session Traversal Utilities for NAT

-

Failed (Fallback): TURN: Relay data between peers when direct connection isn’t possible - Traversal Using Relays around NAT

-

When NAT traversal fails, TURN relays forward traffic, so not all flows are purely peer-to-peer.

10.1.9. Using a “least connections” strategy at the load balancer helps distribute WebSocket clients more evenly across endpoint servers.

- Yes

10.1.10. When would you MOST LIKELY choose consistent hashing over a pub/sub approach on the server side?

- If per-client state is heavy, pinning that user to one server (via hashing) avoids expensive state transfer and cache misses => Fixed some client state to server to save resources.

10.1.11. SSE streams can be buffered by misconfigured proxies, delaying updates to clients even though the server flushes chunks immediately.

- Proxies that don’t support Transfer-Encoding: chunked may wait for the full response before forwarding, defeating streaming semantics.

10.2. Dealing with Contention

10.2.1. Transaction

BEGIN TRANSACTION;

-- Debit Alice's account

UPDATE accounts SET balance = balance - 100 WHERE user_id = 'alice';

-- Credit Bob's account

UPDATE accounts SET balance = balance + 100 WHERE user_id = 'bob';

COMMIT; -- Both operations succeed together

Transaction

BEGIN TRANSACTION;

-- Check and reserve the seat

UPDATE concerts

SET available_seats = available_seats - 1

WHERE concert_id = 'weeknd_tour'

AND available_seats >= 1;

-- Create the ticket record

INSERT INTO tickets (user_id, concert_id, seat_number, purchase_time)

VALUES ('user123', 'weeknd_tour', 'A15', NOW());

COMMIT;

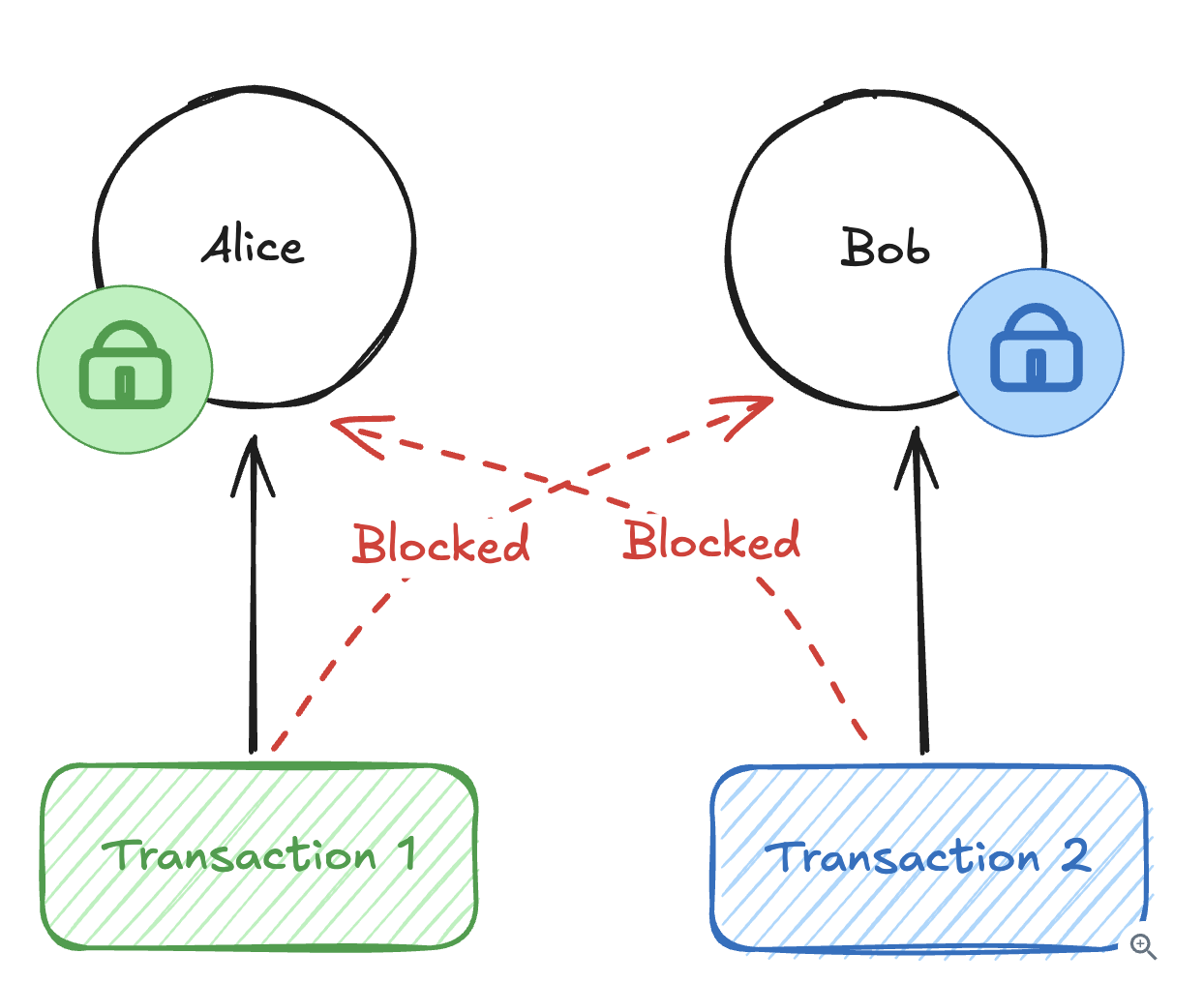

10.2.2. Pessimistic Locking

- “Pessimistic” about conflicts - assuming they will happen and preventing them.

When a transaction or thread wants to access a resource (like a row in a table), it locks it before reading or writing. Other transactions/threads must wait until the lock is released.

Types of Locks:

-

Read Lock (Shared Lock): Others can also read, but not write.

-

Write Lock (Exclusive Lock): Others can’t read or write.

BEGIN;

SELECT * FROM accounts WHERE id = 101 FOR UPDATE;

-- Do some update here

UPDATE accounts SET balance = balance - 100 WHERE id = 101;

COMMIT;

optimi

10.2.3. Optimistic locking

Update success

UPDATE accounts

SET balance = 900, version = 4

WHERE id = 1 AND version = 3;

Update failed

UPDATE accounts

SET balance = 800, version = 4

WHERE id = 1 AND version = 3;

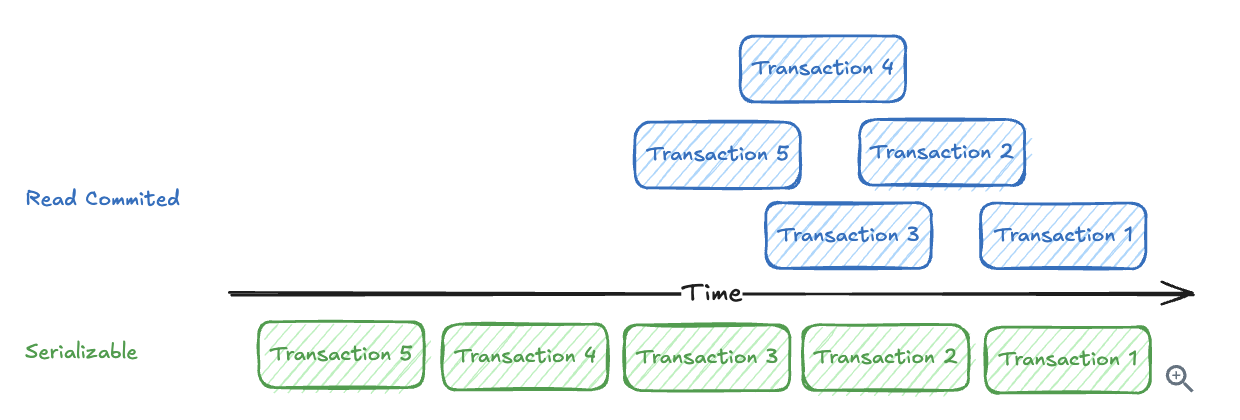

10.2.4. Isolation Levels

-

READ UNCOMMITTED: Can see uncommitted changes from other transactions (rarely used)

-

READ COMMITTED: Can only see committed changes (default in PostgreSQL)

-

REPEATABLE READ: Same data read multiple times within a transaction stays consistent (default in MySQL)

-

SERIALIZABLE: Strongest isolation, transactions appear to run one after another

The defaults of either READ COMMITTED or REPEATABLE READ => still allows our concert ticket race condition because both Alice and Bob can read “1 seat available” simultaneously before updating.

Solution: Using SERIALIZABLE

-- Set isolation level for this transaction

BEGIN TRANSACTION ISOLATION LEVEL SERIALIZABLE;

UPDATE concerts

SET available_seats = available_seats - 1

WHERE concert_id = 'weeknd_tour'

AND available_seats >= 1;

-- Create the ticket record

INSERT INTO tickets (user_id, concert_id, seat_number, purchase_time)

VALUES ('user123', 'weeknd_tour', 'A15', NOW());

COMMIT;

With SERIALIZABLE, the database automatically detects conflicts and aborts one transaction if they would interfere with each other.

10.2.5. Optimistic Concurrency Control

-- Alice reads: concert has 1 seat, version 42

-- Bob reads: concert has 1 seat, version 42

-- Alice tries to update first:

BEGIN TRANSACTION;

UPDATE concerts

SET available_seats = available_seats - 1, version = version + 1

WHERE concert_id = 'weeknd_tour'

AND version = 42; -- Expected version

INSERT INTO tickets (user_id, concert_id, seat_number, purchase_time)

VALUES ('alice', 'weeknd_tour', 'A15', NOW());

COMMIT;

-- Alice's update succeeds, seats = 0, version = 43

-- Bob tries to update:

BEGIN TRANSACTION;

UPDATE concerts

SET available_seats = available_seats - 1, version = version + 1

WHERE concert_id = 'weeknd_tour'

AND version = 42; -- Stale version!

-- Bob's update affects 0 rows - conflict detected, transaction rolls back

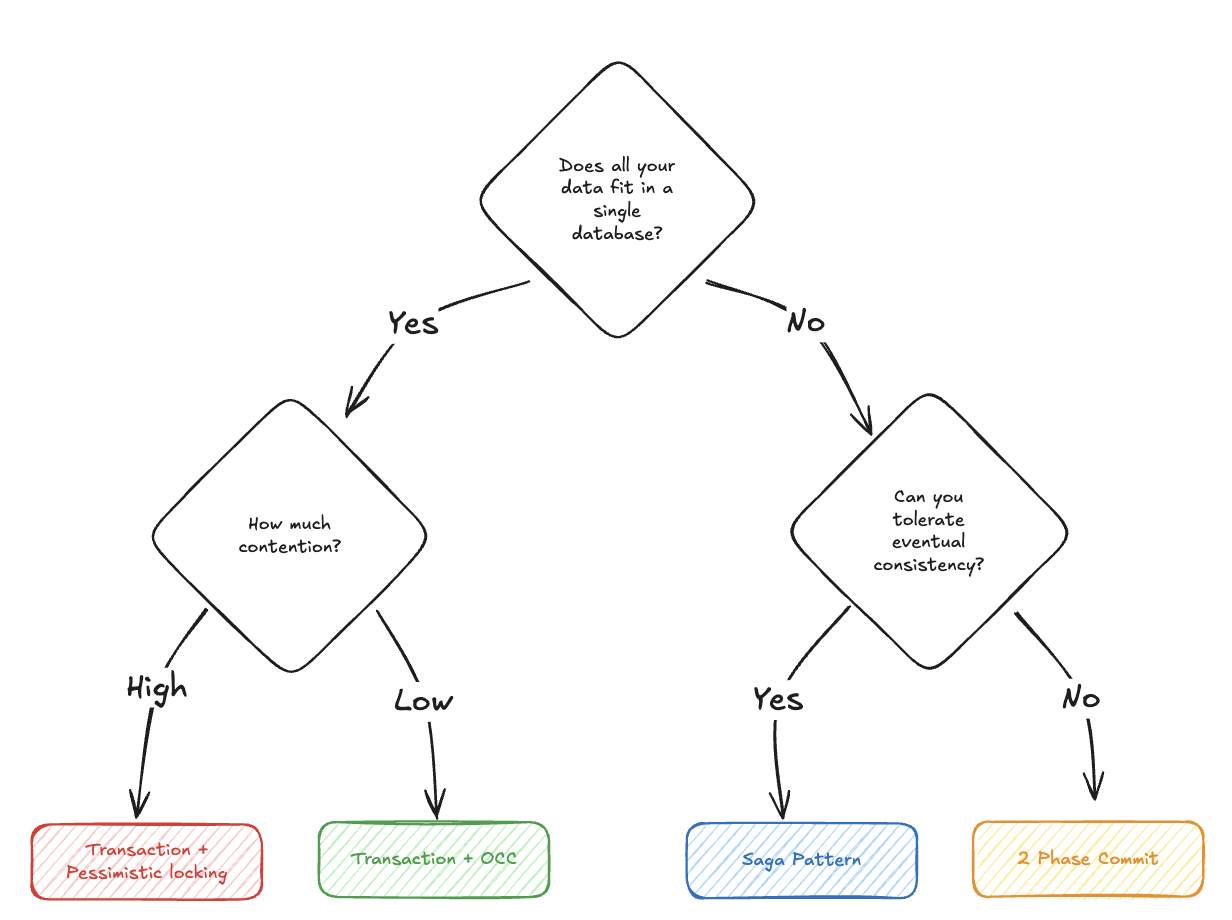

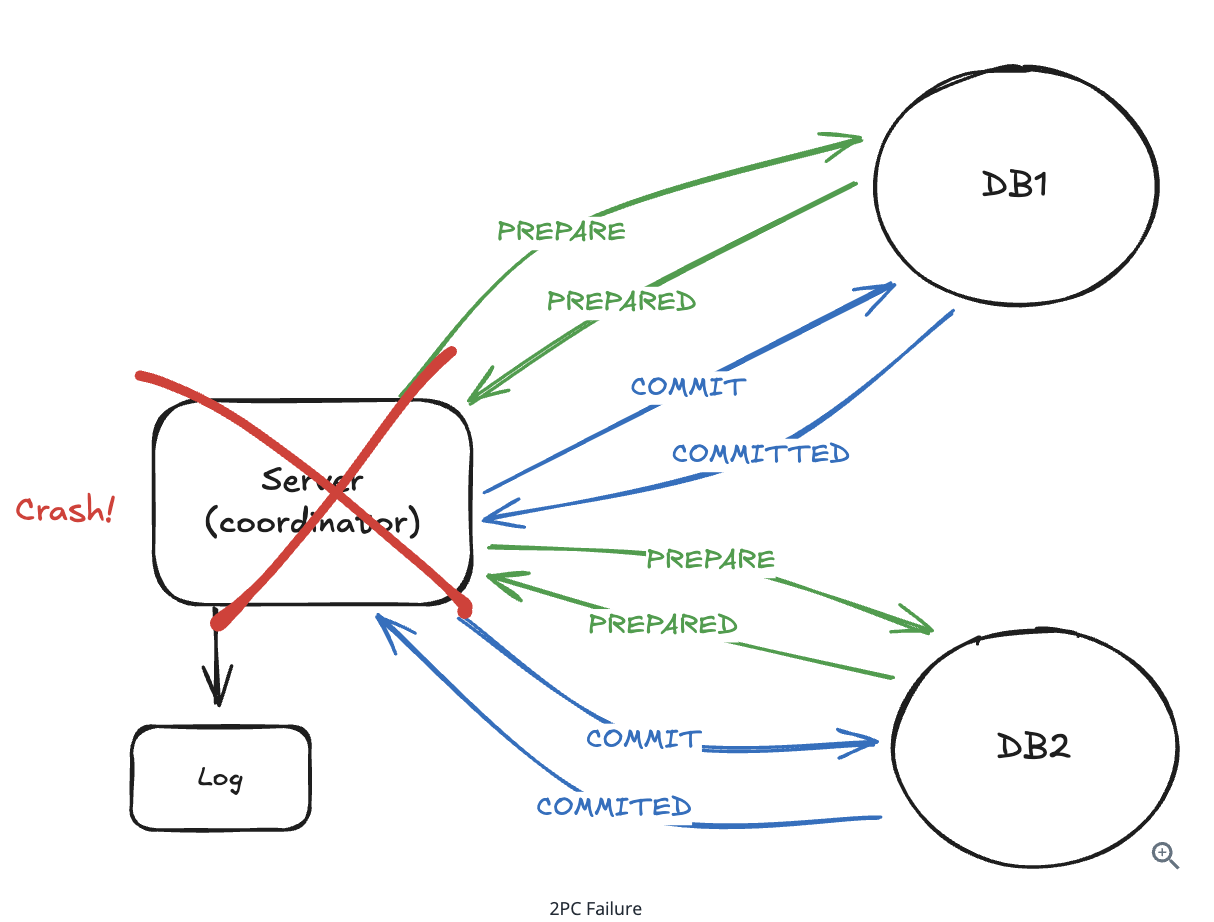

10.2.6. Multiple nodes

Two-phrase commit

- Step 1: Prepare, transaction stays open, waiting for coordinator’s decision

-- Database A during prepare phase

BEGIN TRANSACTION;

SELECT balance FROM accounts WHERE user_id = 'alice' FOR UPDATE;

-- Check if balance >= 100

UPDATE accounts SET balance = balance - 100 WHERE user_id = 'alice';

-- Transaction stays open, waiting for coordinator's decision

-- Database B during prepare phase

BEGIN TRANSACTION;

SELECT * FROM accounts WHERE user_id = 'bob' FOR UPDATE;

-- Verify account exists and is active

UPDATE accounts SET balance = balance + 100 WHERE user_id = 'bob';

-- Transaction stays open, waiting for coordinator's decision

- Step 2: Coordinator commit.

Distributed Locks

-

Redis with TTL

-

Database columns: Add status, expiration column.

-

ZooKeeper/etcd: Use coordination service to distribued lock. Both systems use consensus algorithms (Raft for etcd, ZAB for ZooKeeper) to maintain consistency across multiple nodes.

Saga Pattern

-

Step 1 - Debit $100 from Alice’s account in Database A, commit immediately

-

Step 2 - Credit $100 to Bob’s account in Database B, commit immediately

-

Step 3 - Send confirmation notifications and adjust rollup or commit.

Cons:

-

During saga execution, the system is temporarily inconsistent. After Step 1 completes, Alice’s account is debited but Bob’s account isn’t credited yet.

-

Other processes might see Alice’s balance as $100 lower during this window. If someone checks the total money in the system, it appears to have decreased temporarily.

When to use

Here are some bang on examples of when you might need to use contention patterns:

-

Multiple users competing for limited resources: concert tickets, auction bidding, flash sale inventory, or matching drivers with riders

-

Prevent double-booking or double-charging in scenarios: payment processing, seat reservations, or meeting room scheduling.

-

Ensure data consistency under high concurrency for operations: account balance updates, inventory management, or collaborative editing

-

Handle race conditions in distributed systems: where the same operation might happen simultaneously across multiple servers and where the outcome is sensitive to the order of operations.

When not to use:

- Read-heavy workloads: Handle write conflicts -> Impact read performance.

10.2.7. How do you prevent deadlocks with pessimistic locking?

-

Alice wants to transfer $100 to Bob, while Bob simultaneously wants to transfer $50 to Alice.

-

Transaction A locks Alice’s account first, then tries to lock Bob’s account.

-

Transaction B locks Bob’s account first, then tries to lock Alice’s account. Both transactions wait forever for the other to release their lock.

Solution:

-

Sort the resources you need to lock by some deterministic key like user ID, database primary key, or even memory address.

-

If you need to lock users 123 and 456, always lock 123 first even if your business logic processes 456 first.

-

Use database timeout for fallback => Most modern databases also have automatic deadlock detection that kills one transaction when cycles are detected.

10.2.8. What if your coordinator service crashes during a distributed transaction in 2-Phrase Commit ?

Production systems handle this with coordinator failover and transaction recovery

=> When a new coordinator starts up, it reads persistent logs to determine which transactions were in-flight and completes them.

10.2.9. How do you handle the ABA problem with optimistic concurrency

- Update the review_count for user know the problem.

-- Use review count as the "version" since it always increases

UPDATE restaurants

SET avg_rating = 4.1, review_count = review_count + 1

WHERE restaurant_id = 'pizza_palace'

AND review_count = 100; -- Expected current count

10.2.10. What about performance when everyone wants the same resource

10.2.11. What does the SQL ‘FOR UPDATE’ clause accomplish?

-

Exclusive lock: write.

-

Shared lock: read.

10.2.12. Pessimistic locking more efficient when conflicts are frequent.

- Optimistic concurrency control works best when conflicts are rare, multiple conflicts => multiple retries.

- Frequent conflicts mean lots of retries. making pessimistic locking more efficient.

10.2.13. In two-phase commit, transactions can stay open across network calls.

- This is actually a dangerous aspect of 2PC - open transactions hold locks during network coordination, which can cause problems if the coordinator crashes.

10.2.14. What is the standard solution for preventing deadlocks with pessimistic locking?

- Always acquire locks in a consistent order

10.2.15. Which approach works best for handling the ‘hot partition’ problem where everyone wants the same resource?

- Implement queue-based serialization

10.2.16. When should you choose pessimistic locking over optimistic concurrency control?

- Optimistic approaches that require rollback => Reduce the rollback rates.

10.2.17. What is the main advantage of keeping contended data in a single database?

- ACID transaction.

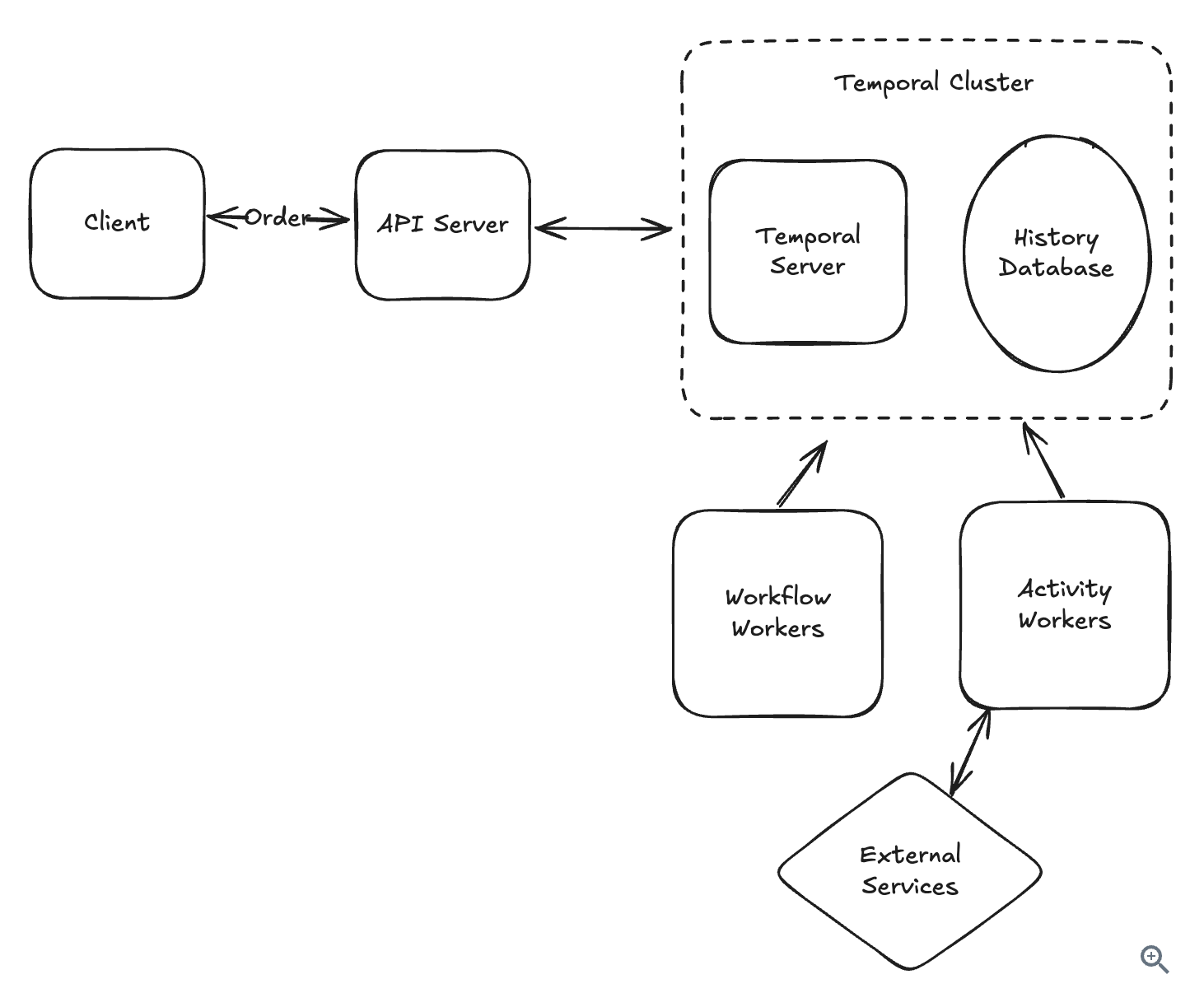

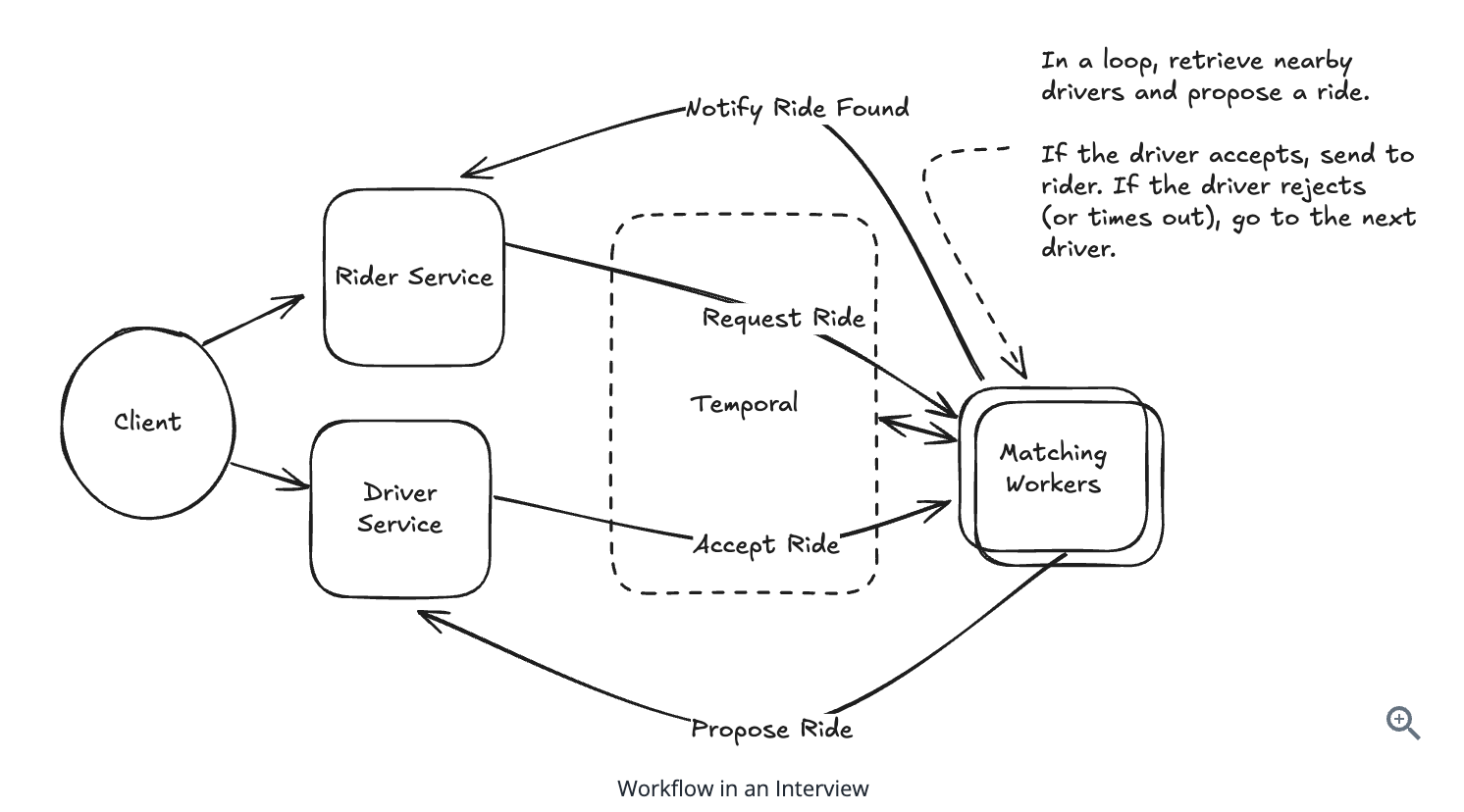

10.3. Multi-step Processes

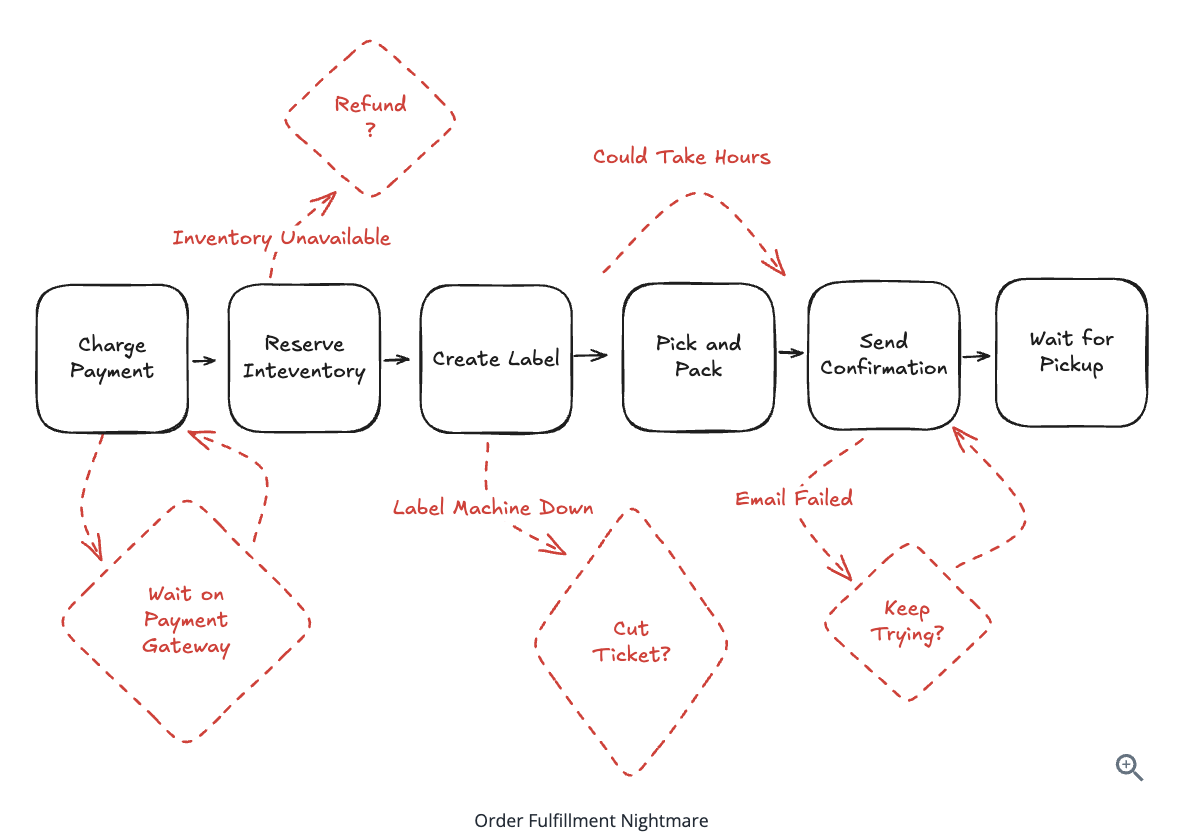

10.3.1. Order Messy State in real-world

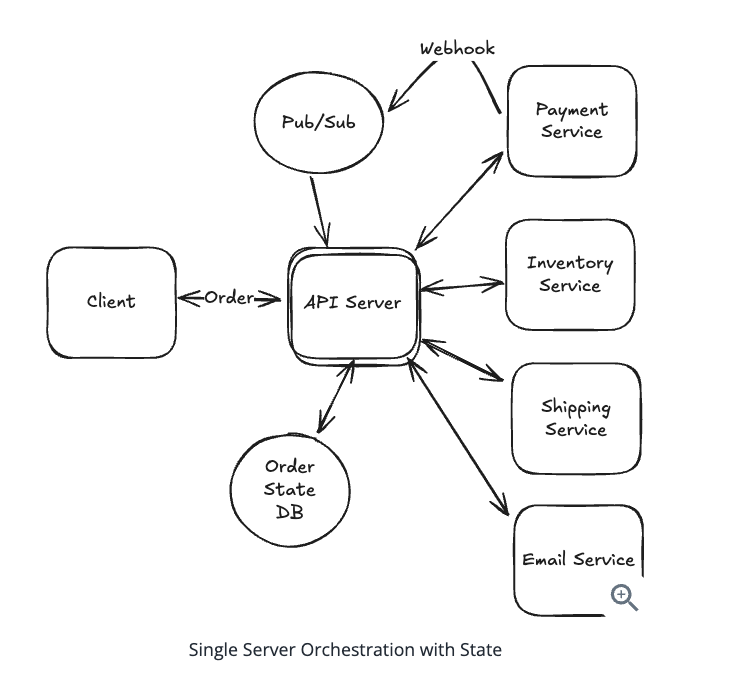

10.3.2. Single Server Orchestration

- Cons: It have to manually config event state and handle failure in each service.

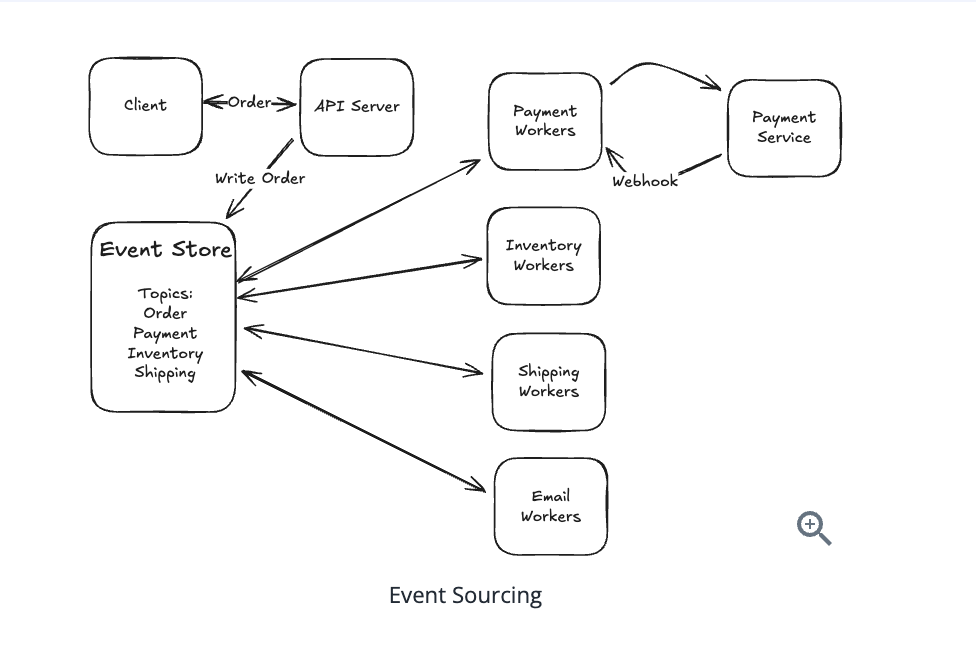

10.3.3. Event Sourcing

- Implement event sourcing patterns.

10.3.4. Workflow Management

-

Manage state of transaction.

-

Query transaction by time.

Use for payment system, rail-hailing system.

10.3.5. How will you handle updates to the workflow ?

-

Workflow Versioning.

-

Workflow Migrations

10.3.6. How do we keep the workflow state size in check

-

First, we should try to minimize the size of the activity input and results.

-

Second, we can keep our workflows lean by periodically recreating them. If you have a long-running workflow with lots of history, you can periodically recreate the workflow from the beginning, passing only the required inputs to the new workflow to keep going.

10.3.7. How do we deal with external events

-

External systems send signals through the workflow engine’s API. The workflow suspends efficiently - no polling, no resource consumption.

-

This pattern handles human tasks, webhook callbacks, and integration with external systems.

10.3.8. How can we ensure X step runs exactly once

-

Most workflow systems provide a way to ensure an activity runs exactly once … for a very specific definition of “run”.

-

The solution is to make the activity idempotent. This means that the activity can be called multiple times with the same inputs and get the same result.

10.3.9. What is the primary challenge that multi-step processes address in distributed systems?

- Coordinating multiple services reliably across failures and retries

10.3.10. What is the key principle behind event sourcing?

- Store a sequence of events that represent what happened

10.3.11. In event sourcing architecture, what triggers the next step in a workflow?

- Workers consuming events from the event store => change state.

10.3.12. Workflow systems eliminate the need for building custom infrastructure for state management and orchestration.

- Workflow systems and durable execution engines provide the benefits of event sourcing and state management => without requiring you to build the infrastructure yourself.

10.3.13. In Temporal workflows, what is the key requirement for workflows vs activities?

-

Workflows must be deterministic (same inputs and history produce same decisions) to enable replay-based recovery.

-

Activities must be idempotent (can be called multiple times with same result) but won’t be retried once they succeed.

10.3.14. Temporal uses a history database to remember activity results during workflow replay.

-

Each activity run is recorded in a history database.

-

If a workflow runner crashes, another runner can replay the workflow and use the history to remember what happened with each activity invocation.

10.3.15. How do managed workflow systems like AWS Step Functions differ from durable execution engines like Temporal?

- They use declarative definitions (JSON/YAML) instead of code

10.3.16. How do workflow systems handle external events like waiting for user input?

- Workflows use signals to wait for external events efficiently.

10.3.17. Apache Airflow is better suited for event-driven, long-running user-facing workflows than scheduled batch workflows

- Apache Airflow excels at scheduled batch workflows like ETL and data pipelines, do not use for user-facing workflows.

10.4. Scaling Reads

10.4.1. Problem

Consider an Instagram feed. When you open the app, you’re immediately hit with dozens of photos, each requiring multiple database queries to fetch the image metadata, user information, like counts, and comment previews. That’s potentially 100+ read operations just to load your feed.

Meanwhile, you might only post one photo per day - a single write operation.

=> It’s not code problem, it’s physics problems about CPU, data, and disk I/O is bounded

10.4.2. Solution

-

Optimize read performance within your database

-

Scale your database horizontally

-

Add external caching layers

10.4.3. Optimize read performance within your database

-

Indexing.

-

Hardware Upgrades.

-

Denormalization Strategies.

-

Materialized views: Precomputing expensive aggregations

10.4.4. Scale your database horizontally

-

Read Replicas.

-

Database Sharding

-

Geographic sharding.

10.4.5. Add External Caching Layers

-

Application-Level Caching: Redis, Memcache.

-

CDN.

10.4.6. Do not apply for write-heavy system

10.4.7. What happens when your queries start taking longer as your dataset grows ?

-

Add index.

-

Using EXPLAIN to check.

10.4.8. How do you handle millions of concurrent reads for the same cached data - Hotpots Problem ?

-

Request coalescing: Query data for first requests and add to memcache.

-

Distributed load: feed:taylor-swift:1, feed:taylor-swift:2 => in multiple reading shards.

10.4.9. What happens when multiple requests try to rebuild an expired cache entry simultaneously?

- When that hour passes and the entry expires, all 100,000 requests suddenly see a cache miss in the same instant.

=> Your database, sized to handle maybe 1,000 queries per second during normal cache misses, suddenly gets hit with 100,000 identical queries.

-

Solution:

-

Distributed Cache: Only first requests hit the database => Lock other requests, consider it will peak the server.

-

Stale-while-revalidate: This refreshes cache entries before they expire, but not all at once.

-

10.4.10. How do you handle cache invalidation when data updates need to be immediately visible?

-

Write-through caching: Change the value of cache when updating.

-

Delete item in cache, after a time => query db and update cache.

-

CDN Caching: More complexity, using TTLs in edge caching.

10.4.11. Modern hardware and database engines handle write well-designed multiple indexes efficiently.

10.4.12. What is the main trade-off when using denormalization for read scaling?

-

Increased storage for faster reads

-

Denormalization trades storage space for read speed by storing redundant data to avoid expensive joins.

10.4.13. What is the key challenge with read replicas in leader-follower replication?

- Replication lag causing potentially stale reads

10.4.14. Synchronous replication ensures consistency but introduces latency, while asynchronous replication is faster but potentially serves stale data.

- Yes

10.4.15. When does functional sharding make sense for read scaling?

- When different business domains have different access patterns

10.4.16. Why do most applications benefit significantly from caching?

- Access patterns are highly skewed - popular content gets requested repeatedly

10.4.17. CDNs only make sense for data accessed by multiple users.

10.4.18. How should cache TTL be determined?

- Based on non-functional requirements, such as: search results should be no more than 30 seconds stale

10.4.19. What is the key benefit of request coalescing for handling hot keys?

- Request coalescing ensures that even if millions of users want the same data simultaneously, your backend only receives N requests - one per application server doing the coalescing.

10.4.20. What is the ‘stale-while-revalidate’ pattern for cache stampede prevention?

- Serving old data while refreshing in the background

10.4.21. Cache versioning avoids invalidation complexity by changing cache keys when data updates, making old entries naturally unreachable.

- Yes

10.5. Scaling Writes

10.5.1. Problem

- Bursty, high-throughput writes with lots of contention is a problem.

10.5.2. Solution

-

Vertical Scaling and Write Optimization

-

Sharding and Partitioning

-

Handling Bursts with Queues and Load Shedding

-

Batching and Hierarchical Aggregation

10.5.3. Vertical Scaling and Database types (Different Write Strategy)

-

Vertical Scaling

-

Database Choices: Cassandra write-heavy database, handle 10k rps rather than 1000 rps of DBMS.

-

Cassandra achieves superior write throughput through its append-only commit log architecture, instead of updating data in place.

Database types:

-

Time-series databases

-

Log-structured databases

-

Column stores

Others:

-

Disable expensive features like foreign key constraints, complex triggers, or full-text search indexing during high-write periods

-

Tune write-ahead logging - databases like PostgreSQL can batch multiple transactions before flushing to disk

-

Reduce index overhead - fewer indexes mean faster writes, though you’ll pay for it on reads

10.5.4. Why we do not use Horizontal Scaling for write-heavy database

-

Writes need to be consistent across all nodes.

-

In a distributed setup, synchronizing writes across nodes introduces latency and increases the chance of conflicts.

-

Horizontal Scaling for read-heavy.

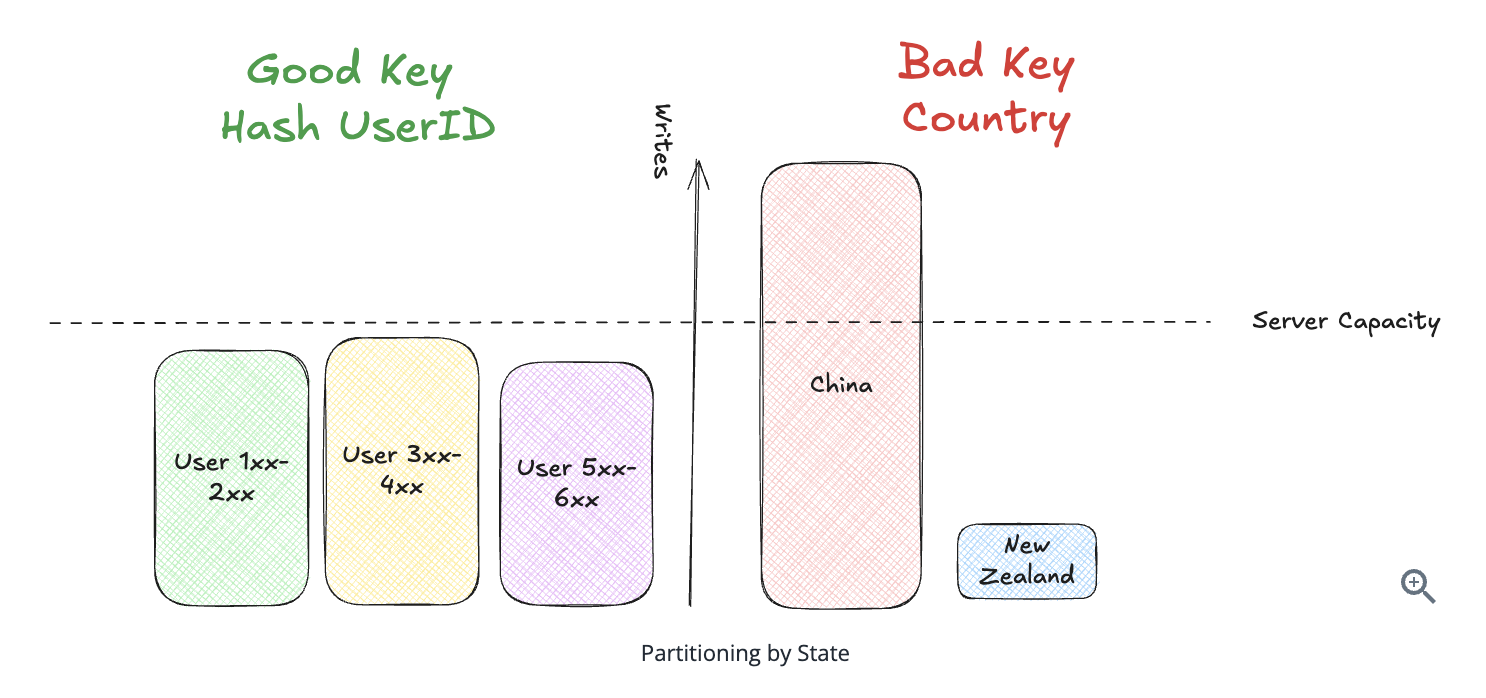

10.5.5. Sharding and Partitioning

Horizontal Sharding

- Selecting a Good Partitioning Key

Vertical Sharding

- Split by table view, some table heavy-write, some table heavy-read, other append-once, time-series

For Post content we’ll use traditional B-tree indexes and is optimized for read performance For Post metrics we might use in-memory storage or specialized counters for high-frequency updates For Post analytics we can use time-series optimized storage or database with column-oriented compression

-- Core post content (write-once, read-many)

TABLE post_content (

post_id BIGINT PRIMARY KEY,

user_id BIGINT,

content TEXT,

media_urls TEXT[],

created_at TIMESTAMP

);

-- Engagement metrics (high-frequency writes)

TABLE post_metrics (

post_id BIGINT PRIMARY KEY,

like_count INTEGER DEFAULT 0,

comment_count INTEGER DEFAULT 0,

share_count INTEGER DEFAULT 0,

view_count INTEGER DEFAULT 0,

last_updated TIMESTAMP

);

-- Analytics data (append-only, time-series)

TABLE post_analytics (

post_id BIGINT,

event_type VARCHAR(50),

timestamp TIMESTAMP,

user_id BIGINT,

metadata JSONB

);

-

For Post content: we’ll use traditional B-tree indexes and is optimized for read performance

-

For Post metrics: we might use in-memory storage or specialized counters for high-frequency updates

-

For Post analytics: we can use time-series optimized storage or database with column-oriented compression

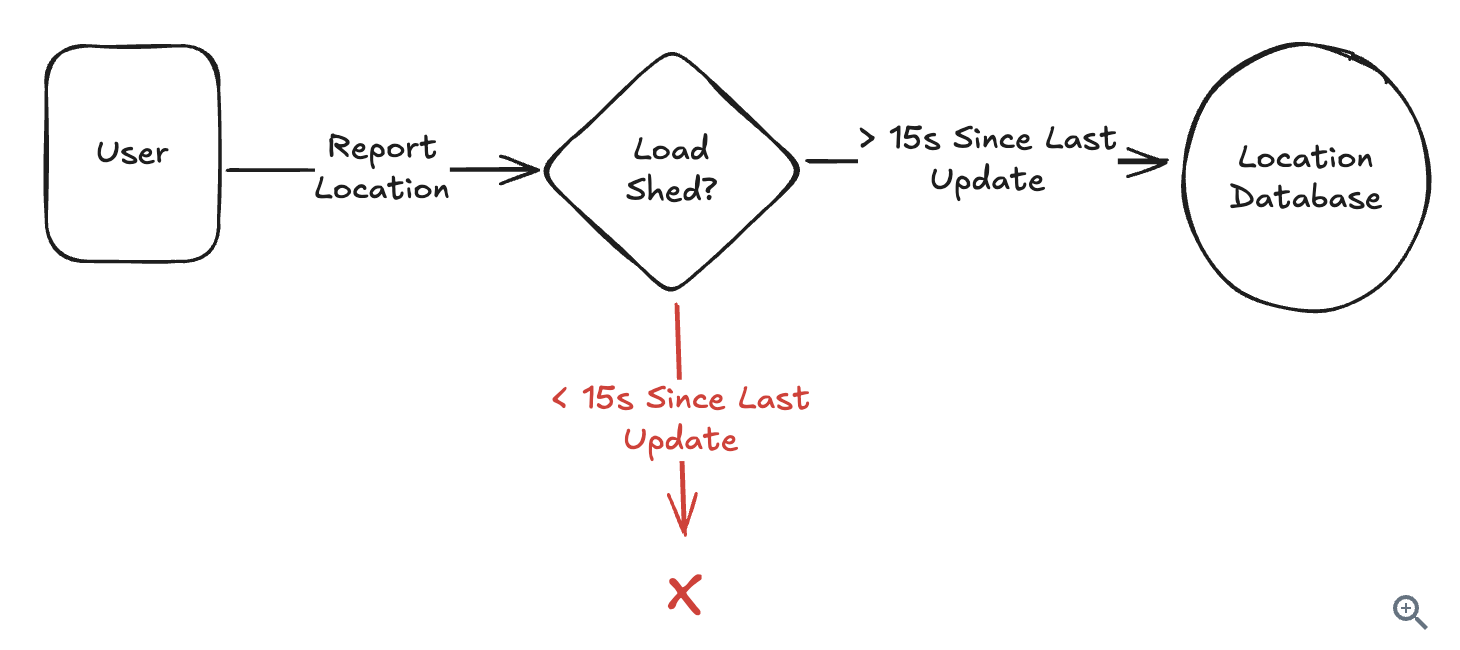

10.5.6. Handling Bursts with Queues and Load Shedding

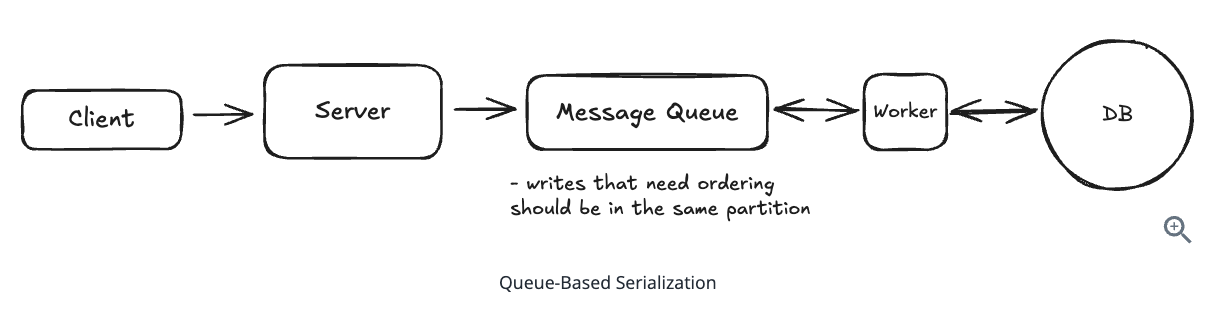

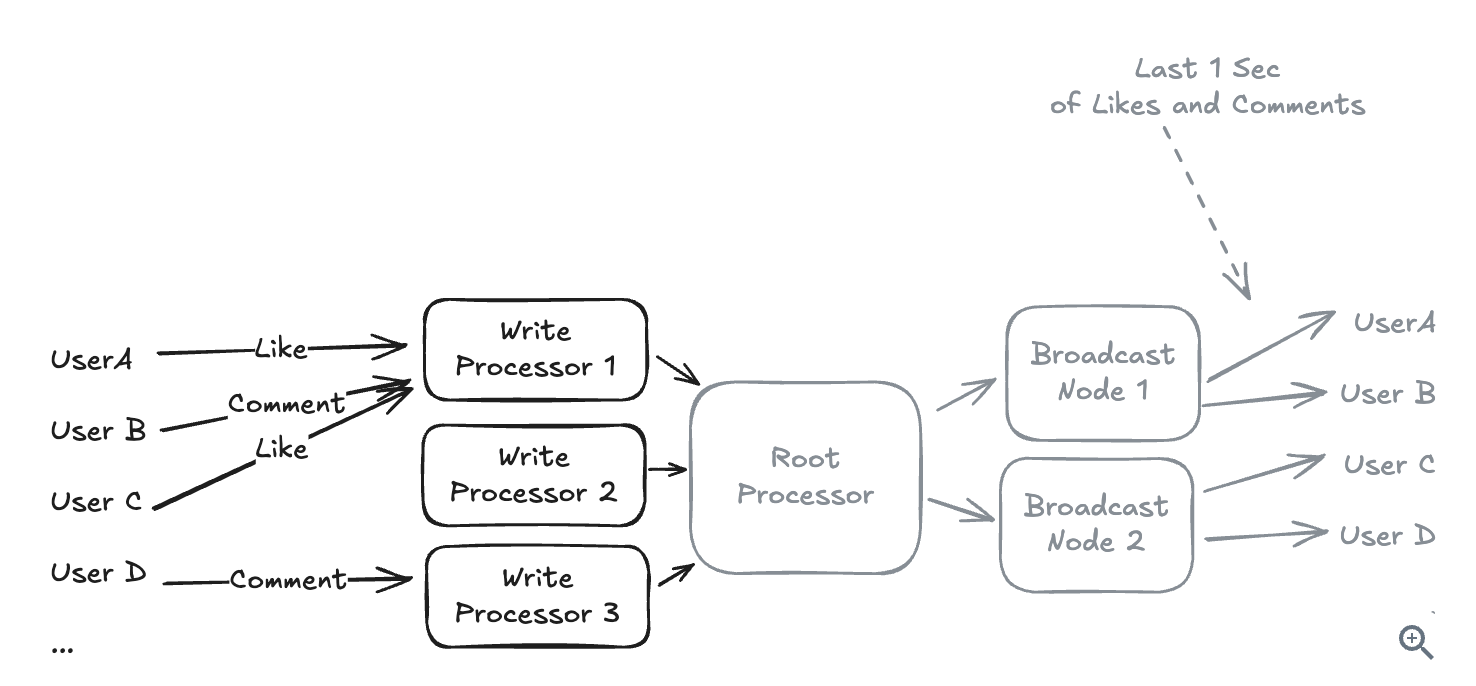

Write Queues for Burst Handling

- This approach provides a few benefits, but the most important is burst absorption: the queue acts as a buffer, smoothing out traffic spikes.

Load Shedding Strategies

-

When your system is overwhelmed, you need to decide which writes to accept and which to reject.

-

This is called load shedding, and it’s better than letting everything fail.

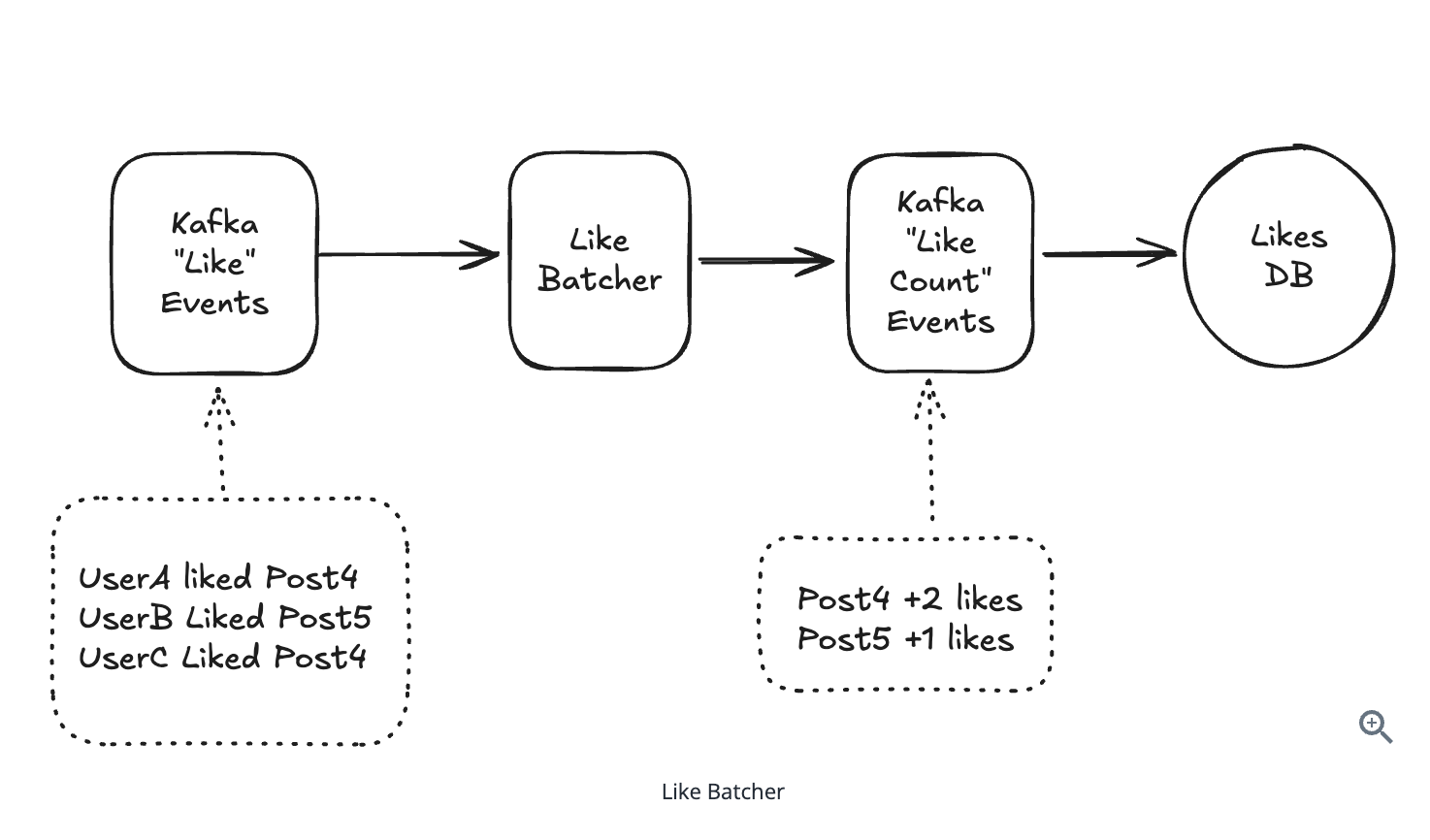

10.5.7. Batching and Hierarchical Aggregation

- Batching and preprocessing immediately.

10.5.8. Streaming Problems (Write Heavy)

10.5.9. What happens when you have a hot key that’s too popular for even a single shard

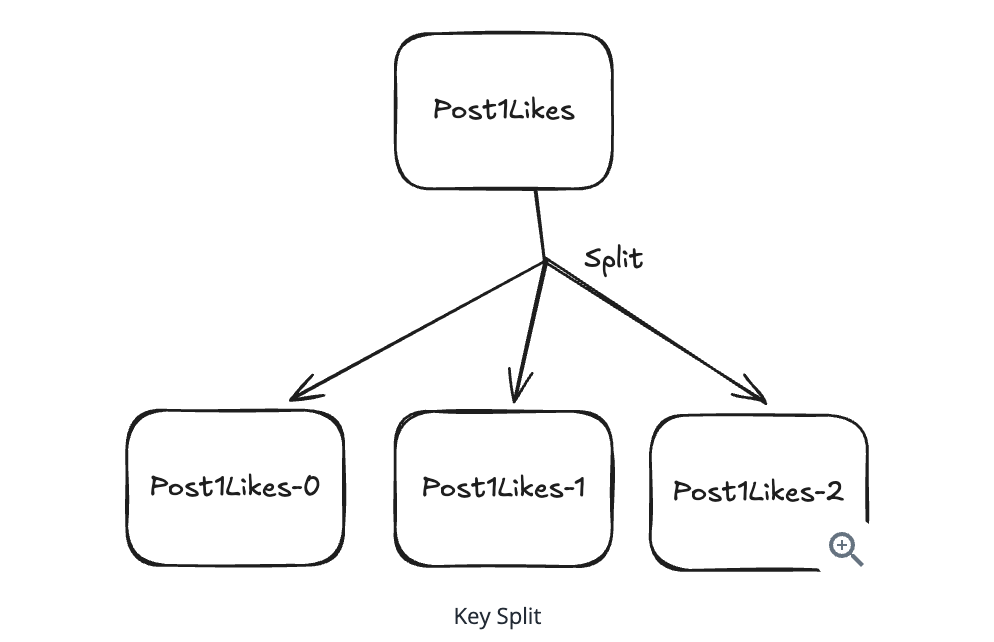

- Split All Keys to multiple shards

10.5.10. How do you handle resharding when you need to add more shards?

-

Take the system offline, rehash all data, and move it to new shards.

-

Production systems use gradual migration which targets writes to both location background => gradually migration.

10.5.11. When choosing a partitioning key for sharding, what should be the primary goal?

-

Balancing is good.

-

Do not any sharding exceed the average performace.

10.5.12. What is the main difference between horizontal and vertical partitioning?

-

Both split to multiple servers

-

Horizontal splits rows, vertical splits columns

10.5.13. Write queues are always the best solution for handling traffic bursts.

- Write timestamp client, because queue my delay.

10.5.14. When might load shedding be preferable to queuing for handling write bursts?

-

When writes are frequently updated (like location updates)

-

Have multiple event, lost some events is not considerable.

10.5.15. Gracefully shutdown

- Handle all batching requests before shut down.

10.5.16. What is the primary purpose of hierarchical aggregation in write scaling?

-

Hierarchical aggregation processes data in stages, reducing volume at each step.

-

It’s used for high-volume data where you need aggregated views rather than individual events.

10.5.17. When resharding a database, the dual-write phase ensures no data is lost during migration.

- During resharding, you write to both old and new shards but read with preference for the new shard.

10.5.18. Splitting hot keys dynamically requires both readers and writers to agree on which keys are hot.

- If writers spread writes across multiple sub-keys but readers don’t check all sub-keys, you have inconsistent data.

10.5.19 (Hay). What is the fundamental principle behind all write scaling strategies?

- Reduce throughput per component

10.5.20. LSM Tree (Log-Structured Merge-tree)

– Built for heavy write volume.

- Appends in memory then merges on disk. Point reads cost extra hops.

10.6. Handling Large Blobs

10.6.1. Pre-signed url:

- Use for clients temporary, scoped credentials to interact directly with storage.

10.6.2. Simple Direct Upload

-

content-length-range: Set min/max file sizes to prevent someone uploading 10GB when you expect 10MB

-

content-type: Ensure that profile picture endpoint only accepts images, not videos

10.6.3. Simple Direct Download

-

Blob storage signatures (like S3 presigned URLs): are validated by the storage service using your cloud credentials. The storage service has your secret key and can verify that you generated the signature.

-

CDN signatures (like CloudFront signed URLs): are validated by the CDN edge servers using public/private key cryptography.

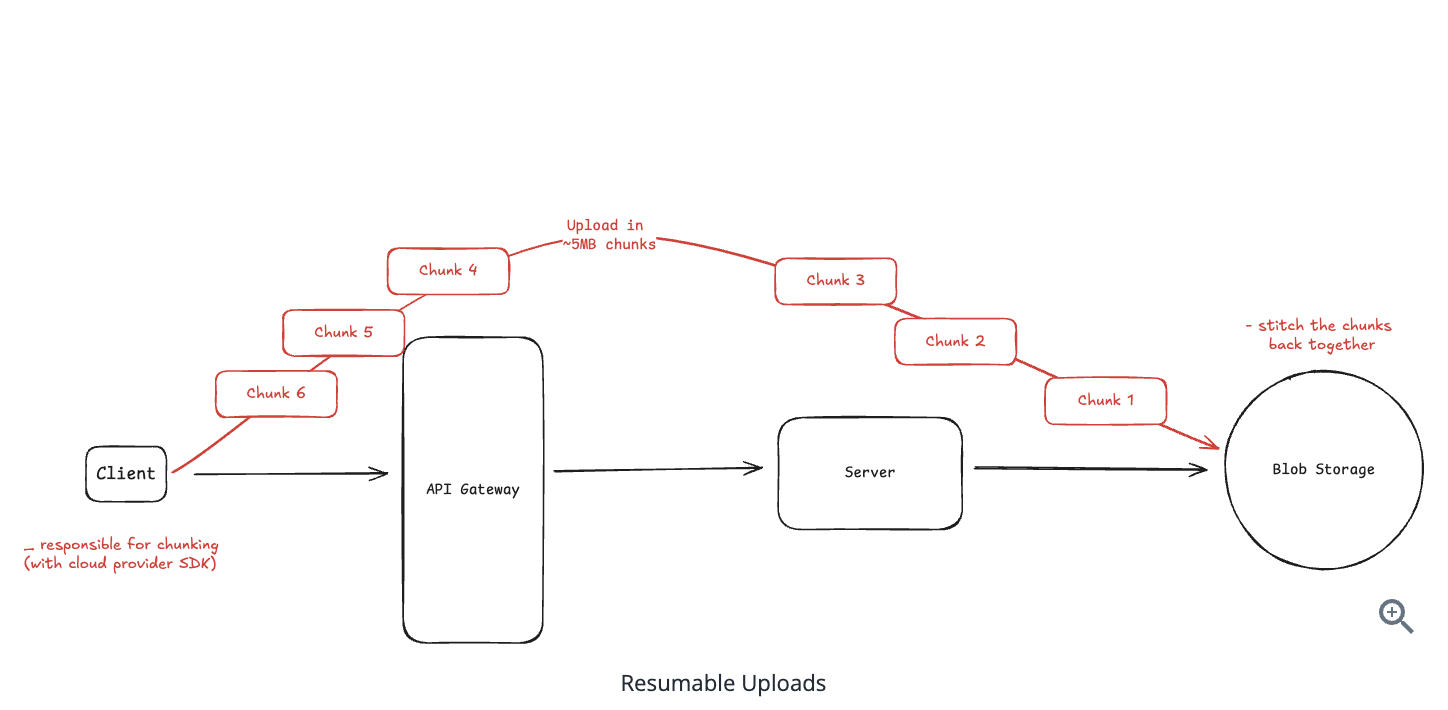

10.6.4. Resumable Uploads for Large Files

10.6.5. State Synchronization Challenges

Use database to track it

CREATE TABLE files (

id UUID PRIMARY KEY,

user_id UUID NOT NULL,

filename VARCHAR(255),

size_bytes BIGINT,

content_type VARCHAR(100),

storage_key VARCHAR(500), -- s3://bucket/user123/files/abc-123.pdf

status VARCHAR(50), -- 'pending', 'uploading', 'completed', 'failed'

created_at TIMESTAMP,

updated_at TIMESTAMP

);

-

Race conditions: The database might show ‘completed’ before the file actually exists in storage

-

Orphaned files: The client might crash after uploading but before notifying you, leaving files in storage with no database record

-

Malicious clients: Users could mark uploads as complete without actually uploading anything

-

Network failures: The completion notification might fail to reach your servers

10.6.6. What if the upload fails at 99%?

-

When files exceed 100MB, you should use chunked uploads - S3 multipart uploads (5MB+ parts), GCS resumable uploads (any chunk size), or Azure block blobs (4MB+ blocks).

-

When a connection drops, the client doesn’t start over. Instead, it queries which parts already uploaded; using ListParts in S3, checking the resumable session status in GCS, or listing committed blocks in Azure.

-

If parts 1-19 succeeded but part 20 failed, the client resumes from part 20.

10.6.7. How do you prevent abuse

-

Run virus scans, content validation, and any other checks before moving files to the public bucket.

-

Only after these checks pass do you move the file to its final location and update the database status to “available.”

10.6.8. How do you handle metadata

-

The storage key is your connection point. Use a consistent pattern like uploads/{user_id}/{timestamp}/{uuid} that includes useful info but prevents collisions.

-

Add metadata with: who uploaded it, what it’s for, processing instructions

-

Generate the hash key.

10.6.9. How do you ensure downloads are fast

-

CDNs solve the geography problem by caching content at edge locations worldwide.

-

The solution is range requests - HTTP’s ability to download specific byte ranges of a file bytes=0-10485759 (first 10MB)

GET /large-file.zip

Range: bytes=0-10485759 (first 10MB)

-

Resumes download feature: Modern browsers and download managers handle this automatically if your storage and CDN support range requests

-

The pragmatic approach: serve everything through CDN with appropriate cache headers. Ensure range requests work for large files. Let the CDN and browser handle the optimization.

10.6.10. What is the primary problem with proxying large files through application servers?

- Application servers become bottlenecks with no added value

10.6.11. Generate presigned urls

- Presigned urls are generated entirely in your application’s memory using your cloud credentials - no network call to storage is needed.

10.6.12. What should you include in presigned URLs to prevent abuse?

- Content-length-range and content-type restrictions

10.6.13. With chunked uploads, the storage service tracks which parts uploaded successfully.

- The storage service maintains state about completed parts using session IDs, allowing clients to query which parts need to be retried.

10.6.14. What is the main challenge with state synchronization in direct uploads?

- Since uploads bypass your servers, your database metadata can become out of sync with what actually exists in blob storage, requiring event notifications and reconciliation.

10.6.15. How do cloud storage services help with state synchronization?

- Storage services publish events (S3 to SNS/SQS, GCS to Pub/Sub) when files are uploaded, letting your system update database status reliably.

10.6.16. CDN signatures are validated by the storage service using public/private key cryptography

10.6.17. At what file size should you typically consider using direct uploads instead of proxying through servers?

-

Around 100MB

-

Direct Upload: Return presigned url for client, client upload to S3.

-

Proxy through server: Upload -> Server -> S3.

10.6.18. After multipart upload completion, object storage combine all the multi-parts to a file

- Once multipart upload completes, the storage service combines all parts into a single object - the individual chunks no longer exist from the storage perspective.

10.6.19 (Hay). When should you NOT use direct uploads in a system design?

-

Need for real-time content validation during upload

-

You can control direct upload from client

10.6.20. Range requests enable resumable downloads for large files.

- Yes

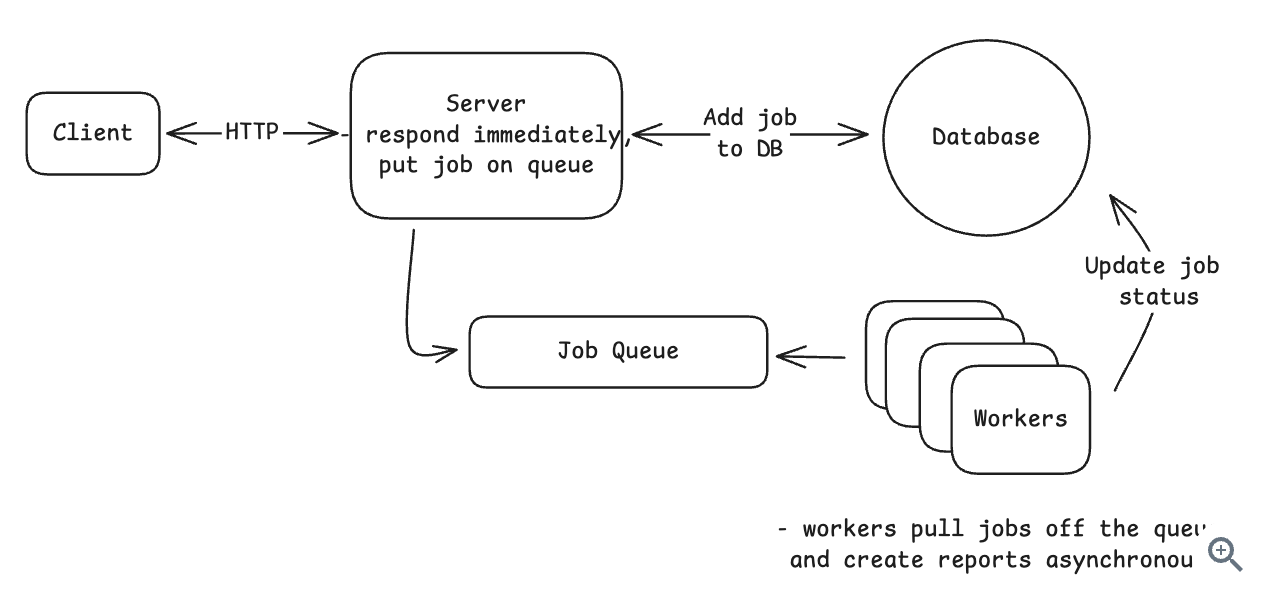

10.7. Managing Long Running Tasks (Async Worker)

10.7.1. Problem

-

When user generate the PDF report => The whole process takes at least 45 seconds => blocking in the UI.

-

With synchronous processing, the user’s browser sits waiting for 45 seconds. Most web servers and load balancers enforce timeout limits around 30-60 seconds, so the request might not even complete.

10.7.2. Solution

- Split it to 2 parts: We’re generating your report + We’ll notify you when it’s ready

10.7.3. When to use

- The moment you hear “video transcoding”, “image processing”, “PDF generation”, “sending bulk emails”, or “data exports” that’s your cue.

10.7.4. Handling Failures

-

What happens if the worker crashes while working the job? => The job will be retried by another worker.

-

Typically, you’ll have a heartbeat mechanism that periodically checks in with the queue to let it know that the worker is still alive.

-

The interval of the heartbeat is a key design decision.

Message queue:

-

SQS: visibility timeout.

-

RabbitMQ: heartbeat interval.

-

Kafka: session timeout.

10.7.5. Handling Repeated Failures

Question: What happens if a job keeps failing? Maybe there’s a bug in your code or bad input data that crashes the worker every time.

Solution: Add to dead letter queue

Message queue:

-

SQS: redrive policy.

-

RabbitMQ: dead letter exchange.

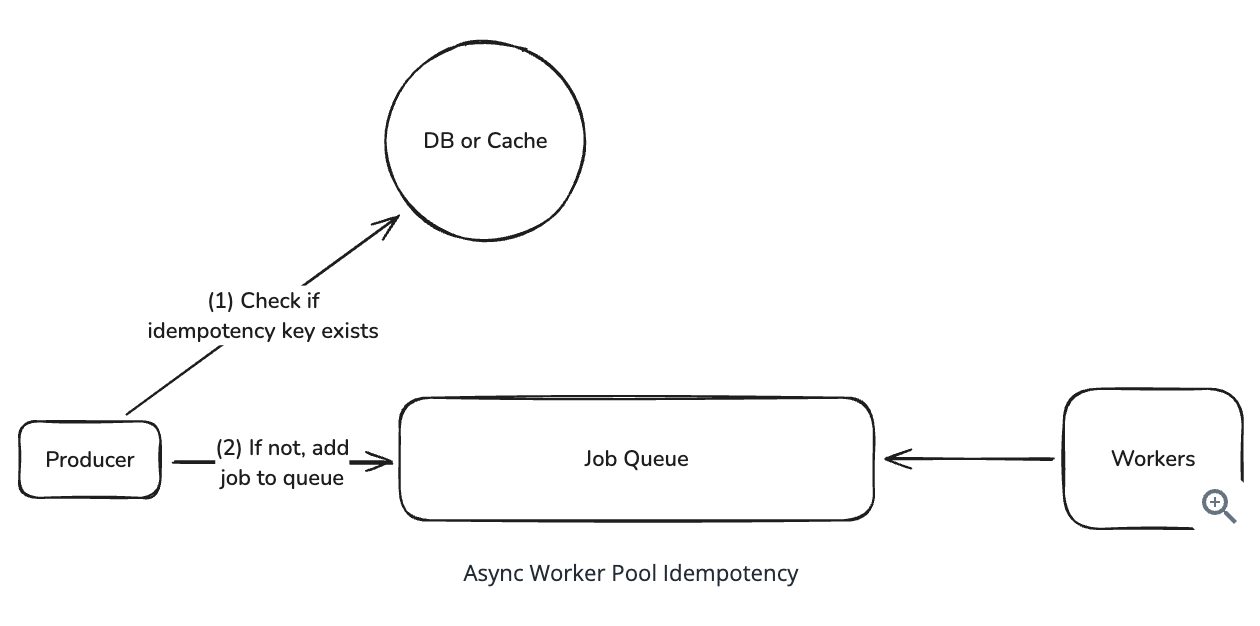

10.7.6. Preventing Duplicate Work

Question: A user gets impatient and clicks ‘Generate Report’ three times. Now you have three identical jobs in the queue. How do you prevent doing the same work multiple times ?

Solution: Use idempotency keys (hash the deterministic IDs from input data)

10.7.7. Managing Queue Backpressure

Question: It’s Black Friday and suddenly you’re getting 10x more jobs than usual. Your workers can’t keep up. The queue grows to millions of pending jobs. What do you do?

- When queue is full => blocking the tasks.

Solution: Use backpressure => Add the depth limits and reject new jobs when the queue are full => “System Busy” response, rather than accepting work that you can’t handle.

10.7.8. Handling Mixed Workloads

Question: Some of your PDF reports take 5 seconds, but end-of-year reports take 5 hours. They’re all in the same queue. What problems does this cause?

Problem:

- Long jobs block short ones. A user requesting a simple report waits hours behind someone’s massive year-end export

Solution:

- Split multiple queues due to job type and expected duration

queues:

fast:

max_duration: 60s

worker_count: 50

instance_type: t3.medium

slow:

max_duration: 6h

worker_count: 10

instance_type: c5.xlarge

10.7.9. Orchestrating Job Dependencies

Question: What if generating a report requires three steps: fetch data, generate PDF, then email it. How do you handle jobs that depend on other jobs

Solution: Using workflow machine.

{

"workflow_id": "report_123",

"step": "generate_pdf",

"previous_steps": ["fetch_data"],

"context": {

"user_id": 456,

"data_s3_url": "s3://bucket/data.json"

}

}

10.7.10. In the async worker pattern, the web server’s job is to perform the actual heavy processing work.

- Web Servers is only receive the jobs.

10.7.11. Redis with Bull/BullMQ

- It is in-memory queue, allow: delayed, stalled, completed, failed,…

10.7.12. What is the main advantage of separating web servers from worker processes?

- Independent scaling based on specific needs

10.7.13. Serverless functions are ideal for processing jobs that take several hours to complete.

- No, because serverless functions have execution time limits (typically 15 minutes)

10.7.14. What is a Dead Letter Queue (DLQ) used for?

- A DLQ isolates jobs that fail repeatedly (after 3-5 retries) to prevent poison messages => retry 3 - 5 failed to DLQ.

10.7.15. How do you prevent duplicate work when a user clicks ‘Generate Report’ multiple times?

- Prevent duplicates => Use Idempotency keys

10.7.16. When implementing backpressure, you should reject new jobs when the queue depth exceeds a threshold.

- Yes

10.7.17. What metric should you monitor for autoscaling workers?

- Queue depth is the key metric for worker autoscaling - by the time CPU is high, your queue is already backed up

10.7.18. How should you handle mixed workloads where some jobs take 5 seconds and others take 5 hours?

- Separate queues by job type or duration

10.7.19. For complex workflows with job dependencies, what should you consider using?

- Workflow orchestrators like AWS Step Functions or Temporal

10.7.20. When should you proactively suggest async workers in a system design interview?

- Operations that take seconds to minutes (video transcoding, image processing, PDF generation, bulk emails) are clear signals for async processing.

10.7.21. The async worker pattern introduces eventual consistency since work isn’t done when the API returns.

- This is a key tradeoff - users might see stale data until background processing completes, but they get immediate response and better overall system performance.

10.7.22. What is the main benefit of using Kafka for job queues compared to Redis?

- Kafka’s append-only log allows message replay, fan-out to multiple consumers, and maintains strict ordering

=> Kafka strict ordering guarantees.

11. Advanced Data Structure

11.1. Bloom Filter (Yes or No)

- Tests if an element is possibly in a set

11.2. Count-Min Sketch (Frequency)

- Approximates frequency counts of items

11.3. Hyperloglog (Number of unique item)

- Estimates the cardinality (number of unique items)

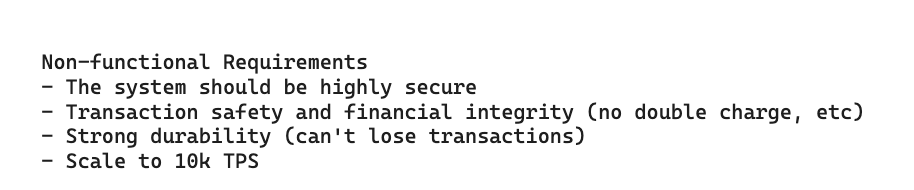

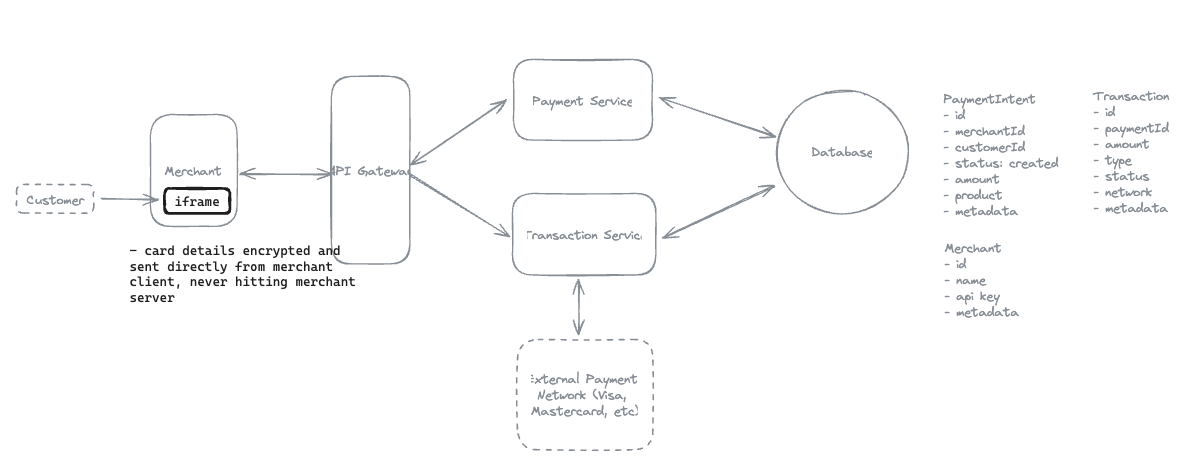

12. Payment System

12.1. Functional Requirements:

-

Merchants should be able to initiate payment requests (charge a customer for a specific amount).

-

Users should be able to pay for products with credit/debit cards.

-

Merchants should be able to view status updates for payments (e.g., pending, success, failed).

12.2. Non-funtional requirements

12.3. Entities:

12.4. API Design:

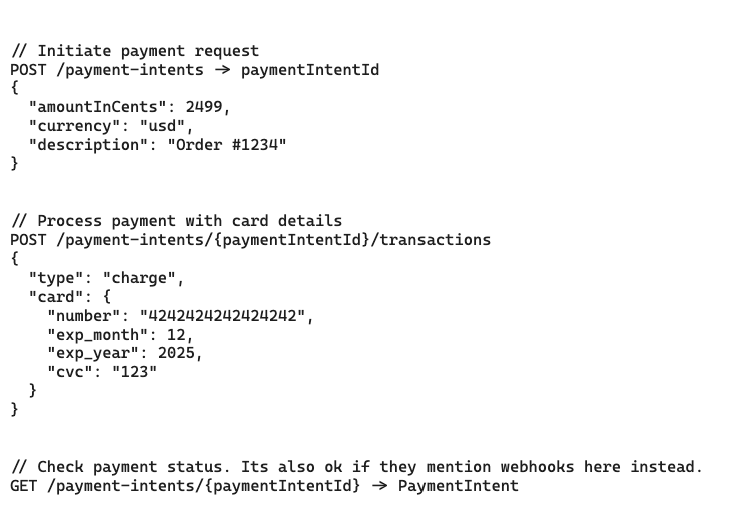

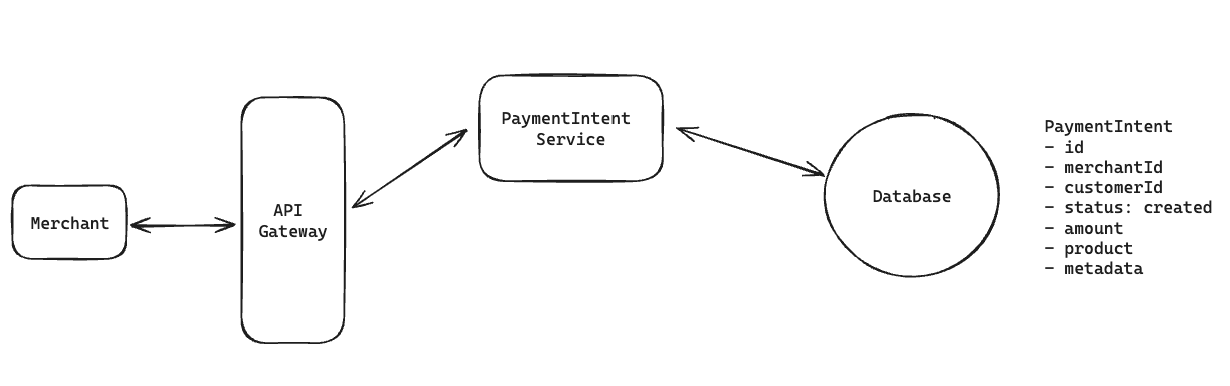

12.5. How will merchants be able to initiate payment requests?

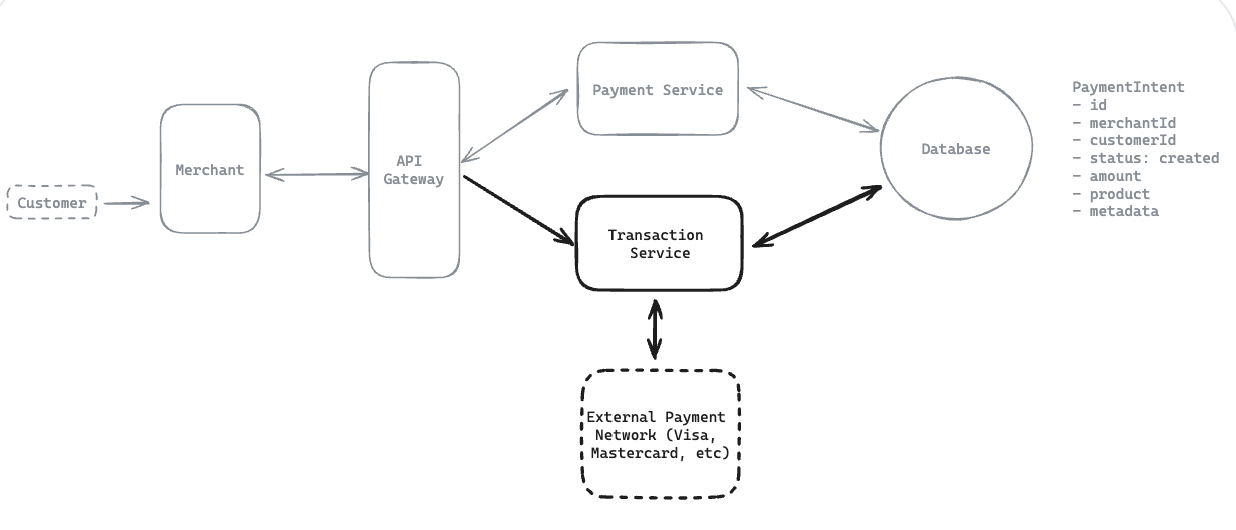

12.6. How will users be able to pay for products with credit/debit cards?

12.7. How will merchants be able to view status updates for payments?

Notes: Client -> API Gateway -> Service -> Database (Every functional requirements => can be implement with this patterns)

- Implement query transaction API, with status: ‘created’, ‘processing’, ‘succeeded’, or ‘failed’

12.8. How would you ensure secure authentication for merchants using the payment system?

Notes: Client -> API Gateway -> Service -> Database (Every functional requirements => can be implement with this patterns)

-

To ensure secure merchant authentication, we’ll implement API key management with request signing.

-

Each merchant gets both a public API key for identification and a private secret key for generating time-bound signatures.

=> Merchant use private key to hash the requests, public key Zalopay to encrypt it => Zalopay engine use private key to decode the payload + merchant key to resolve it.

12.9. How would you secure customer credit card information while in transit?

Notes: Client -> API Gateway -> Service -> Database (Every functional requirements => can be implement with this patterns)

-

Our JavaScript SDK immediately encrypts card details using Zalopay public key before data leaves the customer’s browser.

-

After processing, only store tokens as payment methods, never the card details => Merchant use the token for next payment.

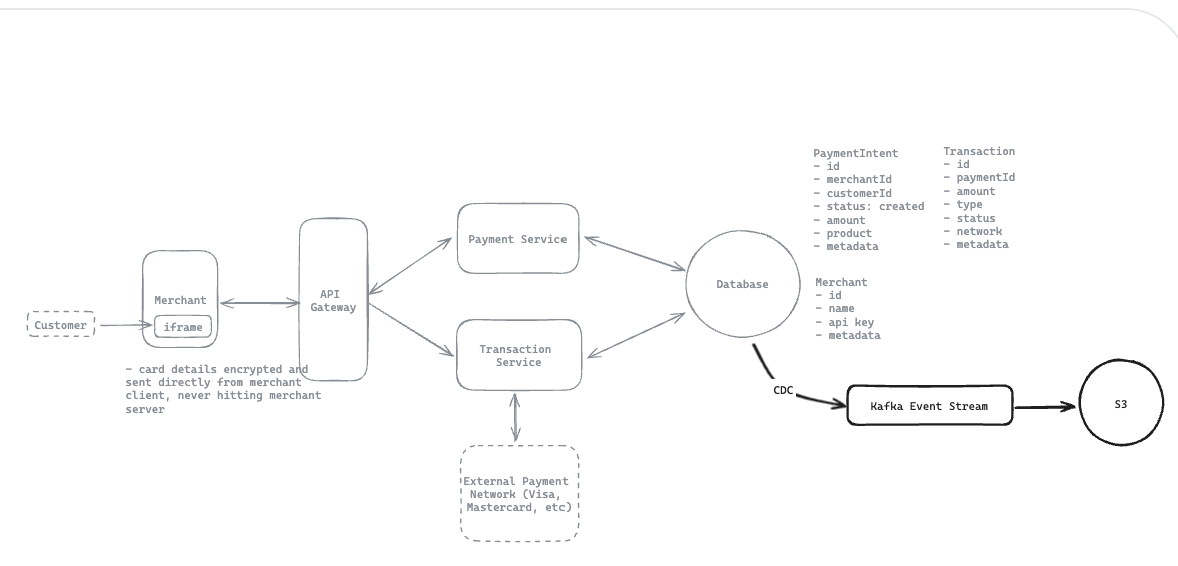

12.10. How would you ensure that no transaction data is ever lost and maintain complete auditability for compliance?

Notes: Client -> API Gateway -> Service -> Database (Every functional requirements => can be implement with this patterns)

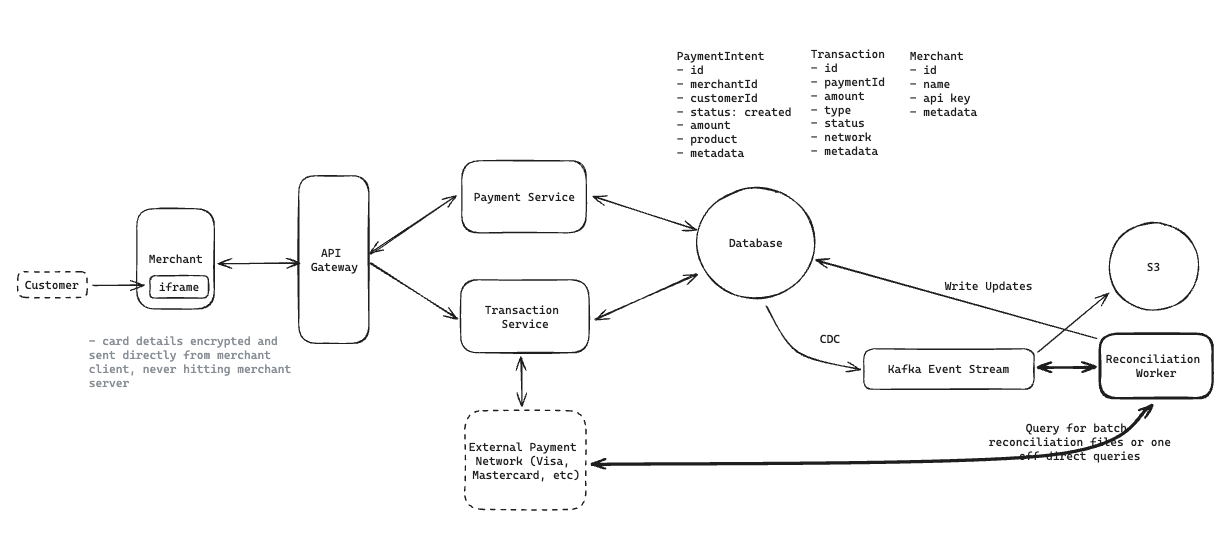

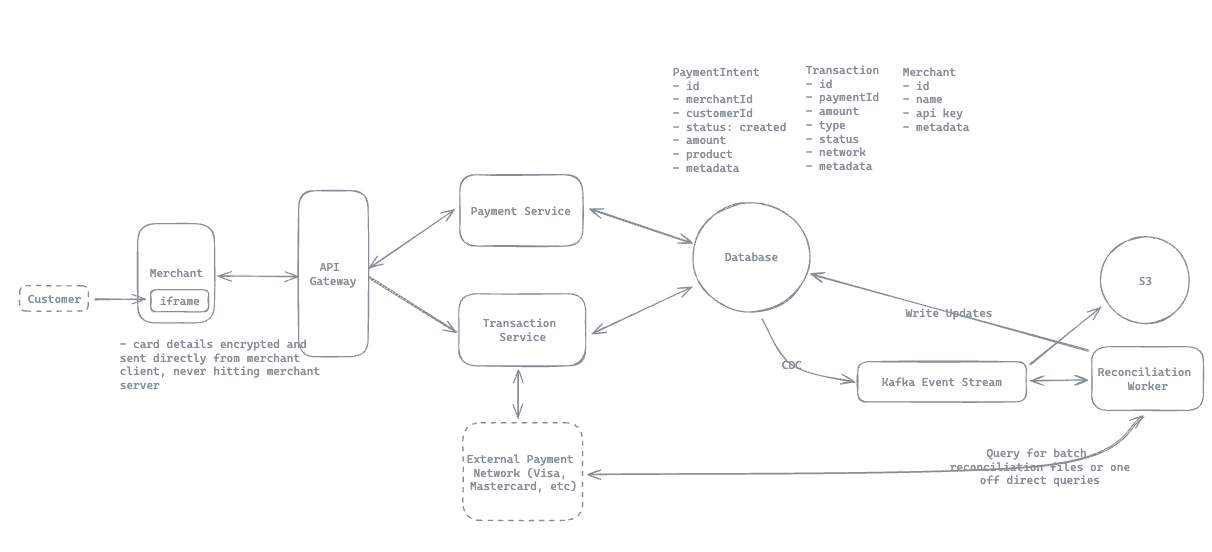

12.11. How would you ensure transaction safety and financial integrity despite the inherently asynchronous nature of external payment networks?

-

To ensure transaction safety despite asynchronous payment networks, we’ll implement an event sourcing architecture with reconciliation.

-

Implement reconcile workers to make sure transaction work.

12.12. How would you scale the payment system to handle 10,000+ transactions per second?

Notes: Client -> API Gateway -> Service -> Database (Every functional requirements => can be implement with this patterns)

-

Horizontal scaling with load balancers distributing traffic across stateless service instances.

-

For Kafka, our event log, we’ll set up multiple partitions (3-5) to handle our throughput needs, payment_intent_id to ensure ordering.

-

Our database, handling around 1,000 write operations per second (an order of magnitude smaller than our event log), can be managed with a well-optimized PostgreSQL instance with read replicas for distributing read operations.

-

Data retention policy.

12.13. Idempotent operations ensure repeated requests produce the same final state.

- True

12.14. Payment systems must handle the inherently asynchronous nature of external payment networks like Visa and Mastercard.

- Yes

12.15. Which technique BEST prevents duplicate charges when external service calls may time out?

- Idempotency keys on client requests

12.16. A payment processor must absorb 10,000 transactions per second spikes. Which scaling pattern distributes load horizontally with minimal coordination?

- Stateless microservices behind a load balancer

12.17. Message queues offering at-least-once delivery can result in duplicate message processing after consumer failures.

- If a consumer crashes before acknowledging a message, the broker re-delivers it to another consumer.

12.18. Which security technique limits a merchant’s PCI DSS scope by never exposing card data to their servers?

- Tokenization with client-side encryption.

12.19. What happens when a duplicate idempotency key is received for an already successful charge?

- The original result is returned without a new charge

12.20. Marking a transaction as ‘pending verification’ after a network timeout avoids overcharging customers.

-

Yes

-

Deferred verification acknowledges uncertainty instead of assuming failure.

-

Preventing merchants from retrying prematurely and creating duplicate charges while the processor reconciles the real outcome.

12.21. Event sourcing allows rebuilding current payment state by replaying immutable events.

- Storing every state-changing event in an append-only log lets systems materialize fresh views at any time, aiding auditing, recovery, and debugging.